In this blog post, I will discuss the solutions for monitoring databases (which includes alerting) I have worked with and recommended in the past to my clients. This survey will mostly focus on MySQL solutions.

One of the most common issues I come across when working with clients is monitoring and alerting. Many times, companies will fall into one of these categories:

- No monitoring or alerting. This means they have no idea what’s going on in their environment whatsoever.

- Inadequate monitoring. Maybe people in this camp are using a platform that just tells them the database is up or connections are happening, but there is no insight into what the database is doing.

- Too much monitoring and alerting. Companies in this camp have tons of dashboards filled with graphs, and their inbox is full of alerts that get promptly ignored. This type of monitoring is just as useful as the first option. Alert fatigue is a real thing!

With my clients, I like to talk about what monitoring they need and what will work for them.

Before we get started, I do want to point out that I have borrowed some text and/or graphics from the websites and promotional material of some of the products I’m discussing.

Simple Alerting

Percona provides a Nagios plugin for database alerts: http://ift.tt/1RohePo.

I also like to point out to clients what metrics are important to monitor long term to make sure there are no performance issues. I prefer the following approach:

- On the hardware level:

- Monitor CPU, IO, network usage and how it trends monthly. If some resource consumption comes to a critical level, this might be a signal that you need more capacity.

- On the MySQL server level:

- Monitor connections, active threads, table locks, row locks, InnoDB IO and buffer pool usage

- For replication, monitor seconds behind master (SBM), binlog size and replication errors. In Percona XtraDB Cluster, you might want to watch wsrep_local_recv_queue.

- On the query level:

- Regularly check query execution and response time, and make sure it stays within acceptable levels. When execution time approaches or exceeds established levels, evaluate ways to optimize your queries.

- On the application side:

- Monitor that response time is within established SLAs.

High-Level Monitoring Solution Comparison

|

PMM |

MonYOG |

Severalnines |

VividCortex |

SelectStar |

| Databases Supported |

MySQL, MongoDB and others with custom addons |

MySQL |

MySQL, MongoDB, PostgreSQL |

MySQL, MongoDB, PostgreSQL, Redis |

MySQL, MongoDB, PostgreSQL, Hadoop, Cassandra, Amazon Dynamo, IBM DB2, SQL Server, Oracle |

| Open Source |

x |

|

|

|

|

| Cost |

Free |

Subscription per node |

Subscription per node |

Subscription per instance |

Subscription per instance |

Cloud or

On Premises |

On premises |

On premises |

On premises |

Cloud with on premises collector |

Cloud with on premises collector |

| Has Agents |

x |

|

|

x |

|

| Monitoring |

x |

x |

x |

x |

x |

| Alerting |

Yes, but requires custom setup |

x |

x |

x |

x |

| Replication Topology Management |

x |

|

x |

|

|

| Query Analytics |

x |

x |

|

x |

x |

| Configuration Management |

|

x |

x |

|

|

| Backup Management |

|

|

x |

|

|

| OS Metrics |

x |

x |

|

x |

x |

| Configuration Advisors |

|

x |

|

|

x |

| Failover Management |

x |

|

x |

|

|

ProxySQL and

HA Proxy Support |

Monitors ProxySQL |

|

x |

|

|

PMM

http://ift.tt/20RDjvr

http://ift.tt/1S6sNir

http://ift.tt/1Socbl6

Percona Monitoring and Management (PMM) is a fully open source solution for managing MySQL platform performance and tuning query performance. It allows DBAs and application developers to optimize the performance of the database layer. PMM is an on-premises solution that keeps all of your performance and query data inside the confines of your environment, with no requirement for data to cross the Internet.

Assembled from a supported package of “best-of-breed” open source tools such as Prometheus, Grafana and Percona’s Query Analytics, PMM delivers results right out of the box.

With PMM, anyone with database maintenance responsibilities can get more visibility for actionable enhancements, realize faster issue resolution times, increase performance through focused optimization and better manage resources. More information allows you to concentrate efforts on the areas that yield the highest value, rather than hunting and pecking for speed.

PMM monitors and provides performance data for Oracle’s MySQL Community and Enterprise Servers, as well as Percona Server for MySQL and MariaDB.

Alerting

In the current version of PMM, custom alerting can be set up. Percona has a guide here: http://ift.tt/2jiJvjH.

Architecture

The PMM platform is based on a simple client-server model that enables efficient scalability. It includes the following modules:

- PMM Client is installed on every MySQL host that you want to monitor. It collects MySQL server metrics, general system metrics, and query analytics data for a complete performance overview. Collected data is sent to the PMM Server.

- PMM Server aggregates collected data and presents it in the form of tables, dashboards and graphs in a web interface.

MySQL Configuration

Percona recommends certain settings to get the most out of PMM. You can get more information and a guide here: http://ift.tt/2nwJ5WD.

Advantages

- Fast setup

- Fully supported and backed by Percona

- Impressive roadmap ahead

- Monitors your database in depth

- Query analytics

- Quick setup docker container

- Free and open source

Disadvantages

- New, could still have some growing pains

- Requires agents on database machines

Severalnines

http://ift.tt/1lZf79Y

Severalnines ClusterControl provides access to 100+ key database and host metrics that matter to your operational performance. You can visualize historical performance in custom dashboards to establish operational baselines and capacity planning. It lets you proactively monitor and receive advice to address immediate and potential database and server issues, and ships with over 100 built-in advisors or easily-writeable custom advisors for your specific needs.

Severalnines is more sysadmin focused.

Architecture

ClusterControl is an agentless management and automation software for database clusters. It helps deploy, monitor, manage and scale your database server/cluster directly from ClusterControl user interface.

ClusterControl consists of four components:

| Component |

Package Naming |

Role |

| ClusterControl controller (cmon) |

clustercontrol- controller |

The brain of ClusterControl. A backend service performing automation, management, monitoring and scheduling tasks. All the collected data will be stored directly inside CMON database |

| ClusterControl REST API |

clustercontrol-cmonapi |

Interprets request and response data between ClusterControl UI and CMON database |

| ClusterControl UI |

clustercontrol |

A modern web user interface to visualize and manage the cluster. It interacts with CMON controller via remote procedure call (RPC) or REST API interface |

| ClusterControl NodeJS |

clustercontrol-nodejs |

This optional package is introduced in ClusterControl version 1.2.12 to provide an interface for notification services and integration with 3rd party tools |

Advantages

- Agentless

- Monitors, deploys and manages:

- Database

- Configuration

- Backups

- Users

- Simple web GUI to manage your databases, alerts, users, settings

- Can create custom monitors or jobs

- Can off-load and compress backups

- Great support team

- Rich feature set and multiple databases supported

Disadvantages

- Cost per node

- UI can occasionally be clunky

- Query tools lack as compared to other solutions here

MONyog

http://ift.tt/1gsatxW

MONyog MySQL Monitor and Advisor is a “MySQL DBA in a box” that helps MySQL DBAs manage more MySQL servers, tune their current MySQL servers and find and fix problems with their MySQL database applications before they can become serious problems or costly outages.

MONyog proactively monitors enterprise database environments and provides expert advice on how even those new to MySQL can tighten security, optimize performance and reduce downtime of their MySQL powered systems.

MONyog is more DBA focused and focuses on the MySQL configuration and queries.

Architecture

MONyog web server runs on Linux, monitoring MySQL on all platforms and also monitoring OS-data on Linux servers. To retrieve OS metrics, MONyog uses SSH. However, with this scenario (MONyog installed on a Linux machine) MONyog web-server/agent cannot collect Windows OS metrics.

Of course, the client where the MONyog output is viewed can be any browser supporting AJAX on any platform. MONyog can be installed on a remote PC as well as the server. It does not require processing, and with agentless monitoring it can collect and retrieve data from the server.

Advantages

- Setup and startup within two minutes

- Agentless

- Good query tools

- Manages configuration

- Great advisors for database tuning built-in

- Most comprehensive and detailed alerting

Disadvantages

- Cost per node

- Only supports MySQL

VividCortex

VividCortex is a good cloud-based tool to see what your production databases are doing. It is a modern SaaS database performance monitoring platform that significantly eases the pain of database performance at scale, on distributed and polyglot systems, for the entire engineering team. It’s hosted for you with industry-leading security, and is continuously improved and maintained. VividCortex measures and analyzes the system’s work and resource consumption. The result is an immediate insight into query performance, better performance and quality, faster time-to-market and reduced cost and effort.

Architecture

VividCortex is the combination of agent programs, APIs and a web application. You install the agents on your servers, they send data to their APIs, and you access the results through the web application at http://ift.tt/1OgyUwi. VividCortex has a diagram on their site showing how it works:

The agents are self-supervising, managed by an agent called vc-agent-007. You can read more about the agents in the agent-specific documentation. They send primarily time-series metrics to the APIs, at one-second granularity. It sometimes sends additional metadata as well. For example, query digests are required to show what query is responsible for specific query-related metrics.

On the backend, a distributed, fully multi-tenant service stores your data separately from all other customers. VividCortex servers are currently hosted in the Amazon AWS public cloud.

Advantages

- Great visibility into query-level performance to pinpoint optimization efforts

- Granularity, with the ability to identify performance fluctuations down to a one-second resolution

- Smart anomaly detection using advanced statistics and machine learning to reduce false-positives and make alerts meaningful and actionable

- Unique collaboration tools, enabling developers to answer many of their own questions and freeing DBAs to be more responsive and proactive.

Disadvantages

- Cloud-based tools may not be desirable in a secure environment

- Cost

- Not useful if you lose outside network access during an incident

- Dependent on AWS availability

SelectStar

https://selectstar.io

SelectStar monitors key metrics for many different database types, and has a comprehensive alerts and recommendations system. SelectStar supports monitoring and alerts on:

- MySQL, Percona Server for MySQL, MariaDB

- PostgreSQL

- Oracle

- MongoDB

- Microsoft SQL

- DB2

- Amazon RDS and Aurora

- Hadoop

- Cassandra

The alerts and recommendations are designed to ensure you have an immediate understanding of key issues — and where they are coming from. You can pinpoint the exact database instance that may be causing the issue, or go further up the chain and see if it’s an issue impacting several database instances at the host level.

Recommendations are often tied to alerts — if you have a red alert, there’s going to be a recommendation tied to it on how you can improve. However, the recommendations pop up even if your database is completely healthy — ensuring that you have visibility into how you can improve your configuration before you actually have an issue impacting performance.

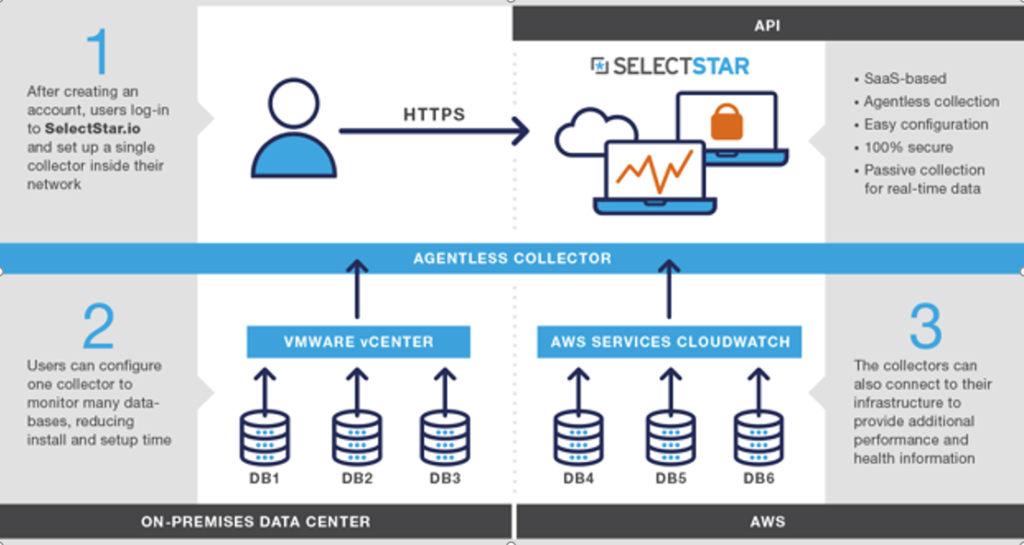

Architecture

Using agentless collectors, SelectStar gathers data from both your on-premises and AWS platforms so that you can have insight into all of your database instances.

The collector is an independent machine within your infrastructure that pulls data from your database. It is low impact in order to not impact performance. This is a different approach from all of the other monitoring tools I have looked at.

Advantages

- Multiple database technologies (the most out of the tools presented here)

- Great visibility into query-level performance to pinpoint optimization efforts

- Agentless

- Good query tools

- Great advisors for database tuning built in

- Good alerting

- Fast setup

- Monitors your database in depth

- Query analytics

Disadvantages

- Cloud-based tools may not be desirable in a secure environment

- Cost

- New, could still have some growing pains

- Still requires an on-premises collector

So What Do I Recommend?

“It depends.” – Peter Z., CEO Percona

As always, I recommend whatever works best for your workload, in your environment, and within the standards of your company’s practices!

via MySQL Performance Blog

Monitoring Databases: A Product Comparison

Welcome to another post in the series of Percona Live featured session blogs! In these blogs, we’ll highlight some of the session speakers that will be at this year’s Percona Live conference. We’ll also discuss how these sessions can help you improve your database environment. Make sure to read to the end to get a special Percona Live 2017 registration bonus!

Percona: How did you get into database technology? What do you love about it?