I’m happy to announce Finch: a new MySQL benchmarking tool for experts, developers, and modern infrastructure.

TL;DR: https://square.github.io/finch/

Planet MySQL

Hello friends! If you plan to migrate your database from on-prem servers to RDS (either Aurora or RDS for MySQL), you usually have little choice but to use logical backups made with tools such as mysqldump, mysqlpump, mydumper, or similar. You could also take a physical backup to S3 with Percona XtraBackup. However, we have not said which flavor (MySQL, Percona Server for MySQL, or MariaDB) or which version (5.5, 5.6, or MariaDB 10.X) the source is, and many of those combinations are not supported by that strategy. So a logical backup is the way to go.

Depending on the size of the instance or the schema to be migrated, we can choose one tool or another to take advantage of the resources of the servers involved and save time.

In this blog post, for the sake of simplicity, we are going to use mysqldump to dump a single schema. The most curious part is that the schema contains objects with a specific DEFINER, and that DEFINER must not be changed.

If you want to create the same lab, you can find it here.

Below is the list of objects to migrate. The schema is called “migration” and contains the following objects:

mysql Source> SELECT *
  FROM (SELECT event_schema AS SCHEMA_NAME,
               event_name AS OBJECT_NAME,
               definer,
               'EVENT' AS OBJECT_TYPE
        FROM information_schema.events
        UNION ALL
        SELECT routine_schema AS SCHEMA_NAME,
               routine_name AS OBJECT_NAME,
               definer,
               'ROUTINE' AS OBJECT_TYPE
        FROM information_schema.routines
        UNION ALL
        SELECT trigger_schema AS SCHEMA_NAME,
               trigger_name AS OBJECT_NAME,
               definer,
               'TRIGGER' AS OBJECT_TYPE
        FROM information_schema.triggers
        UNION ALL
        SELECT table_schema AS SCHEMA_NAME,
               table_name AS OBJECT_NAME,
               definer,
               'VIEW' AS OBJECT_TYPE
        FROM information_schema.views
        UNION ALL
        -- Base tables only: views have a NULL engine
        SELECT table_schema AS SCHEMA_NAME,
               table_name AS OBJECT_NAME,
               '',
               'TABLE' AS OBJECT_TYPE
        FROM information_schema.tables
        WHERE engine IS NOT NULL
       ) OBJECTS
  WHERE OBJECTS.SCHEMA_NAME = 'migration'
  ORDER BY 3, 4;

+-------------+-----------------------+---------+-------------+
| SCHEMA_NAME | OBJECT_NAME           | DEFINER | OBJECT_TYPE |
+-------------+-----------------------+---------+-------------+
| migration   | persons               |         | TABLE       |
| migration   | persons_audit         |         | TABLE       |
| migration   | func_cube             | foo@%   | ROUTINE     |
| migration   | before_persons_update | foo@%   | TRIGGER     |
| migration   | v_persons             | foo@%   | VIEW        |
+-------------+-----------------------+---------+-------------+
5 rows in set (0.01 sec)

That’s right, that’s all we got.

The classic command for this kind of task is usually the following:

$ mysqldump --single-transaction -h source-host -u percona -ps3cre3t! migration --routines --triggers --compact --add-drop-table --skip-comments > migration.sql
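Before importing, it can help to list which accounts the dump uses as DEFINER. Creating an object owned by any account other than the importing user is exactly what triggers the SUPER (or, on MySQL 8.0, SET_USER_ID) requirement shown below. The following is a minimal sketch that assumes a POSIX shell with grep, sed, and sort. `list_definers` is a hypothetical helper name and is not part of mysqldump or any MySQL tool.

```shell
# Hypothetical helper: print each distinct DEFINER account found in a mysqldump file.
# Matches both the plain form (CREATE DEFINER=`foo`@`%` FUNCTION ...) and the
# versioned-comment forms mysqldump writes for triggers and views
# (e.g. /*!50017 DEFINER=`foo`@`%`*/).
list_definers() {
  dump_file="$1"
  grep -o 'DEFINER=`[^`]*`@`[^`]*`' "$dump_file" \
    | sed 's/^DEFINER=//' \
    | LC_ALL=C sort -u
}

# Example: list_definers migration.sql
```

For the dump in this example, the only account listed should be `foo`@`%`, which matches the DEFINER column of the query above. Any account printed here must exist on the destination, and the importing user must be allowed to create objects on its behalf.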

What is the next logical step on the RDS/Aurora instance (aka the “Destination”)?

A clarification is needed here. As you may have noticed, the objects belong to the user “foo,” an application user, and for security reasons the client or the interested party will very likely not give us its password.

Therefore, as DBAs, we will use the account with the broadest privileges AWS allows us to have (unfortunately, AWS does not grant the SUPER privilege). As we will show below, this causes a problem, but one that can be solved.

So, the command to execute the data import would be the following:

$ mysql -h <instance-endpoint> migration -u percona -ps3cre3t! -vv < migration.sql

And this is where the problems begin:

If you migrate to RDS MySQL or Aurora 5.7 (which we don’t recommend, as 5.7 reaches EOL on October 31, 2023!), you will probably get the following error:

--------------
DROP TABLE IF EXISTS `persons`
--------------
Query OK, 0 rows affected
--------------
/*!40101 SET @saved_cs_client = @@character_set_client */
--------------
Query OK, 0 rows affected
--------------
/*!50503 SET character_set_client = utf8mb4 */
--------------
Query OK, 0 rows affected
--------------
CREATE TABLE `persons` (
  `PersonID` int NOT NULL,
  `LastName` varchar(255) DEFAULT NULL,
  `FirstName` varchar(255) DEFAULT NULL,
  `Address` varchar(255) DEFAULT NULL,
  `City` varchar(255) DEFAULT NULL,
  PRIMARY KEY (`PersonID`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci
... (many more messages/lines)
--------------
/*!50003 CREATE*/ /*!50017 DEFINER=`foo`@`%`*/ /*!50003 TRIGGER `before_persons_update` BEFORE UPDATE ON `persons` FOR EACH ROW INSERT INTO persons_audit
SET PersonID = OLD.PersonID,
LastName = OLD.LastName,
City = OLD.City,
changedat = NOW() */
--------------
ERROR 1227 (42000) at line 23: Access denied; you need (at least one of) the SUPER privilege(s) for this operation
Bye

What does this error mean? The import is nothing more than a series of SQL statements, and we are not running it as “foo,” the owner of the objects (see the DEFINER column of the first query above). To create objects whose DEFINER is “foo,” the user “percona” needs a special privilege such as SUPER to act on behalf of “foo.” As mentioned earlier, however, AWS does not grant that privilege.

So?

Several options are possible; we will list some of them.

As you will see, the solution is not simple. I would call it complex, but not impossible.

Let’s see what happens if the RDS/Aurora version is from the MySQL 8 family. Using the same command to perform the import, this is the output:

--------------
DROP TABLE IF EXISTS `persons`
--------------
Query OK, 0 rows affected
--------------
/*!40101 SET @saved_cs_client = @@character_set_client */
--------------
Query OK, 0 rows affected
--------------
/*!50503 SET character_set_client = utf8mb4 */
--------------
Query OK, 0 rows affected
--------------
CREATE TABLE `persons` (
  `PersonID` int NOT NULL,
  `LastName` varchar(255) DEFAULT NULL,
  `FirstName` varchar(255) DEFAULT NULL,
  `Address` varchar(255) DEFAULT NULL,
  `City` varchar(255) DEFAULT NULL,
  PRIMARY KEY (`PersonID`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci
--------------
Query OK, 0 rows affected
... (many more messages/lines)
--------------
/*!50003 CREATE*/ /*!50017 DEFINER=`foo`@`%`*/ /*!50003 TRIGGER `before_persons_update` BEFORE UPDATE ON `persons` FOR EACH ROW INSERT INTO persons_audit
SET PersonID = OLD.PersonID,
LastName = OLD.LastName,
City = OLD.City,
changedat = NOW() */
--------------
ERROR 1227 (42000) at line 23: Access denied; you need (at least one of) the SUPER or SET_USER_ID privilege(s) for this operation

Oops! A different message appeared. This time it says we need “(at least one of) the SUPER or SET_USER_ID privilege(s) for this operation.”

Therefore, all we have to do now is grant the following privilege to the “percona” user:

mysql Destination> GRANT SET_USER_ID ON *.* TO 'percona';

And bingo! The import finishes without problems. Here are some of the statements that failed before and now succeed:

--------------
/*!50003 CREATE*/ /*!50017 DEFINER=`foo`@`%`*/ /*!50003 TRIGGER `before_persons_update` BEFORE UPDATE ON `persons` FOR EACH ROW INSERT INTO persons_audit
SET PersonID = OLD.PersonID,
LastName = OLD.LastName,
City = OLD.City,
changedat = NOW() */
--------------
Query OK, 0 rows affected
--------------
CREATE DEFINER=`foo`@`%` FUNCTION `func_cube`(num INT) RETURNS int
DETERMINISTIC
begin DECLARE totalcube INT; SET totalcube = num * num * num; RETURN totalcube; end
--------------
Query OK, 0 rows affected

In addition, the objects still belong to their original owner, that is, their DEFINER (the security context they run under):

mysql Destination> SELECT *
  FROM (SELECT event_schema AS SCHEMA_NAME,
               event_name AS OBJECT_NAME,
               definer,
               'EVENT' AS OBJECT_TYPE
        FROM information_schema.events
        UNION ALL
        SELECT routine_schema AS SCHEMA_NAME,
               routine_name AS OBJECT_NAME,
               definer,
               'ROUTINE' AS OBJECT_TYPE
        FROM information_schema.routines
        UNION ALL
        SELECT trigger_schema AS SCHEMA_NAME,
               trigger_name AS OBJECT_NAME,
               definer,
               'TRIGGER' AS OBJECT_TYPE
        FROM information_schema.triggers
        UNION ALL
        SELECT table_schema AS SCHEMA_NAME,
               table_name AS OBJECT_NAME,
               definer,
               'VIEW' AS OBJECT_TYPE
        FROM information_schema.views
        UNION ALL
        -- Base tables only: views have a NULL engine
        SELECT table_schema AS SCHEMA_NAME,
               table_name AS OBJECT_NAME,
               '',
               'TABLE' AS OBJECT_TYPE
        FROM information_schema.tables
        WHERE engine IS NOT NULL
       ) OBJECTS
  WHERE OBJECTS.SCHEMA_NAME = 'migration'
  ORDER BY 3, 4;

+-------------+-----------------------+---------+-------------+
| SCHEMA_NAME | OBJECT_NAME           | DEFINER | OBJECT_TYPE |
+-------------+-----------------------+---------+-------------+
| migration   | persons               |         | TABLE       |
| migration   | persons_audit         |         | TABLE       |
| migration   | func_cube             | foo@%   | ROUTINE     |
| migration   | before_persons_update | foo@%   | TRIGGER     |
| migration   | v_persons             | foo@%   | VIEW        |
+-------------+-----------------------+---------+-------------+
5 rows in set (0.01 sec)

As you can see, there are no more excuses: it is time to migrate to MySQL 8. Small details like this one make the move easier.

A migration of this type is almost always problematic. It requires several iterations in a test environment until everything works well, and things can still fail. Now, dear reader, knowing that MySQL 8 solves this problem (as of version 8.0.22), I ask you: what are you waiting for?

Of course, these kinds of migrations can be complex. But Percona is at your service, so I am sharing “Upgrading to MySQL 8: Tools That Can Help” by my colleague Arunjith, which can guide you toward a successful migration.

And remember, you always have the chance to contact us and ask for assistance with any migration. You can also learn how Percona experts can help you migrate to Percona Server for MySQL seamlessly:

Upgrading to MySQL 8.0 with Percona

I hope you enjoyed the blog, and see you in the next one!

Percona Database Performance Blog

Jake Bennett dives into state machines and how they can be leveraged to manage workflows and state transitions in applications.

The post Watch Jake Bennett’s "State Machines" talk from Laracon appeared first on Laravel News.

Join the Laravel Newsletter to get Laravel articles like this directly in your inbox.

Laravel News

Freek Van Der Herten’s "Enjoying Laravel Data" talk from Laracon US is now live on YouTube!

The post Watch Freek Van Der Herten’s "Enjoying Laravel Data" talk from Laracon appeared first on Laravel News.

Laravel News

Matt Stauffer’s "Enterprise Laravel" talk from Laracon US is now live on YouTube!

The post Watch Matt Stauffer’s "Enterprise Laravel" talk from Laracon appeared first on Laravel News.

Laravel News

Novelty accessory maker 8BitDo today announced a new mechanical keyboard inspired by Nintendo’s NES and Famicom consoles from the 1980s. The $100 Retro Mechanical Keyboard works in wired / wireless modes, supports custom key mapping and includes two giant red buttons begging to be mashed.

The 8BitDo Retro Mechanical Keyboard ships in two colorways: the “N Edition” is inspired by the Nintendo Entertainment System (NES), and the “Fami Edition” draws influence from the Nintendo Famicom. Although the accessory-maker likely toed the line enough to avoid unwelcome attention from Nintendo’s lawyers, the color schemes match the classic consoles nearly perfectly: The NES-inspired variant ships in a familiar white / dark gray / black color scheme, while the Famicom-influenced one uses white / crimson.

The Fami Edition includes Japanese characters below the English markings for each standard alphanumeric key. The keyboard’s built-in dials and power indicator also have a charmingly old-school appearance. And if you want to customize the keyboard’s hardware, you can replace each switch on its hot-swappable printed circuit board (PCB). 8BitDo tells Engadget it uses Kailh Box White Switches V2 for the keyboard and Gateron Green Switches for the Super Buttons.

As for what those bundled Super Buttons do, that’s up to you: The entire layout, including the two ginormous buttons, is customizable using 8BitDo’s Ultimate Software. The company tells Engadget they connect directly to the keyboard via a 3.5mm jack. And if the two in the box aren’t enough, you can buy extras for $20 per set.

The 87-key accessory works with Bluetooth, 2.4 GHz wireless and USB wired modes. Although the keyboard is only officially listed as compatible with Windows and Android, 8BitDo confirmed to Engadget that it will also work with macOS. It has a 2,000mAh battery for an estimated 200 hours of use from four hours of charging.

Pre-orders for the 8BitDo Retro Mechanical Keyboard are available starting today on Amazon and direct at 8BitDo. The accessory costs $100 and is estimated to begin shipping on August 10th.

This article originally appeared on Engadget at https://www.engadget.com/8bitdos-nintendo-inspired-mechanical-keyboard-has-super-buttons-just-begging-to-be-mashed-150024778.html?src=rss

Engadget

Sneaking in before the deadline, we here at the Louder with Crowder Dot Com website are declaring this man to be our Content King for the month of July. He didn’t start the day wanting to be a hero. Our man just wanted to buy some bloody strawberries. That’s where he learned what a cashless store was. And the cashless store learned they can go f*ck themselves.

A better IRL version of Jean Valjean I cannot imagine.

"If you want to call the police, call the police. I have paid my legal tender. And I’m going to leave with my strawberries. I’m going to eat my strawberries. I paid my legal tender in this dystopian place."

Shout out to this guy applauding, who would have given our hero a standing ovation if… you know.

There is context missing, because obviously there was a dispute before this video starts. No one in the store had the foresight to whip out a smartphone early on for the content.

What we missed was the man being informed that his $4.22 purchase (I converted to real American money since none of you care about the pound sterling) needed to be made via credit card or cash app. Our hero said that was bollocks and that he wasn’t going to put a five-dollar purchase on his credit card. Also, they can take their ApplePay and stick it up their nose. The clerk said "Then you don’t get strawberries" and our hero started his patriotic dissent.

The idea of a "cashless" society is a controversial one. The Associated Press has issued a "fact" "check" claiming a video showing an elitist telling you the coming digital cashless society will be regulated and undesirable purchases will be controlled is misleading and out of context. They also claimed Democrats didn’t want to ban gas stoves. The fact that these words are even being said out loud is the problem.

Is the Left saying verbatim "We are going to use digital currency to control what you are allowed to buy?" No. Would any of you put it past them? Also no.

Republican presidential candidate Ron DeSantis promises that on day one he will ban central bank digital currencies. "I think it’s a huge threat to freedom. I think it’s a huge threat to privacy … it will allow [elitists] to block what they consider to be undesirable purchases, like too much fuel, … they just won’t let the transaction go through. [Or on] ammunition."

This old-based dude showed us the way. He showed us the way with strawberries.

The runners-up for July’s Content Kings are also from Europe: The bachelor party that stopped Just Stop Oil and the leggy German broad who yeeted protestors off the road by the hair. Step your game up, ‘Merica!

><><><><><><

Brodigan is Grand Poobah of this here website and when he isn’t writing words about things enjoys day drinking, pro-wrestling, and country music. You can find him on the Twitter too.

Facebook doesn’t want you reading this post or any others lately. Their algorithm hides our stories and shenanigans as best it can. The best way to stick it to Zuckerface? Bookmark LouderWithCrowder.com and check us out throughout the day! Also, follow us on Instagram and Twitter.

Louder With Crowder

Dynamic SQL is a desirable feature that allows developers to construct and execute SQL statements at runtime. While MySQL lacks a dedicated dynamic SQL statement, this article presents a workaround using prepared statements. We will explore how prepared statements can be used for dynamic query execution, parameterized queries, and dynamic table and column selection.

Dynamic SQL refers to the ability to construct SQL statements at runtime rather than writing them statically in the code. This provides flexibility in manipulating query components such as table names, column names, conditions, and sorting. The PREPARE and EXECUTE statements are the key components for executing dynamic SQL in MySQL.

Example usage: Let’s consider a simple example where we want to construct a dynamic SELECT statement based on a user-defined table name and value:

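SET @table_name := 't1';
SET @value := '123';
-- Identifiers cannot be bound with ?, so the table name is concatenated;
-- wrapping it in backticks (doubling any embedded backtick) keeps it a single identifier.
-- `column` stands for a real column name; COLUMN is a reserved word and must be quoted.
SET @sql_query := CONCAT('SELECT * FROM `', REPLACE(@table_name, '`', '``'), '` WHERE `column` = ?');
PREPARE dynamic_statement FROM @sql_query;
EXECUTE dynamic_statement USING @value;  -- the value is bound to the ? placeholder
DEALLOCATE PREPARE dynamic_statement;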

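In this example, we use the CONCAT function to build the SQL statement at runtime. The table name is stored in a variable and concatenated into the SQL string. The value is not concatenated: it is passed through the ? placeholder with EXECUTE ... USING, because placeholders can stand in only for data values, not for identifiers such as table or column names.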

Let’s look at another scenario:

Killing a specific user connection:

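DELIMITER //
CREATE PROCEDURE kill_all_for_user(IN user_connection_id BIGINT UNSIGNED)
BEGIN
  -- Build "KILL <id>" at runtime; this ends the one connection whose ID is passed in
  SET @sql_statement := CONCAT('KILL ', user_connection_id);
  PREPARE dynamic_statement FROM @sql_statement;
  EXECUTE dynamic_statement;
  DEALLOCATE PREPARE dynamic_statement;
END //
DELIMITER ;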

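In this case, the prepared statement dynamically builds a KILL statement for the connection ID passed to the procedure, which terminates that connection along with any query it is running. To stop all queries for a specific user, call it once for each of that user's connection IDs, as listed in information_schema.processlist.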
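The procedure takes a single connection ID, so each call ends one connection. To end every connection that belongs to one account, a common approach is to read that user's IDs from information_schema.processlist and emit one KILL per ID. Below is a minimal shell sketch of that idea. `kill_statements` is a hypothetical helper, and the account name 'foo' in the usage comment is only an illustration.

```shell
# Hypothetical helper: read processlist IDs (one per line) on stdin and
# print one KILL statement per ID, ignoring blank or non-numeric lines.
kill_statements() {
  # The "|| [ -n ... ]" test also handles a final line without a trailing newline.
  while IFS= read -r id || [ -n "$id" ]; do
    case "$id" in
      ''|*[!0-9]*) continue ;;  # skip anything that is not a plain integer ID
    esac
    printf 'KILL %s;\n' "$id"
  done
}

# Possible usage against a live server, run as an administrative account
# rather than as 'foo' itself (-N suppresses the column header):
# mysql -N -e "SELECT id FROM information_schema.processlist WHERE user = 'foo'" \
#   | kill_statements | mysql
```

Because the statements are generated first, you can drop the final `| mysql` and review the list before anything is actually killed.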

Prepared statements let you build dynamic queries, but dynamic queries can make debugging more challenging, because the statement that actually runs exists only at runtime. Consider adding extra testing and error handling to mitigate this; it can help you catch problems with dynamic queries early in the development process.

Planet MySQL

https://laravelnews.s3.amazonaws.com/images/phpsandbox.jpg

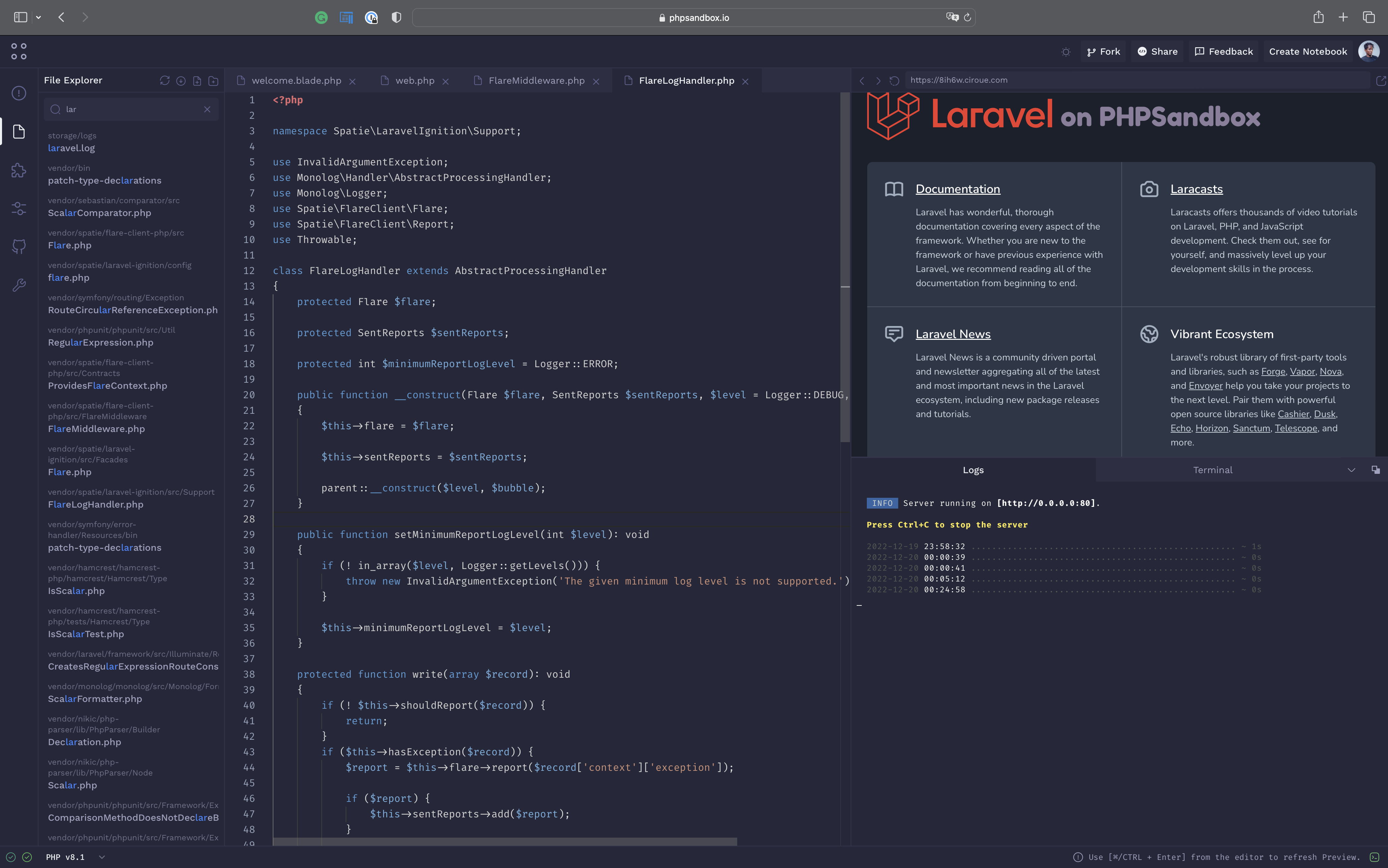

PHPSandbox is a web app that allows you to quickly prototype or share PHP projects without setting up a local environment.

It’s a pretty neat service because it allows you to test all kinds of things, such as the new “slim skeleton” in Laravel 11, or our Livewire Volt demo app, and even the new Laravel Prompts feature that Jess Archer demoed at Laracon.

Here are some more of the features PHPSandbox includes:

PHPSandbox automatically provisions a permanent preview URL for your project so you can see your changes instantly.

Multiple PHP Versions, all PHP extensions you need, and a full-featured Linux environment.

Import an existing public composer project from GitHub or Export your projects on PHPSandbox to GitHub.

The Composer integration lets you use Composer in your projects, and PHPSandbox makes sure it keeps working.

Configure your environment to your liking. Do you want to change your PHP version or your public directory? No problem.

The JavaScript and CSS ecosystems have had code playgrounds, but this is one of the nicest available for PHP. The base plan is free, and there is an upgraded professional plan for $6 a month that adds private repos, email captures, and more.

But the base plan works great for quickly testing out packages and making demos of your next tutorial.

Laravel News

Since the LEGO system was introduced in the mid-1950s, the sets of interlocking blocks, figures, and other pieces have been popular with people of all ages (except, maybe, the people who accidentally step on the blocks while barefoot).

In addition to providing the opportunity for creative play—allowing children to design and build their own structures—LEGO has released thousands of sets with the pieces and instructions for a specific building, design, vehicle, and countless other objects. Now, building instruction booklets for more than 6,800 different sets are available to download for free at the Internet Archive. Here’s what to know.

Created on May 29, 2023, the Internet Archive’s LEGO Building Instructions collection contains “a dump of all available building instruction booklet PDFs from the LEGO website” as of March 2023, according to the description on the site.

You can search for sets by their number or name, or simply browse the collection. At this point, it’s not possible to sort the instructions based on the date they were initially released, but you can sort them by the number of views that particular week, or since the collection launched.

Currently, the most popular instructions are the ones for the Colosseum, the Galaxy Explorer, and the cover photo of Meet the Beatles.

Of course, the Internet Archive collection isn’t the only site with information about LEGO, including build instructions. A few others include:

Lifehacker