https://www.percona.com/blog/wp-content/uploads/2023/01/B-tree-Index-Corruption.jpg

This blog is in continuation of my previous blog on the basic understanding of corruption with the subject line The Ultimate Guide to Database Corruption: Part 1 – An Overview. If you have not already gone through it, I encourage you to read it to understand data corruption.

This blog is in continuation of my previous blog on the basic understanding of corruption with the subject line The Ultimate Guide to Database Corruption: Part 1 – An Overview. If you have not already gone through it, I encourage you to read it to understand data corruption.

Introduction

This blog focuses on B-tree indexes and various corruption-related issues that occur with them. Understanding how Postgres implements the B-tree index is needed before one can begin understanding index corruption. Once we have a proper understanding of the index structure, and how the b-tree indexes are accessed, it would be easy to understand how the corruption affects the data retrieval operation, and various methods to resolve them.

Some case studies will also be covered in this blog.

B-tree index structure

In PostgreSQL, a B-tree index structure is implemented using Lehman and Yao’s high-concurrency B-tree algorithm. Logically, it comprises four types of pages organized in a hierarchical structure.

- The meta page

- The root page

- Internal pages

- Leaf pages

Meta page:

This is the first page of an index that basically contains metadata information, such as a type of index, and is also called the page-zero. The location of the root page can be obtained from this page. If this page is corrupted or inaccessible, it is impossible to access the index.

Root pages and internal pages:

The root page is the very first page that contains links to the pages called internal pages and leaf pages. In terms of storage, internal pages are no different than the root page; they also store pointers to other internal pages. The only difference is that there is only one root page in every index, while there may be a number of internal pages.

These pages do not contain any information to access table records.

Leaf pages:

These pages lie at the last level and cannot be spawned further. They contain actual information to access table data. The data stored in these pages are values and CTIDs from the table where the particular value lies.

A typical structure of a B-tree index is as below.

As narrated in the diagram above, the root page and internal pages have tuples that are linked with the internal pages or leaf pages. Every internal and leaf page should have equal or greater values than the value linked from the previous page and the next higher value than the associated value in that page. The first tuple in every root and internal page is blank; it points to the page that contains all the values lower than its immediate right-hand neighbor.

For example, in the image above,

- The root page has three tuples (blank, 40, 80), and the “internal page 1” is associated with the tuple whose value is blank, and the next higher value in the root page is 40. So here, the “internal page 1” may contain values less than 40.

- While the “internal page 2” contains values greater than or equal to 40 and less than 80.

Case studies

In the case of index corruption, it gives different results as per the position where the data is corrupted. Here, corruption may exist in any page (root, internal, and leaf). But, while studying it carefully, one may understand that corruption may not always reveal its existence. On occasions, it misguides users as well. If the page header or format is corrupt, it throws an error while executing the query itself. But, when the actual data inside pages are corrupted instead of format, it cannot detect it as corrupted, but it returns some results.

In this blog, I have deliberately chosen an index without any internal pages, so that readers may understand that internal pages may not necessarily be present in every B-tree index. The index used here for example is a primary key index.

This section deals with cases where queries return incorrect results due to corruption. Here, we delve into data corruption with leaf pages and internal pages.

Case 1 – Data gets corrupted and becomes unsearchable

This is a classical case of corruption where Postgres tries to find a record, but it cannot be discovered because the value does not belong to its current parent node. In this case, in one of the leaf pages, two adjacent bits get exchanged with each other. Details are as below.

Here, we randomly pick a number from a table and prepare a case on it. Below is the record and details related to it. The record (id = 612) described in the snapshot below will be our target to describe corruption.

We have an index on the id column of corruption_test, and as described below, positions of underlined bits (10th and 11th) get exchanged in an index. Due to this, the actual value becomes 1124 from 612; however, the value in the associated table is still the same.

Table name: – corruption_test

Index name: – test_tbl_pkey

Corruption in page:- Leaf page 2

Associated CTID in table:- (101,6)

Actual value in an index tuple:- 00000010 01100100 (612)

Value in an index tuple after corruption:- 00000100 01100100 (1124)

The below image delineates what actually transpired in the test_tbl_pkey index.

Now, we will see how queries respond when we request to retrieve the data for values associated with this case.

Querying a table using the pristine number:

As described above, the actual (non-corrupted) value is 612. Let’s see what result we receive by querying using “id = 612” predicate.

Now, what if we search data using previously extracted CTID in the table?

This is sufficient to take you by surprise as the record actually exists in the table, but still, it fails to retrieve it when we directly query it using a particular value.

This happens because 612 was replaced by 1124 after corruption in the index. Here, the search performs the index scan to obtain the record easily, and the index is unable to locate the desired record; hence, it is unable to show any data. The below describes this situation.

Querying a table using a corrupted number:

As we have understood that 612 does not exist in the index, it may not be gleaned from the index. But, we know that 1124 exists in the index. Curious to know what happens when querying that 1124.

Let us dive a little deeper to know why we did not get any record upon querying corrupted data.

Here, as per a valid structure of an index, 1124 ought to be there in Leaf page 3, but it may not be found there as it does not exist in either the page or the table. While Leaf page 2 contains details pertaining to 1124, PostgreSQL will not search 1124 in Leaf page 2 as it does not logically satisfy to explore the value 1124 in that page; hence, the value will not be found.

The image below narrates the situation.

Case 2 – Incorrect data pointers (CTID) stored against the value

In this case, PostgreSQL tries to find a record from the index, and it can find the record, but shows the false one. This happens because false CTID values are stored in an index. Due to this, it shows a completely different record.

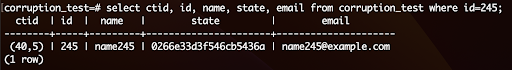

Here, one bit of CTID gets changed, which gives a fresh impetus to corruption. We randomly pick a record (id = 245) from a table as a subject to describe the test case. The snapshot below is the record details.

Table name: – corruption_test

Index name: – test_tbl_pkey

Corruption in page:- Leaf page 1

Associated CTID in table:- (40,5)

Value at the CTID:- 245

Actual CTID in an index tuple :- (40,5) – (00101000,00000101)

CTID in an index tuple after corruption:- (56,5) – (00111000,00000101)

As described above, due to a change of value in the 5th bit, it stores a different CTID. The case is as described in the below.

Now, we will observe how different queries behave in this kind of situation.

Querying a table using an in-doubt number:

As described above, the value in doubt here is 245. Let’s see what result we receive by querying using the “id = 245” predicate.

This is shocking and perplexing as the data returned is completely different than we expected. No need to explain what its implication could be on day-to-day business.

Here, in an index, we can observe that the CTID stored against 245 points to a different value. So, the query returns different values here. The below image describes what actually happened internally.

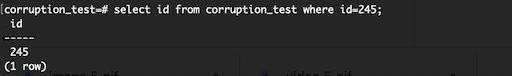

Querying a table by selecting id only:

If we select only the id column in a query, it shows the correct result.

The reason to get the correct result is that it performs an “index-only scan” because the index exists on the id column. It is therefore not required to visit table data to show records as the index contains all the required records.

Case 3 – Incorrect index format

Every database object has a pre-defined format. PostgreSQL (or any other database) is programmed to read and write in a specific format that is just a sequence of characters (or bytes). If one or more characters get changed, the object becomes unreadable. In such a corruption case, the query instantly returns an error, and the error texts are not the same every time.

Let’s look into various cases of errors.

Corrupted meta page:

As described above, the meta page is a core page of any index; it stores the metadata of an index. So, if any format-related corruption is there in the meta page, the index becomes unrecognizable. Due to this, the query returns no result.

The below snapshot shows the result when querying a particular record.

![]()

The below query shows the result when the whole table is queried.

![]()

Here is a catch! It is understandable not to receive data if a query performs an index scan. But, it is perturbing when a query cannot deliver because of an index corruption when it is not expected to use an index. But, this happens due to a planner.

Corrupted non-meta page:

If format-related corruption exists in a non-meta page (root, internal, or leaf), it returns a different error. However, it does not affect queries that perform sequential scans.

![]()

Causes

After reading the above sections, one may understand corruption is nothing but storing inappropriate bytes in database files, which leads to anomalies in data. But, the question here is how it occurs. Although many events leave corruption, it is difficult to create any reproducible test case for corruption because there is no surety of what actually caused it. The only option left to us is to speculate around them. The majority of issues are caused by hardware failure or hardware issues, and the following are the most probable reasons.

- Faulty RAID disks or RAID controllers

- Defective disk or RAM

- Disks without power failure protection

- Overloaded CPU

- PostgreSQL/OS bug

Additionally, there are some problems caused by users. Some of them are as below.

- Killing a connection with signal 9

- Abruptly stopping PostgreSQL

- Copying file for backup without pg_start_backup

- Executing pg_resetwal

Corruption is not a frequent affair; however, it becomes routine in case of faulty hardware or a bug.

Detection

As already mentioned in the case studies section, it is difficult (albeit there are exceptions) to detect corruption when data inside an index is corrupted. This is because data cannot be scanned by a database product; however, this is not true with the format.

When a B-tree index’s format is corrupted, we can use functions of amcheck and pageinspect extensions to verify its format. Here is an example of verifying an index page using the bt_page_items function of the amcheck extension.

We can iterate through all the pages of an index and list out what pages are corrupted. However, this hardly makes sense as we can not change data inside an index.

Repairing

Removing corruption from a B-tree index does not require a thorough analysis; it is just a matter of re-creating an index. As we know, an index is a subset of table data to enable quicker data retrieval. As long as there is no issue in the table data, we can rebuild the index. It can be performed by the following options.

- Drop and recreate an index

- Use reindex command

- Rebuild using pg_repack utility

Out of all of these, pg_repack is the most viable option as it starts creating an index in parallel so that running operations do not get affected. For more information, kindly visit the pg_repack blog.

Anticipation

After reviewing various scenarios related to corruption, we can surely say that it is really scary as it may spook us by showing unwanted and unexpected results. Data is a valuable asset, hence, it is needless to describe its business impact; we can surely reckon the same. Well, how about nipping it in the bud?

Yes, it is possible. We can get corruption recorded in the database using a checksum feature. While performing initdb, we can use the -k option to enable checksum and later need to keep the data_checksums parameter enabled. This will record every corruption pg_stat_database view. Here, if we already know where the corruption exists, it is possible to take necessary action before it is propagated to users.

Summary

A robust structure of B-tree indexes is designed for faster data access. However, when certain bits in bit sequences change, it becomes unreadable or starts returning false data, which has an impact on day-to-day business. Unequivocally, this is a horrible situation. However, it is possible to detect certain kinds of corruption, and it is recommended to take preemptive measures to tackle such a situation.

Please feel free to post your queries or suggestions in the comments section.

Percona Database Performance Blog