https://media.notthebee.com/articles/64a9c7048b28764a9c7048b288.jpg

"…a kind of architecture that really hates people, that is designed to oppress the human spirit, and make people feel without value…"

Not the Bee


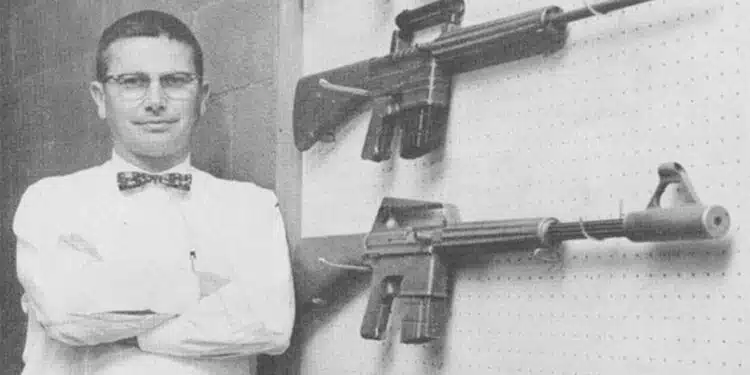

https://www.pewpewtactical.com/wp-content/uploads/2023/06/Eugene-Stoner-AR-10.png

Everybody is familiar with the iconic AR-15, but just where did it come from?

To learn the history of the AR-15, you have to first look at the genius behind it…Eugene Stoner.

So, follow along as we talk about Stoner, his life, and what led him to create one of the most notable rifles in history.


Born in 1922, Stoner graduated from high school right in time for the beginning of World War II. Immediately after graduation, he landed a job at Vega Aircraft Company, installing ordnance. It was here that he would first learn about manufacturing arms.

But then Pearl Harbor happened, leading Stoner to join the Marines soon after.

His background in ordnance resulted in him being shipped to the Pacific Theater, where he was involved in aviation ordnance.

After the war, Stoner hopped between a few different engineering jobs until he landed a position with a small division of the Fairchild Engine and Airplane Corporation known as Armalite.

Stoner’s first major accomplishment at Armalite was developing a new survival weapon for U.S. Air Force pilots.

This weapon was designed to easily stow away under an airplane’s seat, and in the event of a crash, a pilot would have a rifle at the ready to harvest small game and serve as an acceptable form of self-defense as well.

The result was known as the Armalite Rifle 5 – the AR-5. Though the modern semi-auto version is known as the AR-7, this weapon can still be found in gun cabinets across America.


Eugene Stoner had already left his mark but was far from fading into the shadows. He was just getting started.

Stoner continued his work at Armalite, but it wasn’t long until another opportunity appeared for him to change the course of history…the Vietnam War.

In 1955, the U.S. Army put out a notice that it was looking for a new battle rifle. A year later, the Army further specified that it wanted the new weapon to fire the 7.62 NATO.

Not long afterward, Stoner emerged from tinkering in his garage with a prototype for a new rifle called the AR-10.

The AR-10 was the first rifle of its kind, as never before had a rifle utilized the materials Stoner had incorporated.

Guns had always been made of wood and steel, but Stoner drew from his extensive history in the aircraft industry, using lightweight aluminum alloys and fiberglass instead.

This made his AR-10 a lighter weapon that could better resist weather.

Unfortunately, Stoner was late to the race, and the M14 was chosen as the Army’s battle rifle of choice instead.

The designs for the AR-10 were sold to the Dutch, and Stoner returned to his day job and the regular rut of daily life.

But then the Army called again…

As it turned out, the M14 was too heavy with too much recoil and difficult to control while under full auto.

In addition, the 7.62 NATO was overkill within the jungles of Vietnam. Often the enemy couldn’t be seen beyond 50 yards, meaning that a lighter weapon could still accomplish the job and let soldiers carry more ammunition while on patrol.

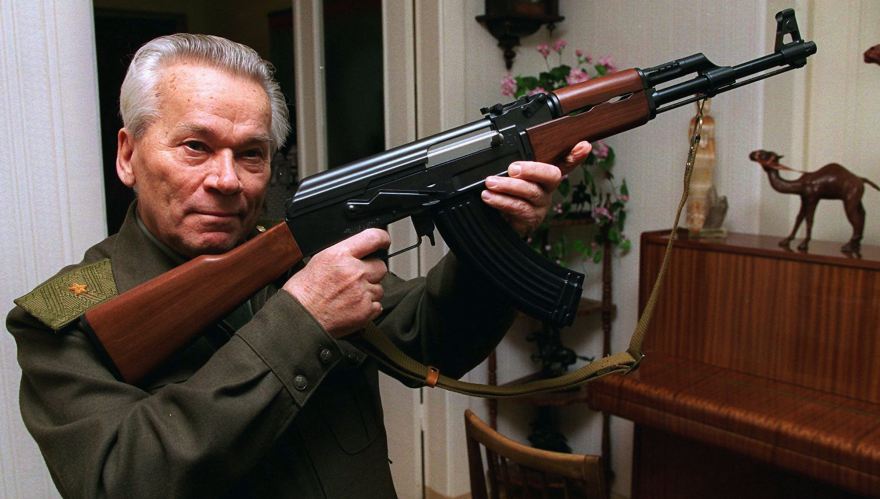

Adding further urgency to the need was the Soviet development of the AK-47.

Amid the Cold War, the idea that the communists might have a better battle rifle than American soldiers was concerning.

So, the Army needed a new battle rifle.

Returning to his AR-10 plans, Stoner set to scaling things down. The AR-10 was modified to use the .223 Remington, with the new rifle designated the Armalite Rifle–15 or AR-15.

However, Armalite didn’t have the resources to produce weaponry on a mass scale, so they sold the designs to Colt.

Colt presented the design to the Army, but Army officials dismissed the design. It seemed they preferred the traditional look and feel of wood and steel over the AR-15’s aluminum and plastic.

But the story doesn’t end there…

At an Independence Day cookout in 1960, a Colt contract salesman showed Air Force General Curtis LeMay an AR-15. Immediately, LeMay set up a series of watermelons to test the rifle.

LeMay was so impressed with the new gun that the very next year, after his promotion to Chief of Staff, he requested 80,000 AR-15s to replace the Air Force’s antiquated M2 carbines.

His request was denied, and the Army kept supplying American soldiers overseas with the M14.

In 1963, the Army and Marines finally ordered 85,000 AR-15s…redesignated as the M16.

Immediately, the Army began to fiddle with Stoner’s design. They changed the powder to a formulation that proved more corrosive and generated much higher pressures.

Also, they added the forward assist (which Stoner hated). Inexplicably, they began to advertise the weapon as “self-cleaning.”

They then shipped thousands of rifles, without manuals or cleaning gear, to men in combat overseas: men who had trained on an entirely different weapon system.

As expected, American soldiers began to experience jammed M16s on the battlefield.

By this point, Stoner had left Armalite, served a brief stint as a consultant for Colt, and finally landed a position at Cadillac Gauge (now Textron).

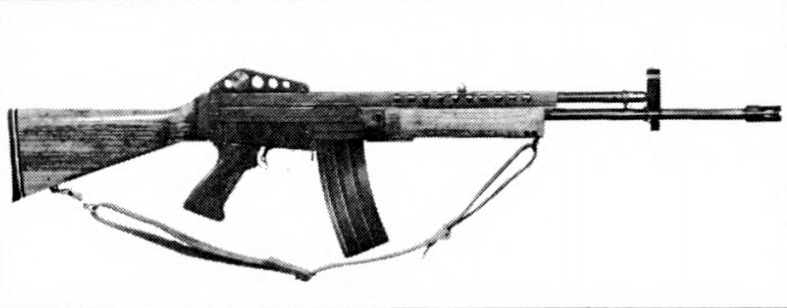

It was there, between 1962 and 1963, that he began designing one of the most versatile firearms of its time: the Stoner 63.

The Stoner 63 was a modular system chambered in 5.56 NATO. Stoner crafted this weapon to be something of a Mr. Potato Head. The lower receiver could be transformed into just about anything.

A carbine, rifle, belt-fed SAW, vehicle-mounted weapon, and top-fed light machine gun were all variations of the Stoner 63, which could easily be crafted from the common receiver.

Interchangeable parts were utilized across the platform, and the barrels didn’t need tools to be swapped out. This was the Swiss Army knife of guns. It was truly a game-changer.

The catch was that it didn’t perform as well on extended missions. There were so many moving parts, with such fine tolerances, that after weeks in the muddy jungle with a Stoner 63, the risk of losing a component or ending up with a dirty, jammed gun was dangerously high.

While the system worked wonderfully on quick missions of a few hours, it was deemed too much of a risk for the average infantryman.

Despite this, the Stoner 63 still saw widespread use throughout the Special Forces before finally being retired in 1983.

In 1972, Stoner finally left Cadillac Gauge to start his own company, co-founding ARES with a friend.

Aside from making improvements on the Stoner 63 — with the new model called the Stoner 86 — he also began working on yet another rifle design that sadly never took off, known as the Future Assault Rifle Concept or FARC.

Stoner would continue designing weapons with ARES until he received an offer from Knight’s Armament Company.

Knight’s Armament Company would be the final company where Stoner would produce his legendary work.

Almost immediately, Stoner developed the SR-25 rifle, a more accurate version of the AR-10.

The Navy SEALs would finally adopt the weapon in 2000 as their Mark 11 Mod 0 Sniper Weapon, using it until it was phased out 17 years later, in 2017.

Another sniper rifle, the KAC SR-50, was also developed but fell by the wayside due to political pressure.

As police departments nationwide began trading their .38 Special revolvers for the new polymer Glock, Stoner jumped into the fray.

He created a polymer-framed, single-stack, striker-fired design that showed great promise.

But the weapon was so unwieldy and inaccurate (engineers had bumped Stoner’s initial 6-pound trigger pull up to 12 pounds) that it was a fiasco. Colt would later pull it from shelves in 1993 over safety issues.

It was yet another frustrating end to what was originally a great design.

Eugene Stoner passed away from brain cancer in 1997 in the garage of his Palm City, Florida home.

By the time of his death, nearly 100 patents had been filed in his name. Not to mention, he’d revolutionized both the world of firearms and Americans’ ability to defend themselves.

What are your thoughts on Eugene Stoner and his designs? Let us know in the comments below. Want to learn more about other firearms designers? Check out our list of the 5 Most Influential Gun Inventors. Or, for your very own AR-15, check out our list of the top recommended AR-15 models.

The post Eugene Stoner: The Man Behind the AR-15 appeared first on Pew Pew Tactical.

Pew Pew Tactical

https://static1.makeuseofimages.com/wordpress/wp-content/uploads/2023/06/man-works-on-laptop-next-to-networking-equipment.jpg

Linux is commonly preferred among network engineers—so if you’ve thought about installing it for your work, you’re not alone.


If you’re a network engineer, it’s easy to wonder which distributions will have the best features for your work. Here are the six best Linux distributions for network engineering:

Of all the Linux distributions, one of the most highly regarded among network engineers is Fedora—and there’s a simple reason why.

Fedora is an open-source, community-driven distribution that serves as the upstream for Red Hat Enterprise Linux (RHEL). RHEL itself is commonly chosen as the operating system for enterprise-level systems.

As a result, network engineers who use Fedora enjoy a greater level of familiarity with the RHEL systems they may encounter throughout their careers.

Fedora also offers users an incredible arsenal of open-source tools, built-in support for containerized applications, and consistent access to cutting-edge features and software.

Download: Fedora (free)

As one of the most popular enterprise distributions, RHEL is a great option because it is robust and well supported. Each version of RHEL has a 10-year lifecycle, meaning that you’ll be able to use your chosen version of RHEL (with little to no compatibility issues) for years.

By using RHEL, you’ll also become familiar with many of the systems you’re likely to encounter on the job.

Many of the qualities of RHEL that make it attractive as an enterprise solution are just as appealing for independent users.

RHEL comes pre-equipped with the SELinux security module, so you will find it easy to get started with managing access controls and system policies. You’ll also have access to tools like Cacti and Snort through the RPM and YUM package managers.

Download: RHEL (free for developers; $179 annually)

Much like Fedora, CentOS Stream is a distribution that stays in line with the development of RHEL. It serves as the upstream edition of RHEL, meaning that the content in the latest edition of CentOS Stream is likely to appear in RHEL’s next release.

While CentOS Stream may not offer the same stability as RHEL, its inclusion of newer software makes it worth considering.

CentOS Stream also has a distinct advantage over downstream editions of RHEL following Red Hat’s decision to close public access to the source code of RHEL: it will continue to stay in line with the latest experimental changes considered for the next release of RHEL.

In the future, CentOS Stream is likely to become the best option for anyone seeking an RHEL-adjacent distribution.

Download: CentOS Stream (free)

Another powerful and reliable option for network engineers is openSUSE. openSUSE is impressively stable and offers frequent new releases, making it a good option if you prefer to avoid broken packages while still taking advantage of the latest software releases.

Out of the box, you won’t have any issues configuring basic network settings through YaST (Yet another Setup Tool). Many of the packages that come preinstalled with openSUSE can provide you with incredible utility.

Wicked is a powerful network configuration framework, for example, while Samba is perfect for enabling file-sharing between Linux and Windows systems. You won’t have any trouble installing the right tool for a job with openSUSE’s Zypper package manager.

Download: openSUSE (free)

Debian is a widely renowned Linux distribution known for being incredibly stable and high-performance. Several branches of Debian are available, including Debian Stable (which is extremely secure and prioritizes stability) and Debian Unstable (which is more likely to break but provides access to the newest cutting-edge releases of software).

One of the biggest advantages of using Debian for network engineering is that it has an incredible package-rich repository with over 59,000 different software packages.

If you’re interested in trying out the newest niche and experimental tools in networking and cybersecurity, Debian’s repositories give you access to a huge selection of them.

Download: Debian (free)

As a distribution designed for penetration testing, Kali Linux comes with a massive variety of preinstalled tools that network engineers are certain to find useful. Wireshark offers tantalizing information about packets moving across a network, Nmap provides useful clues about network security, and SmokePing provides interesting visualizations of network latency.

Not all of the software packaged with Kali Linux is useful for network engineers, but luckily, new Kali installations are completely customizable. You should plan out what packages you intend to use in advance so that you can avoid installing useless packages and keep your Kali system minimally cluttered.

Download: Kali Linux (free)

While some Linux distributions are better suited to network engineers, almost any Linux distribution can be used with the right software and configurations.

You should test out software like Nmap and familiarize yourself with networking on your new Linux distro so that lack of familiarity doesn’t become an obstacle later on.

MakeUseOf

https://static1.makeuseofimages.com/wordpress/wp-content/uploads/2023/06/documents-on-wooden-surface.jpg

Data science is constantly evolving, with new papers and technologies coming out frequently. As such, data scientists may feel overwhelmed when trying to keep up with the latest innovations.


However, with the right tips, you can stay current and remain relevant in this competitive field. Thus, here are eight ways to stay on top of the latest trends in data science.

Data science blogs are a great way to brush up on the basics while learning about new ideas and technologies. Several tech conglomerates produce high-quality blog content where you can learn about their latest experiments, research, and projects. Great examples are Google, Facebook, and Netflix blogs, so waste no time checking them out.

Alternatively, you can look into online publications and individual newsletters. Depending on your experience level and advancement in the field, these blogs may address topics you’d find more relatable. For example, Version Control for Jupyter Notebook is easier for a beginner to digest than Google’s Preference learning for cache eviction.

You can find newsletters by doing a simple search, but we’d recommend Data Elixir, Data Science Weekly, and KDnuggets News, as these are some of the best.

Podcasts are easily accessible and a great option when you’re pressed for time and want to get knowledge on the go. Listening to podcasts exposes you to new data science concepts while letting you carry out other activities simultaneously. Also, through interviews with experts in the field, some podcasts offer a window into the industry and let you learn from professionals’ experiences.

On the other hand, YouTube is a better alternative for audio-visual learners and has several videos at your disposal. Channels like Data School and StatQuest with Josh Starmer cover a wide range of topics for both aspiring and experienced data scientists. They also touch on new trends and methods, so following these channels is a good idea to keep current.

It’s easy to get lost in a sea of podcasts and videos, so carefully select detailed videos and the best podcasts for data science. This way, you can acquire accurate knowledge from the best creators and channels.

Online courses allow you to learn from data science academics and experts, who condense their years of experience into digestible content. Recent courses cover several data science necessities, from hard-core machine learning to starting a career in data science without a degree. They may not be cheap, but the value they offer is well worth the cost.

Additionally, books play an important role as well. Reading current data science books can help you learn new techniques, understand real-world data science applications, and develop critical thinking and problem-solving skills. These books explain in-depth data science concepts you may not find elsewhere.

Such books include The Data Science Handbook, Data Science on the Google Cloud Platform, and Think Bayes. You should also check out a few data science courses on sites like Coursera and Udemy.

Attending conferences ushers you into an environment of like-minded individuals you can connect with. Although talking to strangers may feel uncomfortable, you will learn so much from the people at these events. By staying home, you will likely miss out on networking, job opportunities, and modern techniques like deep learning methods.

Furthermore, presentations allow you to observe other projects and familiarize yourself with the latest trends. Seeing what big tech companies are up to is encouraging and educational, and you can always take away something to apply in your own work.

Data science events can be physical or virtual. Some good data science events to consider are the Open Data Science Conference (ODSC), Data Science Salon, and the Big Data and Analytics Summit.

Data science hackathons unite data scientists to develop models that solve real-world problems within a specified time frame. They can be hosted by various platforms, such as Kaggle, DataHack, or UN Big Data Hackathon.

Participating in hackathons enhances your mastery and accuracy and exposes you to the latest data science tools and popular techniques for building models. Regardless of your results, competing with other data scientists in hackathons offers valuable insights into the latest advancements in data science.

Consider participating in the NERSC Open Hackathon, BNL Open Hackathon, and other virtual hackathons. Also, don’t forget to register for physical hackathons that may be happening near your location.

Contributing to open-source data science projects lets you work alongside other data scientists on projects in active development. From them, you’ll learn new tools and frameworks used by the data science community, and you can study the project code to apply ideas in your own work.

Furthermore, you can collaborate with other data scientists with different perspectives in an environment where exchanging ideas, feedback, and insights is encouraged. You can discover the latest techniques data science professionals use, industry standards, best practices, and how they keep up with data science trends.

First, search for repositories tagged with the data science topic on GitHub or Kaggle. Once you discover a project, consider how to contribute, regardless of your skill level, and start collaborating with other data scientists.

Following data science thought leaders and influencers on social media keeps you informed about the latest data science trends. This way, you can learn their views on existing topics and get up-to-date news on the field. Additionally, you can ask them about complicated subjects and may even get a reply.

You can take it a step further and follow Google, Facebook, Apple, and other big tech companies on Twitter. This keeps you aware of upcoming tech trends, not just those in data science.

Kirk Borne, Ronald van Loon, and Ian Goodfellow are some of the biggest names in the data science community. Start following them and big tech companies on Twitter and other social media sites to stay updated.

Sharing your work lets you get feedback and suggestions from other data scientists with different experience levels and exposure. Their comments, questions, and critiques can help you stay up-to-date with the latest trends in data science.

You can discover trendy ideas, methods, tools, or resources you may not have known before by listening to their suggestions. For example, someone may unknowingly use an outdated version of Python until they post their work online and someone points it out.

Sites like Kaggle and Discord have several data science groups through which you can share your work and learn. After signing up and joining a group, start asking questions and interacting with other data scientists. Prioritize knowledge, remember to be humble, and try to build mutually beneficial friendships with other data scientists.

Continuous learning is necessary to remain valuable as a data scientist, but it can be difficult to keep up all by yourself. Consequently, you’ll need to find a suitable community to help you, and Discord is one of the best platforms to find one. Find a server with people in the same field, and continue your learning with your new team.

MakeUseOf

https://laraveldaily.com/storage/437/Copy-of-Copy-of-Copy-of-ModelpreventLazyLoading();-(4).png

If you create foreign keys in your migrations, you may run into a situation where the table is created successfully, but the foreign key fails. Then your migration is “half successful”, and if you re-run it after the fix, it will say “Table already exists”. What to do?

First, let me explain the problem in detail. Here’s an example.

```php
Schema::create('teams', function (Blueprint $table) {
    $table->id();
    $table->string('name');
    $table->foreignId('team_league_id')->constrained();
    $table->timestamps();
});
```

The code looks good, right? Now, what if the referenced table “team_leagues” doesn’t exist? Or maybe it’s called differently? Then you will see this error in the Terminal:

```
2023_06_05_143926_create_teams_table ..................................................................... 20ms FAIL

Illuminate\Database\QueryException

SQLSTATE[HY000]: General error: 1824 Failed to open the referenced table 'team_leagues'
(Connection: mysql, SQL: alter table `teams` add constraint `teams_team_league_id_foreign` foreign key (`team_league_id`) references `team_leagues` (`id`))
```

But that is only part of the problem. Say you’ve realized that the referenced table is actually called “leagues”, not “team_leagues”. One possible fix:

```php
$table->foreignId('team_league_id')->constrained('leagues');
```

But the real problem now is the state of the database:

The `teams` table has already been created, but its foreign key has not. Because the migration failed partway, there’s no record of its success in Laravel’s “migrations” system DB table.

And here’s the catch: if you fix the error in the same migration and just run `php artisan migrate`, it will say “Table already exists”.

```
2023_06_05_143926_create_teams_table ...................................................................... 3ms FAIL

Illuminate\Database\QueryException

SQLSTATE[42S01]: Base table or view already exists: 1050 Table 'teams' already exists
(Connection: mysql, SQL: create table `teams` (...)
```

So should you create a new migration? Rollback? Let me explain my favorite way of solving this.

You can make the migration safe to re-run by creating the table only if it doesn’t exist yet, using the `Schema::hasTable()` method.

But then, we need to split the foreignId() into parts because it’s actually a 2-in-1 method: it creates the column (which succeeded) and the foreign key (which failed).

So, we rewrite the migration into this:

```php
if (! Schema::hasTable('teams')) {
    Schema::create('teams', function (Blueprint $table) {
        $table->id();
        $table->string('name');
        // Only the column here; the constraint is added separately below
        $table->unsignedBigInteger('team_league_id');
        $table->timestamps();
    });
}

// This may be in the same migration file or in a separate one
Schema::table('teams', function (Blueprint $table) {
    // foreign() is chained with references()/on(), not constrained()
    $table->foreign('team_league_id')->references('id')->on('leagues');
});
```

Now, if you run php artisan migrate, it will execute the complete migration(s) successfully.
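For reference, here is a sketch of how the complete migration file might look with this approach. It assumes Laravel 9 or later (anonymous migration classes) and that the referenced table really is called `leagues`; the `down()` method is an addition for illustration, not part of the original fix. It needs a Laravel app to run, so treat it as a template rather than a standalone script.

```php
<?php

use Illuminate\Database\Migrations\Migration;
use Illuminate\Database\Schema\Blueprint;
use Illuminate\Support\Facades\Schema;

return new class extends Migration
{
    public function up(): void
    {
        // Skip creation if a previous, half-failed run already created the table
        if (! Schema::hasTable('teams')) {
            Schema::create('teams', function (Blueprint $table) {
                $table->id();
                $table->string('name');
                // Column only; the foreign key is added in the next step
                $table->unsignedBigInteger('team_league_id');
                $table->timestamps();
            });
        }

        // Add the constraint separately, pointing at the actual table name
        Schema::table('teams', function (Blueprint $table) {
            $table->foreign('team_league_id')->references('id')->on('leagues');
        });
    }

    public function down(): void
    {
        // Dropping the table also removes the foreign key defined on it
        Schema::dropIfExists('teams');
    }
};
```

Because `Schema::dropIfExists()` removes the table together with its foreign key, `down()` doesn’t need a separate `dropForeign()` call. If you move the foreign key into its own migration instead, that migration’s `down()` would call `$table->dropForeign(['team_league_id'])`.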

Of course, an alternative solution would be to manually delete the `teams` table via an SQL client and re-run the migration with the fix, but you don’t always have access to the database if it’s remote. Also, it’s not ideal to perform manual operations on the database if you use migrations. That may be OK on your local database, but the solution above works for any database, local or remote.

Laravel News Links

https://media.notthebee.com/articles/64a3282b35efc64a3282b35efd.jpg

It’s always been racist; we just put up with it for decades until our man Clarence Thomas put an end to it. We can now refer to it in the past tense (even though the wokies are gonna try to keep it alive, bless their racist little hearts).

Not the Bee