https://media.notthebee.com/articles/657b2e2993ba1657b2e2993ba2.jpg

I love it.

Not the Bee

https://cdn.arstechnica.net/wp-content/uploads/2023/12/enemycloset-760×380.jpg

The archived hour-long chat is a must-watch for any long-time Doom fan.

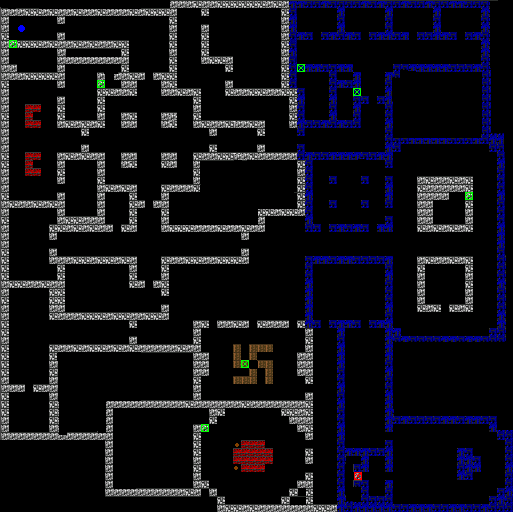

While Doom can sometimes feel like an overnight smash success, the seminal first-person shooter was far from the first game created by id co-founders John Carmack and John Romero. Now, in a rare joint interview that was livestreamed during last weekend’s 30th-anniversary celebration, the pair waxed philosophical about how Doom struck a perfect balance between technology and simplicity that they hadn’t been able to capture previously and have struggled to recapture since.

Carmack said that Doom-precursor Wolfenstein 3D, for instance, "was done under these extreme, extraordinary design constraints" because of the technology available at the time. "There just wasn’t that much we could do."

Wolfenstein 3D’s grid-based mapping led to a lot of boring rectangular rooms connected by long corridors.

One of the biggest constraints in Wolfenstein 3D was a grid-based mapping system that forced walls to be at 90-degree angles, leading to a lot of large, rectangular rooms connected by long corridors. "Making the levels for the original Wolfenstein had to be the most boring level design job ever because it was so simple," Romero said. "Even [2D platformer Commander Keen] was more rewarding to make levels for."

By the time work started on Doom, Carmack said it was obvious that "the next step in graphics was going to be to get away from block levels." The simple addition of angled walls let Doom hit "this really sweet spot," Carmack said, allowing designers to "create an unlimited number of things" while still making sure that "everybody could draw in this 2D view… Lots of people could make levels in that."

Doom’s support for angled walls and variable heights added a huge amount of design variation while still keeping things relatively simple to edit.
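To make the contrast concrete, here is a minimal sketch in Python of the two map styles described in the interview. It is not id's actual data format: `GRID`, `LINES`, and both helper functions are hypothetical names invented for this illustration. A block map can only ever produce walls at 0 or 90 degrees, while a map built from 2D line segments can hold walls at any angle and is still drawn in a flat top-down view.

```python
import math

# Block map in the spirit of Wolfenstein 3D: 1 = solid block, 0 = open floor.
# (Hypothetical layout, not real game data.)
GRID = [
    [1, 1, 1, 1],
    [1, 0, 0, 1],
    [1, 1, 1, 1],
]

# Line-based map in the spirit of Doom: each wall is a 2D segment
# ((x1, y1), (x2, y2)) that a designer draws in a top-down editor.
LINES = [
    ((0, 0), (4, 0)),
    ((4, 0), (6, 2)),
    ((6, 2), (6, 5)),
    ((6, 5), (0, 0)),
]


def grid_wall_angles(grid):
    """Return the wall orientations (degrees, modulo 180) a block map exposes."""
    angles = set()
    rows, cols = len(grid), len(grid[0])
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != 0:
                continue  # only open cells can see wall faces
            for dc in (-1, 1):  # solid block to the left or right: vertical face
                if 0 <= c + dc < cols and grid[r][c + dc] == 1:
                    angles.add(90)
            for dr in (-1, 1):  # solid block above or below: horizontal face
                if 0 <= r + dr < rows and grid[r + dr][c] == 1:
                    angles.add(0)
    return angles


def line_wall_angles(lines):
    """Return the wall orientations (degrees, modulo 180) of free-form segments."""
    angles = set()
    for (x1, y1), (x2, y2) in lines:
        angle = math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180
        angles.add(round(angle, 1))
    return angles
```

Calling `grid_wall_angles(GRID)` can only ever return a subset of `{0, 90}`, whereas `line_wall_angles(LINES)` returns one orientation per segment direction, including the diagonal ones.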

Romero expanded on the idea, saying that working on top of the Doom engine was, at the time, "the easiest way to make something that looks great. If you want to get anything that looks better than this, you’re talking 10 times the work."

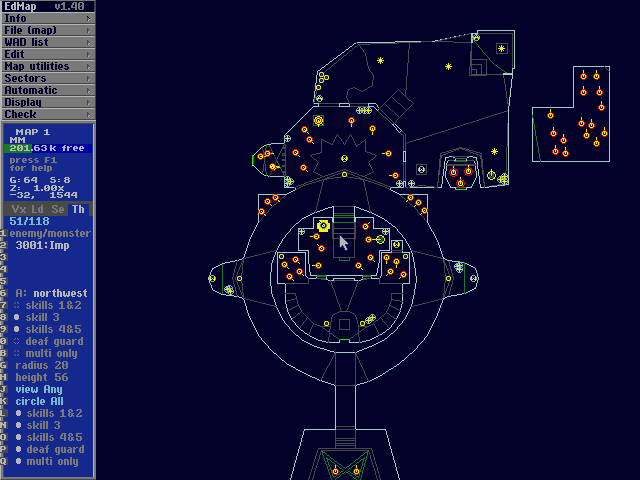

Then came Quake, with a full 3D design that Carmack admitted was "more ambitious" and "did not reach all of its goals." When it came to modding and designing new levels, Carmack lamented how, with Quake, "a lot of potentially great game designers just hit their limit as far as compositional aesthetic in terms of what something is going to look like."

"When you have the ability to do a full six degree-of-freedom modeling, you not only have to be a game designer, you have to be an architect, a modeler working through your composition," Carmack continued. "[Doom] helped you along by keeping you from wasting time doing some crazy things that you would have had to be a master of a different craft to pull off… Going to full 3D made this something that not everybody does on a lark, but something you set out time, almost set a career arc to make mods for newer games."

Quake’s full 3D maps required much more design skill to build good-looking levels.

Romero reminisced during the chat about "the Everest of Quake" and "the insane amount of technology we took on" during its development. "Even adding QuakeC on top of [a] client/server [architecture] on top of full 3D, it was so much tech. It was a whole new engine, it wasn’t Doom at all. It was all brand new."

Looking back, Carmack allowed that "there’s a couple different steps we could have taken [with Quake], and we probably did not pick the optimal direction, but we kept wanting to make this, just throw everything at it and say, ‘If you can think of something that’s going to be better, we should just strive our hardest to do that.’ When Doom came together, it was just a perfect storm of ‘everything went right.’ … [it was] as close to a perfect game as anything we made."

Ars Technica – All content

https://opengraph.githubassets.com/1f9367f276bb74707b68c5b387ff964b9e84a2b12ad55594b2a533de7f130699/maantje/pulse-database

Get real-time insights into the status of your database

Install the package using Composer:

composer require maantje/pulse-database

In your pulse.php configuration file, register the DatabaseRecorder with the desired settings:

return [
    // ...
    'recorders' => [
        \Maantje\Pulse\Database\Recorders\DatabaseRecorder::class => [
            'connections' => [
                'mysql_another' => [
                    'values' => [
                        'Connections',
                        'Threads_connected',
                        'Threads_running',
                        'Innodb_buffer_pool_reads',
                        'Innodb_buffer_pool_read_requests',
                        'Innodb_buffer_pool_pages_total',
                        'Max_used_connections',
                    ],
                    'aggregates' => [
                        'avg' => [
                            'Threads_connected',
                            'Threads_running',
                            'Innodb_buffer_pool_reads',
                            'Innodb_buffer_pool_read_requests',
                            'Innodb_buffer_pool_pages_total',
                        ],
                        'max' => [
                            //
                        ],
                        'count' => [
                            //
                        ],
                    ],
                ],
                'mysql' => [
                    'values' => [
                        'Connections',
                        'Threads_connected',
                        'Threads_running',
                        'Innodb_buffer_pool_reads',
                        'Innodb_buffer_pool_read_requests',
                        'Innodb_buffer_pool_pages_total',
                        'Max_used_connections',
                    ],
                    'aggregates' => [
                        'avg' => [
                            'Threads_connected',
                            'Threads_running',
                            'Innodb_buffer_pool_reads',
                            'Innodb_buffer_pool_read_requests',
                            'Innodb_buffer_pool_pages_total',
                        ],
                        'max' => [
                            //
                        ],
                        'count' => [
                            //
                        ],
                    ],
                ],
            ],
        ],
    ],
];

Ensure you’re running the pulse:check command.

Integrate the card into your Pulse dashboard by publishing the vendor view (with Pulse this is normally done via php artisan vendor:publish --tag=pulse-dashboard) and then modifying the dashboard.blade.php file:

<x-pulse>

<livewire:pulse.servers cols="full" />

+ <livewire:database cols='6' title="Active threads" :values="['Threads_connected', 'Threads_running']" :graphs="[

+ 'avg' => ['Threads_connected' => '#ffffff', 'Threads_running' => '#3c5dff'],

+ ]" />

+ <livewire:database cols='6' title="Connections" :values="['Connections', 'Max_used_connections']" />

+ <livewire:database cols='full' title="Innodb" :values="['Innodb_buffer_pool_reads', 'Innodb_buffer_pool_read_requests', 'Innodb_buffer_pool_pages_total']" :graphs="[

+ 'avg' => ['Innodb_buffer_pool_reads' => '#ffffff', 'Innodb_buffer_pool_read_requests' => '#3c5dff'],

+ ]" />

<livewire:pulse.usage cols="4" rows="2" />

<livewire:pulse.queues cols="4" />

<livewire:pulse.cache cols="4" />

<livewire:pulse.slow-queries cols="8" />

<livewire:pulse.exceptions cols="6" />

<livewire:pulse.slow-requests cols="6" />

<livewire:pulse.slow-jobs cols="6" />

<livewire:pulse.slow-outgoing-requests cols="6" />

</x-pulse>

And that’s it! Enjoy enhanced visibility into your database status on your Pulse dashboard.
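As a small worked example of reading two of the values recorded above, the sketch below computes the InnoDB buffer pool hit ratio from Innodb_buffer_pool_read_requests (logical reads) and Innodb_buffer_pool_reads (reads that had to go to disk). This is not part of the package: the function name `buffer_pool_hit_ratio` is a hypothetical helper, written in Python only for illustration, and the input numbers are made up.

```python
from typing import Optional


def buffer_pool_hit_ratio(read_requests: int, disk_reads: int) -> Optional[float]:
    """Fraction of logical reads served from memory rather than from disk.

    read_requests: value of Innodb_buffer_pool_read_requests
    disk_reads:    value of Innodb_buffer_pool_reads
    Returns None when there have been no read requests yet.
    """
    if read_requests <= 0:
        return None
    return 1.0 - disk_reads / read_requests
```

For instance, `buffer_pool_hit_ratio(1000, 10)` is about 0.99, meaning roughly 1% of logical reads had to touch disk. A ratio that falls over time on the Innodb card is one hint that the buffer pool may be too small for the working set.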

Laravel News Links

https://media.notthebee.com/articles/6579bf8b4fa716579bf8b4fa72.jpg

Do you need further proof of man’s total depravity and his need for a Savior?

Not the Bee

https://media.notthebee.com/articles/657883b4a4a3b657883b4a4a3c.jpg

Christmas carols have officially peaked, you guys. Watch this video and tell me otherwise.

Not the Bee

https://s.yimg.com/os/creatr-uploaded-images/2023-12/7fc6a590-9873-11ee-bb6b-c5c58a96588d

Apple’s AI-powered Journal app is finally here. The new diary entry writing tool was first teased for iOS 17 back in June, but it only became available on Monday with the new iPhone update, nearly three months after iOS 17 itself came out. Now that Apple has released iOS 17.2, iPhone users can access the Journal app, which lets them jot down their thoughts in a digital diary. Journaling is a practice that can improve mental wellbeing, and it can also be used to fuel creative projects.

You can create traditional text entries, add voice recordings to your notes, or include recent videos or pictures. If you need inspiration, AI-derived text prompts can offer suggestions for what to write or create an entry for next. The app also predicts and proposes times for you to create a new entry based on your recent iPhone activity, which can include newer photos and videos, location history, recently listened-to playlists, and workout habits. This guide will walk you through how to get started with the Journal app and personalize your experience.

When you open the Journal app, tap the + button at the bottom of the page to create a new entry. If you want to start with a blank slate, when you tap ‘New Entry’ an empty page will appear and from there you can start typing text. You can add in recent photos from your library when you tap the photos icon below the text space, take a photo in the moment and add it to your entry or include a recorded voice memo when you tap the voice icon. You can also add locations to your entry when you tap the arrow icon at the bottom right of an entry page. This feature might be helpful for travel bloggers looking back at their trips abroad. You can edit the date of an entry at the top of the page.

Alternatively, you can create a post based on recent or recommended activities that your phone compiled — say, pictures, locations from events you attended, or contacts you recently interacted with. The recent tab will show you, in chronological order, people, photos and addresses that can inspire entries based on recent activities. The recommended tab pulls from highlighted images automatically selected from your photo memories. For example, a selection of portraits from 2022 can appear as a recommendation to inspire your next written entry. Some suggestions underneath the recommendation tab may appear within the app with ‘Writing prompts.’ For example, a block of text may appear with a question like, “What was the highlight of your trip?”

If you’re not free to write when a suggestion is made, you can also save specific moments you want to journal about and write at a later time. Using the journaling schedule feature, you can set a specific time to be notified to create an entry, which will help a user make journaling a consistent practice. Go to the Settings app on your iPhone and search for the Journal app. Turn on the ‘Journaling schedule’ feature and personalize the days and times you would like to be reminded to write entries. As a side note, in Settings, you can also opt to lock your journal using your device passcode or Face ID.

You can also organize your entries within the app using the bookmarking feature, so you can filter and find them at your own convenience. After creating an entry, tap the three dots at the bottom of your page and scroll down to tap the bookmark tab. This is the same place where you can delete or edit a journal entry.

Later on, if you want to revisit a bookmarked entry, tap the three-line icon at the corner of the main journal page to select the filter you would like applied to your entries. You can select to only view bookmarked entries, entries with photos, entries with recorded audio and see entries with places or locations. This might be helpful when your journal starts to fill up with recordings.

Using your streaming app of choice (Apple Music, Spotify or Amazon Music), you can integrate specific tracks or podcast episodes into your entries by tapping the three buttons at the bottom of your screen that open up the option to ‘share your music.’ The option to share a track to the Journal app should appear, and the track will sit at the top of a blank entry when you open the app.

You can use the same method with other applications, like Apple’s Fitness app. You can share and export a logged workout into your journal and start writing about that experience.

This article originally appeared on Engadget at https://www.engadget.com/how-to-use-apples-new-journal-app-with-the-ios-172-update-164518403.html?src=rss

Engadget

https://photos5.appleinsider.com/gallery/57678-117473-qnapnasbook-xl.jpg

QNAP has introduced its Thunderbolt 4 All-Flash NASbook, a high-density storage device aimed at video production that can provide high-speed transfers to connected Macs.

Firmly aimed at streamlining video production in pre-production and post-production, the QNAP Thunderbolt 4 All-Flash NASbook is an M.2 SSD device meant to hold fast storage drives, as well as delivering the data to users as quickly as possible.

Measuring 8.46 inches by 7.83 inches by 2.36 inches, the NASbook is a very small storage appliance. At 4.94 pounds, it’s also reasonably portable for a dedicated storage device, meaning it could be brought to filming locations instead of being kept in an office.

Capable of holding five E1S/M.2 PCIe NVMe SSDs, the unit offers automatic RAID disk replacement, so SSDs can be replaced without any system downtime. It also uses 13th-gen Intel Core hybrid architecture 8-core, 12-thread or 12-core, 16-thread processors for dealing with video production tasks, while the built-in GPU can help accelerate video transcoding.

On the connectivity side, it has two Thunderbolt 4 ports to connect to Mac and PC workstations, while 2.5GbE and 10GbE ports can be used for more conventional network-based NAS usage. A pair of USB 3.2 Gen 2 10Gbps ports can deal with ingesting video from other storage, or for connecting a DAS or JBOD for archiving projects.

The included HDMI port allows teams to display raw footage stored on the NASbook on an external display at up to 4K resolution.

The entire unit runs on the ZFS-based QuTS hero operating system, which has self-healing properties to detect and repair corrupted data in RAW images.

The QNAP Thunderbolt 4 All-Flash NASbook starts at $1,199 for the 8-core version and $1,449 for the 12-core model, and is available now.

As part of a launch offer, a free one-year 1TB allocation of myQNAPcloud cloud storage is being provided to early buyers from QNAP’s website.

AppleInsider News

https://i.kinja-img.com/image/upload/c_fill,h_675,pg_1,q_80,w_1200/6a12586b70dc3414af93d6de525cd139.png

It’s certainly disappointing that Dune: Part Two isn’t in theaters right now but sometimes waiting is the best part. Case in point, today we’ve been graced with a brand new trailer, and it’ll make you somehow even more excited for the sequel than you already are. Which is really saying something.

Dune: Part Two stars Timothée Chalamet, Zendaya, Rebecca Ferguson, Josh Brolin, Austin Butler, Florence Pugh, Dave Bautista, Christopher Walken, and many more. It opens March 1, and here’s the new trailer.

[Editor’s Note: This article is part of the developing story. The information cited on this page may change as the breaking story unfolds. Our writers and editors will be updating this article continuously as new information is released. Please check this page again in a few minutes to see the latest updates to the story. Alternatively, consider bookmarking this page or sign up for our newsletter to get the most up-to-date information regarding this topic.]

Gizmodo

https://www.percona.com/blog/wp-content/uploads/2023/12/pt-table-sync-for-Replica-Tables-With-Triggers-200×119.jpg

In Percona Managed Services, we manage Percona for MySQL, Community MySQL, and MariaDB. Sometimes a replica server hits replication errors and drifts out of sync with the primary. In this case, we can use Percona Toolkit’s pt-table-checksum and pt-table-sync to measure the data drift between the primary and replica servers and bring the replica back in sync with the primary. This blog gives you some ideas on using pt-table-sync for replica tables with triggers.

In my lab, we have two test nodes with replication set up. Both servers run Debian 11 and Percona Server for MySQL 8.0.33 (with Percona Toolkit installed).

The PRIMARY server is deb11m8 (IP: 192.168.56.189), and the REPLICA server is deb11m8s (IP: 192.168.56.190), matching the src_dsn and dst_dsn addresses in the command output further down.

Create the below table and trigger on PRIMARY, and it will replicate down to REPLICA. We have two tables: test_tab and test_tab_log. When a new row is inserted into test_tab, the trigger will fire and put the data and the user who did the insert into the test_tab_log table.

Create database testdb;
Use testdb;

Create table test_tab (
  id bigint NOT NULL,
  test_data varchar(50) NOT NULL,
  op_time TIMESTAMP NOT NULL,
  PRIMARY KEY (id, op_time)
);

Create table test_tab_log (
  id bigint NOT NULL,
  test_data varchar(50) NOT NULL,
  op_user varchar(60) NOT NULL,
  op_time TIMESTAMP NOT NULL,
  PRIMARY KEY (id, op_time)
);

DELIMITER $$
CREATE DEFINER=`larry`@`%` TRIGGER after_test_tab_insert
AFTER INSERT
ON test_tab FOR EACH ROW
BEGIN
  INSERT INTO test_tab_log(id, test_data, op_user, op_time)
  VALUES(NEW.id, NEW.test_data, USER(), NOW());
END$$
DELIMITER ;

We do an insert as a root user. You can see that after data is inserted, the trigger fires as expected.

mysql> insert into test_tab (id,test_data,op_time) values(1,'lt1',now());
Query OK, 1 row affected (0.01 sec)

mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
+----+-----------+---------------------+
1 row in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
+----+-----------+----------------+---------------------+
1 row in set (0.00 sec)

We insert another row:

insert into test_tab (id,test_data,op_time) values(2,'lt2',now());

mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+
2 rows in set (0.00 sec)

Create the DSN table that pt-table-checksum reads to find the replica (it is referenced later through --recursion-method=dsn=D=percona,t=dsns):

CREATE TABLE percona.dsns (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `parent_id` int(11) DEFAULT NULL,
  `dsn` varchar(255) NOT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO percona.dsns (dsn) VALUES ('h=192.168.56.190');

To simulate data drift, delete a row on the REPLICA with binary logging disabled for the session:

mysql> use testdb;
Database changed
mysql> set sql_log_bin=0;
Query OK, 0 rows affected (0.00 sec)

mysql> delete from test_tab where id=1;
Query OK, 1 row affected (0.00 sec)

mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
1 row in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+
2 rows in set (0.00 sec)

root@deb11m8:~/test_pt_trigger# pt-table-checksum h=192.168.56.189 --port=3306 --no-check-binlog-format \
  --no-check-replication-filters --replicate percona.checksums_test_tab \
  --recursion-method=dsn=D=percona,t=dsns \
  --tables testdb.test_tab \
  --max-load Threads_running=50 \
  --max-lag=10 --pause-file /tmp/checksums_test_tab
Checking if all tables can be checksummed ...
Starting checksum ...
            TS ERRORS  DIFFS     ROWS  DIFF_ROWS  CHUNKS SKIPPED    TIME TABLE
11-26T10:02:58      0      1        2          1       1       0   4.148 testdb.test_tab
root@deb11m8:~/test_pt_trigger#

On the REPLICA, deb11m8s, we can see that the checksum table reports the difference.

mysql> SELECT db, tbl, SUM(this_cnt) AS total_rows, COUNT(*) AS chunks
    -> FROM percona.checksums_test_tab
    -> WHERE (
    ->   master_cnt <> this_cnt
    ->   OR master_crc <> this_crc
    ->   OR ISNULL(master_crc) <> ISNULL(this_crc))
    -> GROUP BY db, tbl;
+--------+----------+------------+--------+
| db     | tbl      | total_rows | chunks |
+--------+----------+------------+--------+
| testdb | test_tab |          1 |      1 |
+--------+----------+------------+--------+
1 row in set (0.00 sec)
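To spell out what the WHERE clause in that query is checking, here is a small Python sketch of the same predicate. It is only an illustration of the SQL logic: `sql_not_equal` and `chunk_differs` are hypothetical helper names, and `None` stands in for SQL NULL. The third condition exists because a comparison against NULL is never true in SQL, so a chunk with a checksum on one side and NULL on the other would otherwise go unnoticed.

```python
def sql_not_equal(a, b):
    """Mimic SQL's `a <> b`: the result is unknown (None) if either side is NULL."""
    if a is None or b is None:
        return None
    return a != b


def chunk_differs(master_cnt, this_cnt, master_crc, this_crc):
    """Return True when a checksum chunk would be selected by the WHERE clause."""
    terms = [
        sql_not_equal(master_cnt, this_cnt),        # row counts disagree
        sql_not_equal(master_crc, this_crc),        # checksums disagree
        (master_crc is None) != (this_crc is None),  # exactly one CRC is NULL
    ]
    # An OR chain selects the row only if at least one term is definitely true.
    return any(term is True for term in terms)
```

In our lab case the primary counted two rows in the chunk and the replica one, so the first term alone is enough to flag the chunk.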

pt-table-sync reports that triggers are defined on the table and refuses to continue.

root@deb11m8:~/test_pt_trigger# pt-table-sync h=192.168.56.190,P=3306 --sync-to-master --replicate percona.checksums_test_tab --tables=testdb.test_tab --verbose --print
# Syncing via replication P=3306,h=192.168.56.190
# DELETE REPLACE INSERT UPDATE ALGORITHM START    END      EXIT DATABASE.TABLE
Triggers are defined on the table at /usr/bin/pt-table-sync line 11306. while doing testdb.test_tab on 192.168.56.190
#      0       0      0      0 0         10:03:31 10:03:31 1    testdb.test_tab

pt-table-sync has an option, --[no]check-triggers, that controls this check; passing --nocheck-triggers skips it. With the check skipped, the --print output looks good.

root@deb11m8:~/test_pt_trigger# pt-table-sync --user=larry --ask-pass h=192.168.56.190,P=3306 --sync-to-master --nocheck-triggers \
  --replicate percona.checksums_test_tab \
  --tables=testdb.test_tab \
  --verbose --print
# Syncing via replication P=3306,h=192.168.56.190
# DELETE REPLACE INSERT UPDATE ALGORITHM START    END      EXIT DATABASE.TABLE
REPLACE INTO `testdb`.`test_tab`(`id`, `test_data`, `op_time`) VALUES ('1', 'lt1', '2023-11-26 09:59:19') /*percona-toolkit src_db:testdb src_tbl:test_tab src_dsn:P=3306,h=192.168.56.189 dst_db:testdb dst_tbl:test_tab dst_dsn:P=3306,h=192.168.56.190 lock:1 transaction:1 changing_src:percona.checksums_test_tab replicate:percona.checksums_test_tab bidirectional:0 pid:4169 user:root host:deb11m8*/;
#      0       1      0      0 Nibble    10:03:54 10:03:55 2    testdb.test_tab

When we run pt-table-sync with --execute under user ‘larry’@’%’:

root@deb11m8:~/test_pt_trigger# pt-table-sync --user=larry --ask-pass h=192.168.56.190,P=3306 --sync-to-master --nocheck-triggers --replicate percona.checksums_test_tab --tables=testdb.test_tab --verbose --execute
# Syncing via replication P=3306,h=192.168.56.190
# DELETE REPLACE INSERT UPDATE ALGORITHM START    END      EXIT DATABASE.TABLE
#      0       1      0      0 Nibble    10:05:26 10:05:26 2    testdb.test_tab

------- PRIMARY -------
mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  1 | lt1       | larry@deb11m8  | 2023-11-26 10:05:26 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+
3 rows in set (0.00 sec)

------- REPLICA -------
mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  1 | lt1       |                | 2023-11-26 10:05:26 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+
3 rows in set (0.00 sec)

We can see a new row inserted into the test_tab_log table. The reason is that the trigger fired on the primary and replicated to the REPLICA when we ran pt-table-sync.

Option 1: Do the pt-table-checksum/pt-table-sync for the test_tab_log table again. This might fix the issue.

Option 2: We might need to do some work on the trigger like below (or there might be another better way).

Let’s recreate the trigger as below; the trigger will check if it’s run by ‘larry’.

Drop trigger after_test_tab_insert;

DELIMITER $$

CREATE DEFINER=`larry`@`%` TRIGGER after_test_tab_insert

AFTER INSERT

ON test_tab FOR EACH ROW

BEGIN

IF left(USER(),5) <> 'larry' and trim(left(USER(),5)) <>'' THEN

INSERT INTO test_tab_log(id,test_data, op_user,op_time)

VALUES(new.id, NEW.test_data, USER(),NOW());

END IF;

END$$

DELIMITER ;
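The guard condition in that trigger is easy to misread, so here is a rough Python model of it. This is only a sketch of the IF test, not something MySQL runs: `should_log` is a hypothetical name, and lower-casing is used on the assumption that the comparison runs under a case-insensitive collation, as is the default. The second half of the condition covers the empty USER() value that showed up in the replica's log row earlier.

```python
def should_log(current_user: str) -> bool:
    """Model of: LEFT(USER(),5) <> 'larry' AND TRIM(LEFT(USER(),5)) <> ''."""
    prefix = current_user[:5]
    # Assumption: case-insensitive comparison, as with MySQL's default collations.
    not_larry = prefix.lower() != "larry"
    # TRIM() strips spaces; an empty USER() (seen on the replica) fails this test.
    not_blank = prefix.strip(" ") != ""
    return not_larry and not_blank
```

With this model, `should_log("root@localhost")` is true, while `should_log("larry@deb11m8")` and `should_log("")` are both false. Note that the prefix test would also skip logging for any other account whose name merely starts with "larry", so a dedicated sync account with a distinctive name is the safer choice.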

And restore the data to its original out-of-sync state.

The PRIMARY

mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+

The REPLICA

mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
1 row in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+
2 rows in set (0.00 sec)

Run pt-table-sync under user ‘larry’@’%’.

root@deb11m8s:~# pt-table-sync --user=larry --ask-pass h=192.168.56.190,P=3306 --sync-to-master --nocheck-triggers --replicate percona.checksums_test_tab --tables=testdb.test_tab --verbose --execute
Enter password for 192.168.56.190:
# Syncing via replication P=3306,h=192.168.56.190,p=...,u=larry
# DELETE REPLACE INSERT UPDATE ALGORITHM START    END      EXIT DATABASE.TABLE
#      0       1      0      0 Nibble    21:02:26 21:02:27 2    testdb.test_tab

We can use pt-table-sync, which will fix the data drift for us, and the trigger will not fire when pt-table-sync is run under user larry.

-- The PRIMARY
mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+
2 rows in set (0.00 sec)

-- The REPLICA
mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
+----+-----------+---------------------+
1 row in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
+----+-----------+----------------+---------------------+
2 rows in set (0.00 sec)

Next, insert a new row as root to confirm that the trigger still fires for other users:

mysql> select user();
+----------------+
| user()         |
+----------------+
| root@localhost |
+----------------+
1 row in set (0.00 sec)

mysql> insert into test_tab (id,test_data,op_time) values(3,'lt3',now());
Query OK, 1 row affected (0.01 sec)

-- The PRIMARY
mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
|  3 | lt3       | 2023-11-26 21:04:26 |
+----+-----------+---------------------+
3 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
|  3 | lt3       | root@localhost | 2023-11-26 21:04:26 |
+----+-----------+----------------+---------------------+
3 rows in set (0.00 sec)

-- The REPLICA
mysql> select * from test_tab; select * from test_tab_log;
+----+-----------+---------------------+
| id | test_data | op_time             |
+----+-----------+---------------------+
|  1 | lt1       | 2023-11-26 09:59:19 |
|  2 | lt2       | 2023-11-26 10:01:30 |
|  3 | lt3       | 2023-11-26 21:04:26 |
+----+-----------+---------------------+
3 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+
| id | test_data | op_user        | op_time             |
+----+-----------+----------------+---------------------+
|  1 | lt1       | root@localhost | 2023-11-26 09:59:19 |
|  2 | lt2       | root@localhost | 2023-11-26 10:01:30 |
|  3 | lt3       | root@localhost | 2023-11-26 21:04:26 |
+----+-----------+----------------+---------------------+
3 rows in set (0.00 sec)

In our test case, we cover just one AFTER INSERT trigger. A live production system might have more complex scenarios (e.g., many different types of triggers defined on the table you are going to sync, auto-increment values, foreign key constraints, etc.). It is better to try the procedure in a test environment before you go to production, and to make sure you have a valid backup before making a system change.

I hope this will give you some ideas on pt-table-sync on a table with triggers.

Percona Database Performance Blog

https://laracoding.com/wp-content/uploads/2023/12/laravel-eloquent-encryption-cast-type.png

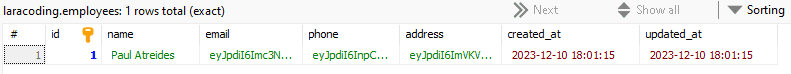

Using the Eloquent ‘encrypted’ cast type, you can instruct Laravel to encrypt specific attributes before storing them in the database. Later, when accessed through Eloquent, the data is automatically decrypted for your application to use.

Encrypting fields in a database enhances security by scrambling sensitive data. This measure shields information like emails, addresses, and phone numbers, preventing unauthorized access and maintaining confidentiality even if data is exposed.

In this guide you’ll learn to use Eloquent’s built-in ‘encrypted’ cast to encrypt sensitive data within an ‘Employee’ model to ensure personal data is stored securely.

Important note: Encryption and decryption in Laravel are tied to the APP_KEY found in the .env file. This key is generated during the installation and should remain unchanged. Avoid running ‘php artisan key:generate’ on your production server. Generating a new APP_KEY will render any encrypted data irretrievable.
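To picture why a regenerated key strands existing data, here is a minimal Python sketch using only the standard library. It is not Laravel's code. It assumes, as we understand the default encrypter to work, that each stored payload carries a MAC computed with the application key over the IV and the ciphertext, and that decryption is refused when that MAC does not verify. The helper names `sign` and `mac_is_valid`, the fixed demo keys, and the stand-in ciphertext bytes are all hypothetical.

```python
import base64
import hashlib
import hmac

# Fixed demo keys so the example is repeatable; a real APP_KEY is random.
OLD_KEY = bytes(range(32))
NEW_KEY = bytes(range(32, 64))


def sign(key: bytes, iv_b64: str, value_b64: str) -> str:
    """HMAC-SHA256 over the base64 IV followed by the base64 ciphertext."""
    message = (iv_b64 + value_b64).encode("ascii")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def mac_is_valid(key: bytes, payload: dict) -> bool:
    """Check a stored payload against the key the application has right now."""
    expected = sign(key, payload["iv"], payload["value"])
    return hmac.compare_digest(expected, payload["mac"])


# A payload "stored" while the old key was active. The value is a stand-in
# for real ciphertext; no actual encryption happens in this sketch.
payload = {
    "iv": base64.b64encode(bytes(16)).decode("ascii"),
    "value": base64.b64encode(b"opaque ciphertext bytes").decode("ascii"),
}
payload["mac"] = sign(OLD_KEY, payload["iv"], payload["value"])
```

Here `mac_is_valid(OLD_KEY, payload)` succeeds and `mac_is_valid(NEW_KEY, payload)` fails, which mirrors what happens to every encrypted column once the key changes. In the real encrypter the ciphertext itself also depends on the key, so bypassing the MAC check would not recover the data.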

With that in mind, let’s get started and apply encryption!

Begin by creating a new Laravel project if you haven’t done so already. Open your terminal and run:

composer create-project laravel/laravel model-encrypt

cd model-encrypt

Open the .env file in your project and add the database credentials you wish to use:

DB_CONNECTION=mysql

DB_HOST=127.0.0.1

DB_PORT=3306

DB_DATABASE=your-db

DB_USERNAME=your-db-user

DB_PASSWORD=your-db-password

Begin by generating an Employee model and its corresponding migration using Artisan commands:

php artisan make:model Employee -m

Open the generated migration file and add the code below to define the table and its columns, including those that we will apply encryption to later on.

As stated in the documentation on Eloquent encrypted casting, all columns that will be encrypted need to be of type ‘text’ or larger, so make sure you use the correct type in your migration!

<?php

use Illuminate\Database\Migrations\Migration;

use Illuminate\Database\Schema\Blueprint;

use Illuminate\Support\Facades\Schema;

return new class extends Migration

{

public function up(): void

{

Schema::create('employees', function (Blueprint $table) {

$table->id();

$table->string('name'); // The 'name' column which we won't encrypt

$table->text('email'); // The 'email' column, which we will encrypt

$table->text('phone'); // The 'phone' column, which we will encrypt

$table->text('address'); // The 'address' column, which we will encrypt

// Other columns...

$table->timestamps();

});

}

public function down(): void

{

Schema::dropIfExists('employees');

}

};

Run the migration to create the ‘employees’ table:

php artisan migrate

Open the Employee model and add the code below to specify the attributes to be encrypted using the $casts property. We’ll also define a fillable array so that these fields support mass assignment, which makes it easier to create models with data:

<?php

namespace App\Models;

use Illuminate\Database\Eloquent\Model;

class Employee extends Model

{

protected $casts = [

'email' => 'encrypted',

'phone' => 'encrypted',

'address' => 'encrypted',

// Other sensitive attributes...

];

protected $fillable = [

'name',

'email',

'phone',

'address',

];

// Other model configurations...

}

Once configured, saving and retrieving data from the encrypted attributes remains unchanged. Eloquent will automatically handle the encryption and decryption processes.

To test this out, I like to use Laravel Tinker. To follow along, open Tinker by running:

php artisan tinker

Then paste the following code:

use App\Models\Employee;

$employee = Employee::create([

'name' => 'Paul Atreides',

'email' => 'paul@arrakis.com', // Data is encrypted before storing

'phone' => '123-456-7890', // Encrypted before storing

'address' => 'The Keep 12', // Encrypted before storing

]);

echo $employee->email; // Automatically decrypted

echo $employee->phone; // Automatically decrypted

echo $employee->address; // Automatically decrypted

The output shows that Laravel Eloquent was able to decrypt the contents properly:

> echo $employee->email;

paul@arrakis.com⏎

> echo $employee->phone;

123-456-7890⏎

> echo $employee->address;

The Keep 12⏎

If we view the contents in our database, we can verify that the sensitive data in email, phone and address is in fact encrypted.

By using Laravel Eloquent’s built-in cast “encrypted” we can easily add a layer of security that applies encryption to sensitive data.

In our example, we learned how to encrypt sensitive employee data such as email, address and phone, and demonstrated how the application can still use it.

Now you can apply this technique to your own applications and ensure the privacy of your users is up to today’s standards. Happy coding!

Laravel News Links