Learn how to fully localize your Laravel application in this tutorial. Localization is essential for your business, and that includes localizing your Laravel application.

Learn how to manage your translation files, work with localized strings and create localized routes.

Laravel’s localization features provide a way to work with localized strings, but with some extra code you can turn your application into a truly multilingual app.

Installation

We’ll assume that, as a Laravel user, you already have a Laravel application or have just created a new one. If not, follow whichever installation method suits you best from the official documentation or the recent Laravel Bootcamp.

Language files

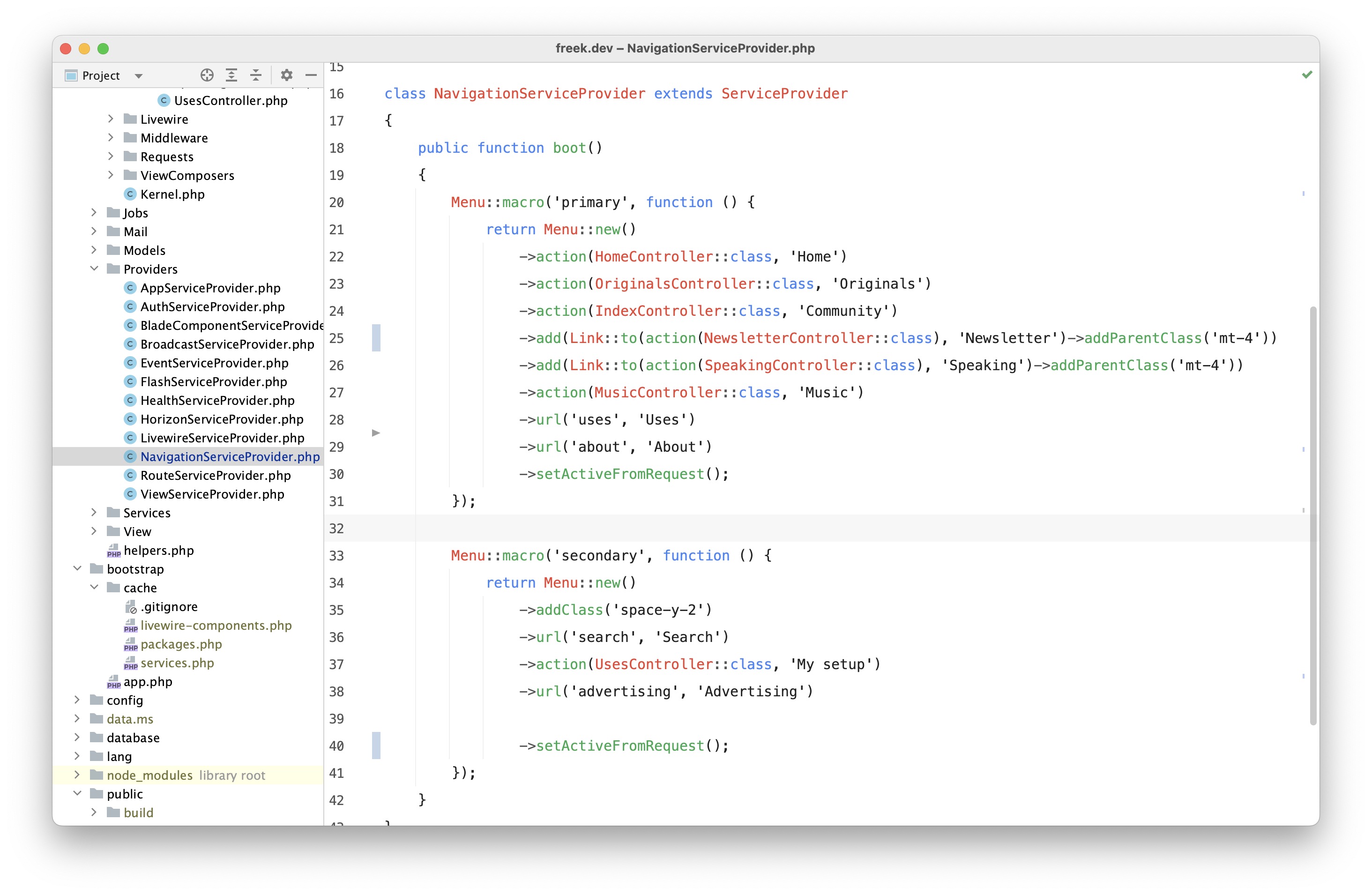

Language files are stored within the lang directory. Laravel provides two ways to organize your translations.

First, language strings can be stored in .php files, in one directory for each language supported by the application.

    /lang
        /en
            messages.php
        /es
            messages.php

With this method, you define each translation string under a short key inside a PHP file that returns an array. The string below is then referenced as messages.welcome:

    <?php

    // lang/en/messages.php

    return [
        'welcome' => 'Welcome to our application!',
    ];

Second, translation strings can be stored in JSON files placed directly within the lang directory. Each language supported by your application should have its own JSON file there.

    /lang
        en.json
        es.json

With this method, the key also serves as the translation for the default locale. For example, if your application has a Spanish translation and English as the default locale, you should create a lang/es.json file:

    {
        "I love programming.": "Me encanta programar."
    }

You should decide which method is the most suitable for your project depending on your translation strings volume and organization.

While the official docs suggest JSON files for applications with a large number of translatable strings, we’ve found that this is not always the better choice; it depends on your application and your team.

Our advice is to try and investigate which method works best for your application.

Using translation strings

You may retrieve a translation string using the __ helper function. How you refer to the string depends on the strategy you chose:

- For short keys (PHP array files): use the dot syntax. For example, to retrieve the welcome string from lang/en/messages.php, use __('messages.welcome').

- For default translation string keys (JSON files): pass the default-language string itself to the __ function. For example, __('I love programming.').

In both cases, if the translation does not exist, the __ function returns the translation string key.
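The lookup rules above can be illustrated with a small plain-PHP sketch. It is not Laravel's translator: the translate() function and the two in-memory arrays are hypothetical stand-ins for the __ helper and the language files, and the order of checks (JSON strings first, then short keys) is a simplification.

```php
<?php

// Hypothetical in-memory stand-ins for lang/en/messages.php and lang/es.json.
$shortKeys = [
    'messages' => ['welcome' => 'Welcome to our application!'],
];
$jsonStrings = [
    'I love programming.' => 'Me encanta programar.',
];

/**
 * Simplified lookup: JSON strings first, then dot-notation short keys,
 * and finally the key itself when nothing matches.
 */
function translate(string $key, array $jsonStrings, array $shortKeys): string
{
    if (isset($jsonStrings[$key])) {
        return $jsonStrings[$key];
    }

    // Walk the nested array one dot-separated segment at a time.
    $value = $shortKeys;
    foreach (explode('.', $key) as $segment) {
        if (! is_array($value) || ! array_key_exists($segment, $value)) {
            return $key; // missing translation: return the key unchanged
        }
        $value = $value[$segment];
    }

    return is_string($value) ? $value : $key;
}

echo translate('messages.welcome', $jsonStrings, $shortKeys), PHP_EOL;
echo translate('I love programming.', $jsonStrings, $shortKeys), PHP_EOL;
echo translate('messages.missing', $jsonStrings, $shortKeys), PHP_EOL;
```

The last call has no matching translation, so the sketch returns messages.missing as-is, which mirrors what you would see in a view when a key is missing.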

Starting here, we’ll use the short keys syntax for our examples, but you can use what works best for your application.

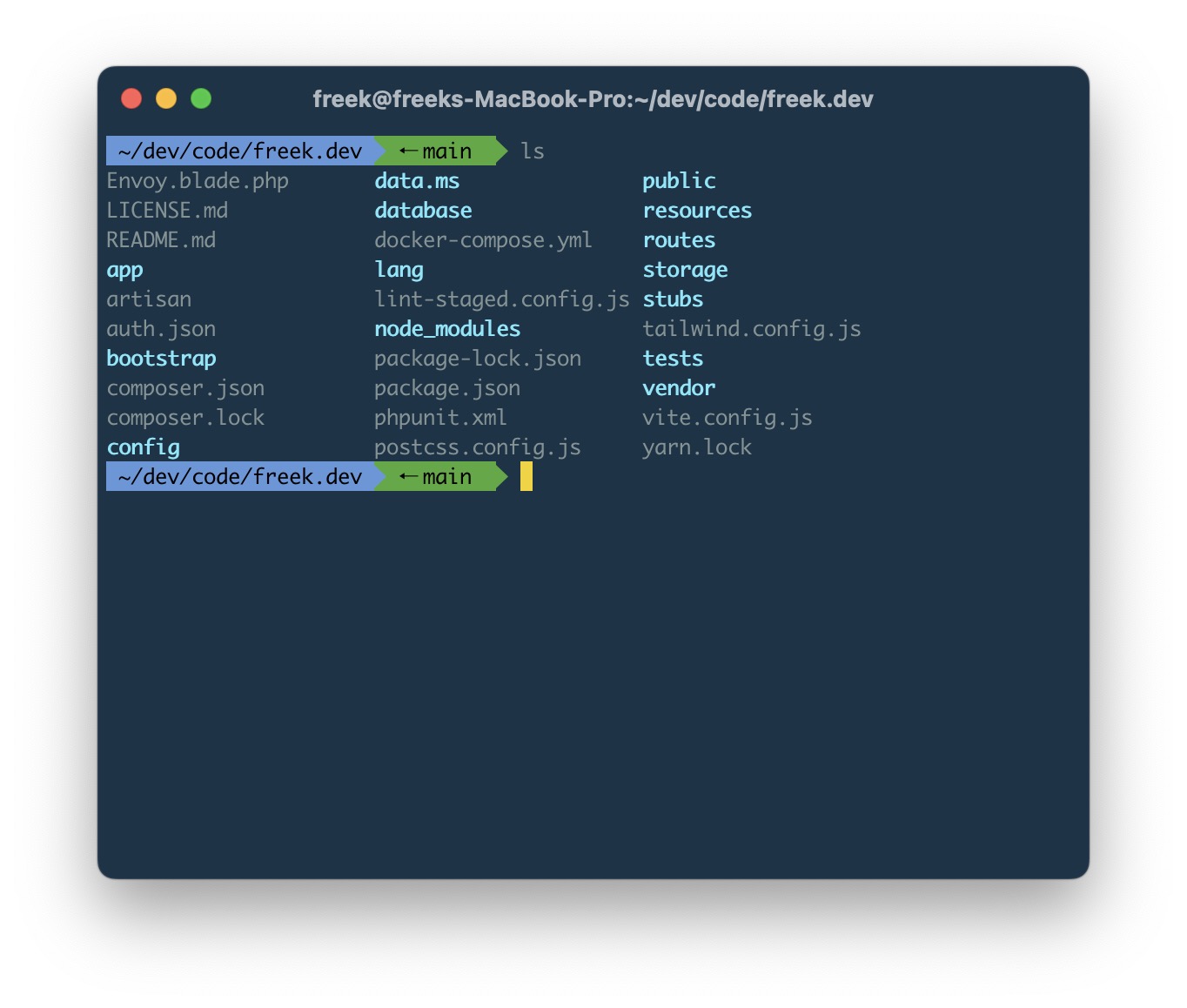

Configuring Laravel locales

Default and fallback locales for Laravel are configured in the config/app.php configuration file:

    /*
    |--------------------------------------------------------------------------
    | Application Locale Configuration
    |--------------------------------------------------------------------------
    |
    | The application locale determines the default locale that will be used
    | by the translation service provider. You are free to set this value
    | to any of the locales which will be supported by the application.
    |
    */

    'locale' => 'en',

    /*
    |--------------------------------------------------------------------------
    | Application Fallback Locale
    |--------------------------------------------------------------------------
    |
    | The fallback locale determines the locale to use when the current one
    | is not available. You may change the value to correspond to any of
    | the language folders that are provided through your application.
    |
    */

    'fallback_locale' => 'en',

We also want to define the other locales the application supports, apart from the default one. We can do that by adding a custom available_locales key (it is not a built-in Laravel setting) to this configuration file, right after the existing locale key:

    'locale' => 'en',

    'available_locales' => [
        'en' => 'English',
        'pt' => 'Português',
        'de' => 'Deutsch',
    ],

Redirect and switch locales

There are lots of different ways to set the application locale for every request on your application, but in this article, we’ll create a Middleware to detect the route locale and set the application language.

Creating the middleware

You can create the Middleware using the make:middleware Artisan command:

    php artisan make:middleware Localized

To use this middleware in our route files, we need to register it in the app/Http/Kernel.php file, in the application’s route middleware list (in Laravel 10 this property is named $middlewareAliases, and Laravel 11 registers aliases in bootstrap/app.php instead):

    /**
     * The application's route middleware.
     *
     * These middleware may be assigned to groups or used individually.
     *
     * @var array<string, class-string|string>
     */
    protected $routeMiddleware = [
        // ... other middlewares
        'localized' => \App\Http\Middleware\Localized::class,
    ];

And finally, we can add the localization features to our middleware:

    <?php

    namespace App\Http\Middleware;

    use Closure;
    use Illuminate\Http\Request;

    class Localized
    {
        /**
         * Handle an incoming request.
         *
         * @param  \Illuminate\Http\Request  $request
         * @param  \Closure(\Illuminate\Http\Request): (\Illuminate\Http\Response|\Illuminate\Http\RedirectResponse)  $next
         * @return \Illuminate\Http\Response|\Illuminate\Http\RedirectResponse
         */
        public function handle(Request $request, Closure $next)
        {
            // No locale in the URL: redirect to the localized version of the path.
            if (! $routeLocale = $request->route()->parameter('locale')) {
                return redirect($this->localizedUrl($request->path()));
            }

            // Unknown locale: treat the segment as part of the path and redirect.
            if (! array_key_exists($routeLocale, config('app.available_locales'))) {
                return redirect($this->localizedUrl($request->path()));
            }

            // Remember the locale for future redirects and apply it to this request.
            $request->session()->put('locale', $routeLocale);
            app()->setLocale($routeLocale);

            return $next($request);
        }

        private function localizedUrl(string $path, ?string $locale = null): string
        {
            // Fall back to the last used locale, then to the default locale,
            // so a first-time visitor is never redirected to the same URL again.
            if (! $locale) {
                $locale = request()->session()->get('locale', config('app.locale'));
            }

            return url(trim($locale . '/' . $path, '/'));
        }
    }
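To make the middleware's decision easier to follow, here is a minimal plain-PHP sketch of the same logic without any framework code. The resolveLocale() function and its return shape are hypothetical, invented for illustration. The sketch also assumes that a visitor with no locale stored in the session should be sent to the default locale, so that the redirect never points back to the same URL.

```php
<?php

/**
 * Decide what the middleware should do for a request.
 * Returns ['locale' => ...] to continue, or ['redirect' => ...] to redirect.
 * Hypothetical helper: the names and the return shape are illustrative only.
 */
function resolveLocale(
    ?string $routeLocale,
    string $path,
    ?string $sessionLocale,
    array $available,
    string $default
): array {
    // A known locale in the URL is applied directly.
    if ($routeLocale !== null && array_key_exists($routeLocale, $available)) {
        return ['locale' => $routeLocale];
    }

    // Missing or unknown locale: prefix the path with the last used locale,
    // or with the default locale when the session has none (assumption).
    $locale = $sessionLocale ?? $default;

    return ['redirect' => trim($locale . '/' . $path, '/')];
}

$available = ['en' => 'English', 'pt' => 'Português', 'de' => 'Deutsch'];

print_r(resolveLocale('pt', 'pt', null, $available, 'en'));       // continue with "pt"
print_r(resolveLocale(null, '/', 'de', $available, 'en'));        // redirect to "de"
print_r(resolveLocale('about', 'about', null, $available, 'en')); // redirect to "en/about"
```

Keeping the decision in a pure function like this also makes it straightforward to unit test without booting the framework.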

Adding locale to routes

Modify your routes/web.php file to add the locale prefix and the redirection middleware:

    <?php

    use Illuminate\Support\Facades\Route;

    /**
     * Localized routes
     */
    Route::prefix('{locale?}')
        ->middleware('localized')
        ->group(function () {
            Route::get('/', function () {
                return view('welcome');
            });
        });

    /**
     * Other non-localized routes
     */

Switching locale

At this point, you can simply add your locale prefix to your URL, and the middleware will set your application language. For example, /pt will set the application locale to Portuguese. Also, if you open any localized route without a locale prefix, the user will be redirected to the last used locale stored in the session.

Finally, you can loop over the available locales to create a localized navigation:

    <nav>
        @foreach(config('app.available_locales') as $locale => $language)
            <a href="{{ url($locale) }}">{{ $language }}</a>
        @endforeach
    </nav>

Keep in mind that this is a really simple example. A final version should generate localized links for the current URL on each request.
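One way to generate links for the current URL is to swap the leading locale segment of the request path. The following plain-PHP sketch shows the idea; localizedLinks() is a hypothetical helper, not part of Laravel, and it assumes the locale is always the first path segment.

```php
<?php

/**
 * Build one link per available locale for the given request path,
 * replacing the leading locale segment when there is one.
 * Hypothetical helper for illustration.
 */
function localizedLinks(string $path, array $available): array
{
    // Split the path into non-empty segments.
    $segments = array_values(array_filter(explode('/', $path), 'strlen'));

    // Drop the current locale prefix if the first segment is a known locale.
    if ($segments !== [] && array_key_exists($segments[0], $available)) {
        array_shift($segments);
    }

    $links = [];
    foreach ($available as $locale => $language) {
        $links[$language] = '/' . implode('/', array_merge([$locale], $segments));
    }

    return $links;
}

$available = ['en' => 'English', 'pt' => 'Português', 'de' => 'Deutsch'];

print_r(localizedLinks('pt/blog/hello', $available));
```

In a Blade view you could pass request()->path() and config('app.available_locales') to a helper like this and loop over the result to render the navigation.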

Parameters

When retrieving translation strings, you may wish to replace placeholders with custom parameters. To do so, define a placeholder with a : prefix. For example, you may define a welcome message with a :name placeholder:

    'welcome' => 'Welcome, :name',

And then retrieve the translation string, passing an array of replacements as the second argument:

    __('messages.welcome', ['name' => 'Joan']);

You can also change the case of the placeholder to capitalize the replacement accordingly:

    'welcome' => 'Welcome, :NAME', // Welcome, JOAN
    'goodbye' => 'Goodbye, :Name', // Goodbye, Joan

This is especially useful when one language places the placeholder at the start of the sentence and another places it in the middle:

    'accepted' => 'The :attribute must be accepted.', // English
    'accepted' => ':Attribute moet geaccepteerd zijn.', // Dutch
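The case-sensitive replacement can be approximated in a few lines of plain PHP. This is a simplified sketch, not Laravel's implementation: replacePlaceholders() is a hypothetical function, and it uses the byte-based ucfirst() and strtoupper(), so it only handles ASCII values correctly.

```php
<?php

/**
 * Simplified placeholder replacement: ":name" keeps the value as given,
 * ":Name" capitalizes the first letter and ":NAME" upper-cases the value.
 * Hypothetical helper; ucfirst()/strtoupper() are not multibyte-aware.
 */
function replacePlaceholders(string $line, array $replace): string
{
    $pairs = [];
    foreach ($replace as $key => $value) {
        $value = (string) $value;
        $pairs[':' . ucfirst($key)] = ucfirst($value);
        $pairs[':' . strtoupper($key)] = strtoupper($value);
        $pairs[':' . $key] = $value;
    }

    // strtr() tries the longest keys first and never re-scans replaced text.
    return strtr($line, $pairs);
}

echo replacePlaceholders('Welcome, :name', ['name' => 'joan']), PHP_EOL;
echo replacePlaceholders('Welcome, :NAME', ['name' => 'joan']), PHP_EOL;
echo replacePlaceholders('Goodbye, :Name', ['name' => 'joan']), PHP_EOL;
echo replacePlaceholders(':Attribute moet geaccepteerd zijn.', ['attribute' => 'naam']), PHP_EOL;
```

The Dutch line shows why the capitalized form matters: the same lower-case attribute name can start a sentence in one language and sit in the middle of it in another.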

Make it easier with Locale

As a developer, after working on internationalization for your application, you may also be involved in the localization process with translators and the rest of your team. That can be a long process, and it is a good reason to choose a translation management tool like Locale.

With Locale, your team and translators will be able to enjoy a next-level localization workflow built for productivity.

Get started for free now and level up your workflow.

You can learn how simple the configuration process is by following our 4-step guide.

And, if you are a visual learner and prefer to watch the process, we’ve recorded a step-by-step video showing how easy it is.
