Disclaimer: Some of the links in this article are affiliate links. This means that if you sign up using one of my links, I’ll get a tiny kickback that helps to support the blog.

Introduction

Deciding which platform to use for hosting your PHP and Laravel applications can be pretty difficult. There are a huge number of hosting providers available, and each one offers an equally huge number of services. So choosing how and where to host your applications can be daunting, especially if it’s your first time doing it.

For example, if you’re a freelance web developer (like me) and want to host your small blog, you’ll likely opt for a completely different hosting option than if you’re a large company with a high-traffic website.

Generally, I like to stick with the most popular options that other developers are using. This usually means that if I get stuck with something, I’m more likely to be able to reach out to the community for help than if I were to use an obscure hosting platform that no one has ever heard of.

So I made a poll on Twitter and asked the PHP and Laravel community which hosting provider they use for their own PHP projects. I also asked them to comment if they used a provider that wasn’t listed in the poll. I was really pleased to see that the poll got 1,292 votes!

In this Quickfire post, we’re going to take a look at the results of the poll and the most popular hosting providers.

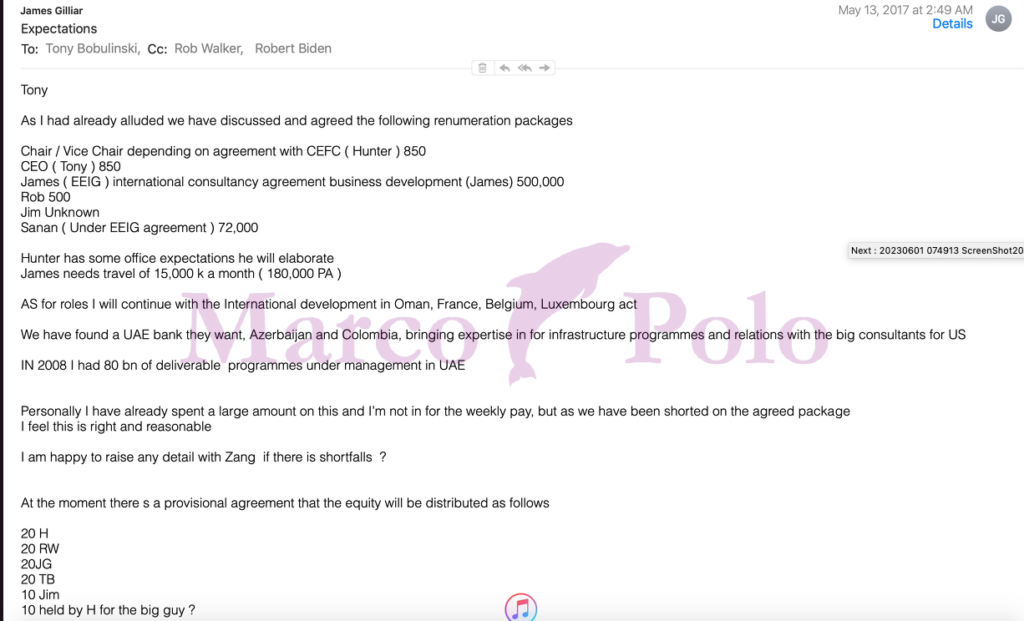

The Tweet

Here’s the tweet that I posted:

The Results

The votes were as follows:

- Digital Ocean – 40.6%

- AWS – 25.9%

- Hetzner – 16.6%

- Other – 16.9%
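To put those percentages into perspective, here’s a quick sketch in Python that turns them into rough vote counts. Python is just my choice for the example, and the `estimate_votes` helper is purely illustrative. It assumes the percentages shown by Twitter are rounded, so treat the numbers as estimates rather than exact counts.

```python
# Rough conversion of the poll percentages into approximate vote counts.
# Assumption: the displayed percentages are rounded, so these are estimates.
TOTAL_VOTES = 1292

results = {
    "Digital Ocean": 40.6,
    "AWS": 25.9,
    "Hetzner": 16.6,
    "Other": 16.9,
}


def estimate_votes(percentages, total):
    """Return the approximate number of votes behind each percentage."""
    return {name: round(total * pct / 100) for name, pct in percentages.items()}


estimated = estimate_votes(results, TOTAL_VOTES)

for name, votes in estimated.items():
    print(f"{name}: ~{votes} votes")
```

Running this gives roughly 525 votes for Digital Ocean, 335 for AWS, 214 for Hetzner, and 218 for “Other”.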

1. Digital Ocean

According to the poll, the most popular hosting provider for PHP applications is Digital Ocean, with 40.6% of the votes.

I actually use Digital Ocean myself for hosting my Laravel projects (and this very blog you’re reading right now) and I’m more than happy with the service.

Not only do I use their “Droplet” app servers, but I also use their “Spaces” object storage for storing my assets (such as images and videos).

One of the biggest reasons for me using Digital Ocean was that I found it nice and simple to use in comparison to AWS. DevOps and infrastructure aren’t my strong points, and they aren’t something I have a huge amount of interest in. I mainly love to work on code and build cool features. So I chose to use Digital Ocean because I felt like it was really easy to set up and get started.

It’s even easier when you pair it with a tool like Laravel Forge to provision and manage the servers.

Personally, if any developer starting out in the PHP world asked me which hosting provider they should use, I’d probably recommend Digital Ocean. Of course, you may want to use something like AWS once the project starts to grow and you need access to more advanced features. But for a large number of PHP and Laravel projects, Digital Ocean is a great choice.

You can sign up to Digital Ocean and get $200 of credit by using my referral link. Even if you’re not in a position to use it right now, it could be a great chance for you to browse around the Digital Ocean dashboard and see what cool features they have on offer.

2. AWS

The second most popular hosting provider for PHP applications is AWS, with 25.9% of the votes.

In all honesty, I expected AWS to be the most popular hosting provider. I think this is because AWS is so popular in the tech world, and it’s the one that I hear about the most.

AWS is a great platform with a huge range of features. You can use it for hosting your small Laravel blog, all the way up to your large enterprise applications that require complex infrastructure.

I’ve used AWS in the past, but I’ll admit that I found it pretty overwhelming because there’s so much to learn. I think it’s a really powerful tool, but it’s definitely not for the faint-hearted! However, there are some awesome tutorials out there that can help you get started with AWS.

If you pair AWS with Laravel Forge, you can have a really powerful setup for your PHP and Laravel apps. You get access to the infrastructure and DevOps features of AWS, along with the simplicity of Forge for managing your servers. This means that, most of the time, you’ll be using Forge to interact with AWS and won’t actually need to use the AWS dashboard. As a result, AWS becomes a lot easier to use, and you keep the ability to scale up your infrastructure if needed.

Similarly, depending on the type of application you’re building, you may also want to pair up your AWS account with something like Laravel Vapor. This gives you the ability to deploy your Laravel apps in a serverless environment, which can be really powerful, especially for applications that experience spikes in traffic.

3. Hetzner

Hetzner was the third most popular named hosting provider for PHP applications, with 16.6% of the votes in the poll (just behind the combined “Other” option at 16.9%).

Hetzner is a German hosting provider that offers a range of different hosting options (such as dedicated servers, cloud servers, and managed servers). They were founded way back in 1997, so they have a lot of experience in the hosting world!

I’ve personally never used Hetzner itself. However, I have worked on Laravel projects for clients that use Hetzner for their hosting. I never ran into any issues with it, and it seemed to work really well.

From hearing other developers talk about Hetzner, it seems like it’s a great option for hosting your PHP and Laravel apps. In comparison to some other providers, you can often get more performant servers for lower prices. I’ve been hearing it mentioned in conversations more and more recently, so it seems like it might be something for me to check out in more depth at some point.

Laravel Forge also has the option to provision and manage servers on Hetzner. So if you’re looking for a simple way to manage your Hetzner servers, Forge could be a great option.

4. Vultr

Vultr was another popular hosting platform that was regularly mentioned in the poll’s comments.

Vultr is a cloud hosting provider that offers a range of different hosting options. At the time of writing this article, they have 31 worldwide locations, so there’s a great range of options for where you can host your applications.

I’ve never personally used Vultr for hosting real-life applications, but I have given it a spin to see what the experience of using it was like. In general, I found it really easy to use and get set up. You can also provision and manage your Vultr servers directly from Laravel Forge, which is really handy.

You can sign up for Vultr using my referral link, and I’ll get a small commission that helps support the blog.

5. Akamai (previously Linode)

Akamai (previously known as Linode) was another popular hosting provider that I saw mentioned in the comments of the poll.

Akamai is a cloud hosting provider that offers a range of different hosting options. They have a huge number of worldwide locations so that you can host your applications in the location that’s closest to your users.

Similar to Vultr, I’ve never used Akamai (or Linode) to host any real-life projects. But I have given it a spin in the past to see what it was like. I found the dashboard interface really easy to understand and use.

Just like with the other hosting providers covered above, you can provision and manage your Akamai servers directly from Laravel Forge.

You can sign up for Akamai and get $100 of free credit to play around with the platform, and I’ll get a small commission that helps support the blog.

6. Other

There were also a few other honourable mentions that I saw in the comments of the poll for hosting Laravel and PHP applications. These were:

- Siteground (for hosting older WordPress projects)

- Heroku

- Contabo

- Upcloud

Limitations

It’s important to remember that these results are by no means a representation of the entire PHP community. The poll was only open for 24 hours and only ran on Twitter. I mostly interact with the Laravel community on Twitter, so I’d guess that a large portion of the answers came from Laravel developers.

So there’s a possibility that these results could be skewed towards a certain type of developer. If I were more active in other PHP communities, such as Symfony or WordPress, the results might have looked different.

But I do still think they give a good indication of the most popular hosting providers, even if the numbers aren’t 100% accurate.