Dear MySQL users,

The MySQL developer tools team announces 8.0.13 as the general availability (GA) release of MySQL Workbench 8.0.

For the full list of changes in this revision, visit http://dev.mysql.com/doc/relnotes/workbench/en/changes-8-0.html

For discussion, join the MySQL Workbench Forums: http://forums.mysql.com/index.php?152

The release is now available in source and binary form for a number of platforms from our download pages at: http://dev.mysql.com/downloads/tools/workbench/

Enjoy!

testssl.sh – Test SSL Security Including Ciphers, Protocols & Detect Flaws

userid@somehost:~ % testssl.sh

testssl.sh <options>

-h, --help what you're looking at

-b, --banner displays banner + version of testssl.sh

-v, --version same as previous

-V, --local pretty print all local ciphers

-V, --local <pattern> which local ciphers with <pattern> are available?

(if pattern not a number: word match)

testssl.sh <options> URI ("testssl.sh URI" does everything except -E)

-e, --each-cipher checks each local cipher remotely

-E, --cipher-per-proto checks those per protocol

-f, --ciphers checks common cipher suites

-p, --protocols checks TLS/SSL protocols (including SPDY/HTTP2)

-y, --spdy, --npn checks for SPDY/NPN

-Y, --http2, --alpn checks for HTTP2/ALPN

-S, --server-defaults displays the server's default picks and certificate info

-P, --server-preference displays the server's picks: protocol+cipher

-x, --single-cipher <pattern> tests matched <pattern> of ciphers

(if <pattern> not a number: word match)

-c, --client-simulation test client simulations, see which client negotiates with cipher and protocol

-H, --header, --headers tests HSTS, HPKP, server/app banner, security headers, cookie, reverse proxy, IPv4 address

-U, --vulnerable tests all vulnerabilities

-B, --heartbleed tests for heartbleed vulnerability

-I, --ccs, --ccs-injection tests for CCS injection vulnerability

-R, --renegotiation tests for renegotiation vulnerabilities

-C, --compression, --crime tests for CRIME vulnerability

-T, --breach tests for BREACH vulnerability

-O, --poodle tests for POODLE (SSL) vulnerability

-Z, --tls-fallback checks TLS_FALLBACK_SCSV mitigation

-F, --freak tests for FREAK vulnerability

-A, --beast tests for BEAST vulnerability

-J, --logjam tests for LOGJAM vulnerability

-D, --drown tests for DROWN vulnerability

-s, --pfs, --fs, --nsa checks (perfect) forward secrecy settings

-4, --rc4, --appelbaum which RC4 ciphers are being offered?

special invocations:

-t, --starttls <protocol> does a default run against a STARTTLS enabled <protocol>

--xmpphost <to_domain> for STARTTLS enabled XMPP it supplies the XML stream to-'' domain -- sometimes needed

--mx <domain/host> tests MX records from high to low priority (STARTTLS, port 25)

--ip <ip> a) tests the supplied <ip> v4 or v6 address instead of resolving host(s) in URI

b) arg "one" means: just test the first DNS returns (useful for multiple IPs)

--file <fname> mass testing option: Reads command lines from <fname>, one line per instance.

Comments via # allowed, EOF signals end of <fname>. Implicitly turns on "--warnings batch"

partly mandatory parameters:

URI host|host:port|URL|URL:port (port 443 is assumed unless otherwise specified)

pattern an ignore case word pattern of cipher hexcode or any other string in the name, kx or bits

protocol is one of the STARTTLS protocols ftp,smtp,pop3,imap,xmpp,telnet,ldap

(for the latter two you need e.g. the supplied openssl)

tuning options (can also be preset via environment variables):

--bugs enables the "-bugs" option of s_client, needed e.g. for some buggy F5s

--assume-http if protocol check fails it assumes HTTP protocol and enforces HTTP checks

--ssl-native fallback to checks with OpenSSL where sockets are normally used

--openssl <PATH> use this openssl binary (default: look in $PATH, $RUN_DIR of testssl.sh)

--proxy <host>:<port> connect via the specified HTTP proxy

-6 use also IPv6. Works only with supporting OpenSSL version and IPv6 connectivity

--sneaky leave less traces in target logs: user agent, referer

output options (can also be preset via environment variables):

--warnings <batch|off|false> "batch" doesn't wait for keypress, "off" or "false" skips connection warning

--quiet don't output the banner. By doing this you acknowledge usage terms normally appearing in the banner

--wide wide output for tests like RC4, BEAST. PFS also with hexcode, kx, strength, RFC name

--show-each for wide outputs: display all ciphers tested -- not only succeeded ones

--mapping <no-rfc> don't display the RFC Cipher Suite Name

--color <0|1|2> 0: no escape or other codes, 1: b/w escape codes, 2: color (default)

--colorblind swap green and blue in the output

--debug <0-6> 1: screen output normal but keeps debug output in /tmp/. 2-6: see "grep -A 5 '^DEBUG=' testssl.sh"

file output options (can also be preset via environment variables):

--log, --logging logs stdout to <NODE-YYYYMMDD-HHMM.log> in current working directory

--logfile <logfile> logs stdout to <file/NODE-YYYYMMDD-HHMM.log> if file is a dir or to specified log file

--json additional output of findings to JSON file <NODE-YYYYMMDD-HHMM.json> in cwd

--jsonfile <jsonfile> additional output to JSON and output JSON to the specified file

--csv additional output of findings to CSV file <NODE-YYYYMMDD-HHMM.csv> in cwd

--csvfile <csvfile> set output to CSV and output CSV to the specified file

--append if <csvfile> or <jsonfile> exists rather append than overwrite

All options requiring a value can also be called with '=' e.g. testssl.sh -t=smtp --wide --openssl=/usr/bin/openssl <URI>.

<URI> is always the last parameter.

Need HTML output? Just pipe through "aha" (ANSI HTML Adapter: github.com/theZiz/aha) like

"testssl.sh <options> <URI> | aha >output.html"

userid@somehost:~ %
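
If you drive testssl.sh from a script, the two rules stated in the help text (value options may be written with '=', and <URI> is always the last parameter) can be sketched roughly as below. This is only an illustration: the helper name `build_testssl_command` and the target `mail.example.com:25` are made up for the example, and the snippet only assembles the command line without running anything.

```python
import shlex


def build_testssl_command(uri, flags=(), valued=None, script="testssl.sh"):
    """Assemble an argv list for testssl.sh (hypothetical helper).

    flags  -- options that take no value, e.g. "--wide"
    valued -- mapping of option -> value, emitted in the option=value form
    uri    -- the target; the help text says it must come last
    """
    argv = [script]
    argv.extend(flags)
    for option, value in (valued or {}).items():
        argv.append(f"{option}={value}")
    argv.append(uri)  # <URI> is always the last parameter
    return argv


# Mirrors the example from the help text; the host is a placeholder.
cmd = build_testssl_command(
    "mail.example.com:25",
    flags=["--wide"],
    valued={"-t": "smtp", "--openssl": "/usr/bin/openssl"},
)
print(shlex.join(cmd))
```

The resulting list can be handed to `subprocess.run(cmd)` on a machine where testssl.sh is on the PATH; keeping it as a list rather than a single string avoids shell quoting issues.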

via Darknet – The Darkside

The Science of Snow Driving

If you live somewhere that snow coats roads in the wintertime, you’ll want to check out Engineering Explained’s latest clip, as Jason walks us through the variables at work when driving on slippery surfaces, and provides some tips on how to maintain control on the snow.

The Library of Congress’s Collection of Early Films

National Screening Room, a project by the Library of Congress, is a collection of early films (from the late 19th to most of the 20th century), digitized by the LOC for public use and perusal. Sadly, the site doesn’t make clear which of the films are in the public domain, and so free to remix and reuse, but it’s still fun to browse the collection for a look at cultural and cinematic history.

There’s a bunch of early Thomas Edison kinetoscopes, including this kiss between actors May Irwin and John C. Rice that reportedly brought the house down in 1896:

Or these two 1906 documentaries of San Francisco, one from shortly before the earthquake, and another just after (the devastation is really remarkable, and the photography, oddly beautiful):

There’s a silent 1926 commercial for the first wave of electric refrigerators, promoted by the Electric League of Pittsburgh, promising an exhibition with free admission! (wow guys, thanks)

There are also 33 newsreels made during the 40s and 50s by All-American News, the first newsreels aimed at a black audience. As you might guess by the name and the dates, it’s pretty rah-rah, patriotic, support-the-war-effort stuff, but also includes some slice-of-life stories and examples of economic cooperation among working-to-middle-class black families at the time.

I hope this is just the beginning, and we can get more and more of our cinematic patrimony back into the public commons where it belongs.

via kottke.org

The 6 Best Websites to Learn How to Hack Like a Pro

Want to learn how to hack? Hacking isn’t a single subject that anyone can pick up overnight. If you want to hack like a pro, you won’t be able to read just one article and visit a few hacking websites.

But if you spend a lot of time studying and practicing your craft, you can learn to hack.

White Hat vs. Black Hat Hacking

There are two forms of hacking: “white hat” and “black hat”.

White hat hackers call themselves ethical hackers, in that they find vulnerabilities in an effort to make systems and applications more secure.

However, there’s a whole other community of hackers—black hat hackers—who find vulnerabilities only to exploit them as much as possible.

Now that you know what sort of community you may be entering, let’s get on with the list of top sites where you can learn to hack.

At Hacking Tutorial, you’ll find a list of resources that’ll teach you some in-depth tricks to hacking various apps, operating systems, and devices.

Some examples of the content you’ll find here include:

- Articles like, “3 Steps GMail MITM Hacking Using Bettercap”

- Tutorials like, “How to Bypass Windows AppLocker”

- Hacking news

- Phone hacking tips

- Reviews of online hacking tools

- A significant library of free hacking eBooks and reports

The articles are usually short, and the grammar isn’t always perfect. However, many include highly technical, step-by-step instructions on how to do the task at hand.

The tricks and scripts work unless the exploit has been patched. You may have to dig through some non-hacking articles. But for the volume of technical tricks and resources you’ll find there, it deserves a mention.

Hackaday is a blog made for engineers. It’s less about hacking with code, and more about hacking just about anything.

Posts cover innovative projects, including robotic builds, modified vintage electronics and gadgets, and much more.

Over the years, Hackaday has grown into a fairly popular blog.

They also have another domain called hackaday.io, where they host reader-submitted engineering projects. These include some really cool projects and innovative designs.

This site redefines the meaning of the word hacking by helping you learn how to hack electronic devices like a Gameboy or a digital camera and completely modify them.

They encourage readers to build electronics for the sole purpose of hacking other commercial devices. They also host an annual Hackaday Prize competition. This is where thousands of hardware hackers compete to win the ultimate prize for the best build of the year.

Hack In The Box has changed significantly over the years. The site is made up of four major subdomains, each with a specific purpose meant to serve hackers around the world.

The site remains focused on security and ethical hacking. The news and magazine sections showcase frequently updated content specifically for hackers or those learning to hack.

The four major sections of the site include:

- HITBSecNews: This popular blog provides security news covering every major industry. Topics include major platforms like Microsoft, Apple, and Linux. Other topics include international hacking news, science and technology, and even law.

- HITBSecConf: This is an annual conference drawing in hacking professionals and researchers from around the world. It’s held every year in the Netherlands.

- HITBPhotos: A simple collection of photo albums, mostly covering images from the yearly conference.

- HITBMagazine: This page highlights the quarterly print magazine that Hack In The Box used to send out to subscribers until 2014. Even though the blog section of the site is still active and frequently updated, no additional print magazines are being produced.

This site is less of a place to go for actual technical hacking tips, and more of a daily spot to get your latest fix of online hacking news.

HITB is a great resource for news for anyone interested in the latest gossip throughout the international hacking community.

Hack This Site.org is one of the coolest, free programmer training sites where you can learn how to hack. Just accept one of the challenges along the left navigation pane of the main page.

The site designers offer various “missions”. This is where you need to figure out the vulnerability of a site and then attempt to use your new-found hacking skills (you’ve carefully studied all of the articles on the site, right?) to hack the web page.

Missions include Basic, Realistic, Application, Programming and many others.

If you’re able to figure out how to properly hack any of the most difficult missions on this site, then you’ve definitely earned the title of “hacker”.

If you’re looking to kick start a career in white-hat cybersecurity, Cybrary is a great resource. Here, you’ll find hundreds of free courses covering areas like Microsoft Server security, doing security assessments, penetration testing, and a collection of CompTIA courses as well.

The site includes forums, practice labs, educational resources, and even a job board. Whether you’re just starting to consider a cybersecurity career, or you’re already in the middle of one, this site is a good one to bookmark.

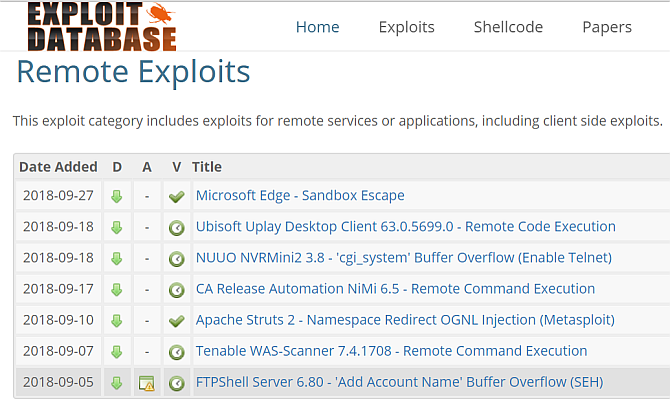

Whether you’re a white hat or a black hat hacker, the Exploit Database is an important tool in any hacker’s toolbelt.

It’s frequently updated with the latest exploits affecting applications, web services, and more. If you’re looking to learn more about how past hacks worked and were patched, the Papers section of the site is for you.

This area includes downloads of magazines that cover many of the biggest exploits to ever hit the world in the past decade.

Learning How to Be a Hacker

More industries continue to gravitate toward a cloud-based approach. The world keeps moving more of its critical data to the internet. This means the world of hacking and counter-hacking is only going to grow.

Cybersecurity is a booming field, and a good one to get into if you’re looking for a lucrative, future-proof career.

If you’re interested in the history of hacking, our list of the world’s most famous hackers and what happened to them is a fascinating read. The lesson to learn is simple. Black hat hacking may sometimes pay more, but white hat hacking ensures that you’ll stay out of trouble.

via MakeUseOf.com

The Best White Noise Machine

The LectroFan has 10 white noise settings. In this video, the lowest frequency (“dark noise”) is softer and rumbly, while the highest frequency (“white noise”) is about as loud as a garbage disposal.

We think the LectroFan by ASTI is the white noise machine you’ll want on your nightstand. Our testing showed that the LectroFan’s random, nonrepeating white noise settings allowed it to mask intruding noises as well as or better than the other machines in the group. It’s the second-smallest machine we tested, too, so you can pack it for travel in addition to using it at home. The LectroFan is also one of the easiest models to use, with a simple three-button interface to toggle among 10 random, nonrepeating white noise offerings and 30 volume settings in one of the widest volume ranges we found.

To be honest, all the machines we tried sounded more or less alike (except the Dohm DS, which had a more complex, layered sound). The LectroFan didn’t sound better than the other machines, but it was just as capable or slightly better at masking sound during our noise tests. It generates white noise electronically using algorithms, so the sounds it produces are truly random and won’t repeat, something that Michael Perlis, director of the behavioral sleep medicine program at the University of Pennsylvania School of Medicine, told me is a good feature of a white noise machine for sleep. The LectroFan’s 10 white noise settings, ranging from “dark noise” (low frequency) to “white noise” (high frequency), sounded like variations of low rumbles, rushing wind, or static—neither pleasant nor unpleasant, and definitely random and meaningless.

This video demonstrates how the LectroFan has a much higher white noise frequency than the Dohm DS, our runner-up. It’s also about half the size.

According to our sound-level tests, the LectroFan’s 30 volume settings ranged from a whisper-quiet 31 dBa to a thoroughly loud 80 dBa (about as loud as a garbage disposal). All the machines we tested measured under 85 dBa at their max setting (when we measured sound from 18 inches away). A machine that allows for fine volume control, like the LectroFan, can be at its lowest possible setting yet still block noise. By comparison, some of the other machines we tried had a narrower volume range that we found more difficult to adjust. To be clear, we didn’t notice a huge variation in the sound-blocking performance among the machines, and they were typically within a few decibels of one another for the minimum volume required to mask the offending noise.

Measuring just 4 inches in diameter and 2 inches high, the LectroFan is the second-smallest machine in our test group. It takes up little room on a nightstand, and it’s small enough to go into your luggage for travel. (It conveniently uses a USB cord and wall-power adapter, which you could swap for your USB wall charger to save more space when you’re packing.)

With its minimalist, three-button interface, we found changing noise settings and volume on the LectroFan easier than on the other white noise machines, which had more-complicated controls. The LectroFan was the only white noise machine we tested that was easy to adjust or turn off in the dark, without our needing to see or pick up the device. This model also has a 60-minute timer, a useful feature if you want to set the machine to run as you fall asleep and then turn off.

The LectroFan features 10 “fan sounds,” including “box fan,” “attic fan,” and “industrial fan.” Unless you particularly like fan sounds, we’re not sure why you would need or use these settings, so we ignored them, since the white noise settings worked better at masking sounds.

Flaws but not dealbreakers

Since the LectroFan is so small, we wish it had a built-in battery, which would be helpful for travel or if you don’t have an outlet nearby.

After more than a year of long-term testing with the LectroFan, one of our editors has found that because the buttons each share two functions, he sometimes accidentally triggers the wrong one. This sometimes results in the machine going into the timer mode, thus turning off the machine in the middle of the night, or starting different sounds that wake his kids. He’s also used our runner-up, the Marpac Dohm, and says that model is a little easier to turn on and off without accidentally starting another function.

via Wirecutter: Reviews for the Real World

Atlassian launches the new Jira Software Cloud

Atlassian previewed the next generation of its hosted Jira Software project tracking tool earlier this year. Today, it’s available to all Jira users. To build the new Jira, Atlassian both redesigned the back-end stack and rethought the user experience from the ground up. That’s not an easy change, given how important Jira has become for virtually every company that develops software, and given that it is Atlassian’s flagship product. And with this launch, Atlassian is now essentially splitting the hosted version of Jira (which is hosted on AWS) from the self-hosted server version and prioritizing different features for each.

So the new version of Jira that’s launching to all users today doesn’t just have a new, cleaner look, but more importantly, new functionality that allows for a more flexible workflow that’s less dependent on admins and gives more autonomy to teams (assuming the admins don’t turn those features off).

Because changes to such a popular tool are always going to upset at least some users, it’s worth noting at the outset that the old classic view isn’t going away. “It’s important to note that the next-gen experience will not replace our classic experience, which millions of users are happily using,” Jake Brereton, head of marketing for Jira Software Cloud, told me. “The next-gen experience and the associated project type will be available in addition to the classic projects that users have always had access to. We have no plans to remove or sunset any of the classic functionality in Jira Cloud.”

The core tenet of the redesign is that software development in 2018 is very different from the way developers worked in 2002, when Jira first launched. Interestingly enough, the acquisition of Trello also helped guide the overall design of the new Jira.

“One of the key things that guided our strategy is really bringing the simplicity of Trello and the power of Jira together,” Sean Regan, Atlassian’s head of growth for Software Teams, told me. “One of the reasons for that is that modern software development teams aren’t just developers down the hall taking requirements. In the best companies, they’re embedded with the business, where you have analysts, marketing, designers, product developers, product managers — all working together as a squad or a triad. So JIRA, it has to be simple enough for those teams to function but it has to be powerful enough to run a complex software development process.”

Unsurprisingly, the influence of Trello is most apparent in the Jira boards, where you can now drag and drop cards, add new columns with a few clicks and easily filter cards based on your current needs (without having to learn Jira’s powerful but arcane query language). Gone are the days where you had to dig into the configuration to make even the simplest of changes to a board.

As Regan noted, when Jira was first built, it was built with a single team in mind. Today, there’s a mix of teams from different departments that use it. So while a singular permissions model for all of Jira worked for one team, it doesn’t make sense anymore when the whole company uses the product. In the new Jira then, the permissions model is project-based. “So if we wanted to start a team right now and build a product, we could design our board, customize our own issues, build our own workflows — and we could do it without having to find the IT guy down the hall,” he noted.

One feature the team seems to be especially proud of is roadmaps. That’s a new feature in Jira that makes it easier for teams to see the big picture. Like with boards, it’s easy enough to change the roadmap by just dragging the different larger chunks of work (or “epics,” in Agile parlance) to a new date.

“It’s a really simple roadmap,” Brereton explained. “It’s that way by design. But the problem we’re really trying to solve here is, is to bring in any stakeholder in the business and give them one view where they can come in at any time and know that what they’re looking at is up to date. Because it’s tied to your real work, you know that what we’re looking at is up to date, which seems like a small thing, but it’s a huge thing in terms of changing the way these teams work for the positive.”

The Atlassian team also redesigned what’s maybe the most-viewed page of the service: the Jira issue. Now, issues can have attachments of any file type, for example, making it easier to work with screenshots or files from designers.

Jira now also features a number of new APIs for integrations with Bitbucket and GitHub (which launched earlier this month), as well as InVision, Slack, Gmail and Facebook for Work.

With this update, Atlassian is also increasing the user limit to 5,000 seats, and Jira now features compliance with three different ISO certifications and SOC 2 Type II.

via TechCrunch

SimpliSafe monitors the outdoors with its first video doorbell

There is already no shortage of options for smart doorbells, and now you can add SimpliSafe’s Video Doorbell Pro to the list. The device marks the security firm’s first venture into home security outside of the house.

There’s not much that makes the Video Doorbell Pro stand out in an already crowded market. The smart doorbell sports a super-wide angle camera that can scope out a 162-degree diagonal field of view. It captures footage in 1080p HD and offers two-way audio communication, which is pretty standard fare. The company claims that HDR technology allows the camera to adjust to extreme lighting situations, so you should still get a clear image whether the sun is beating down on the lens or it’s the dead of night.

SimpliSafe seems to have some additional plans for the camera. The company says sensors within the doorbell can capture human heat signatures, which it can do out of the box. In the future, it’ll supposedly be able to perform image classification that will improve motion triggering.

On the whole, there’s really not that much about SimpliSafe’s offering that gives it a leg up over the competition unless you’re already in the SimpliSafe ecosystem. The company does home security and nothing else, and that reputation counts for something. If you’re already paying SimpliSafe $15 a month for home monitoring, the $169 price tag makes the Video Doorbell Pro a reasonable purchase. Just make sure you factor in the $4.99 per month charge to record and store footage (or $10 per month for unlimited cameras).

The problem for SimpliSafe is that its competition consists of tech companies with bottomless pockets. Nest, backed by Google, has the Hello doorbell. Amazon scooped up Ring earlier this year. While Amazon hasn’t done a ton with it yet, save for a simple product refresh, it can drop its prices to chase competitors like SimpliSafe out of the market if it really wants to. SimpliSafe does have a seemingly permanent placement as an advertiser on every podcast in existence, so it has that going for it.

via Engadget

What The Second Amendment Really Means – The Founders Weigh In

“They that can give up essential liberty to obtain a little temporary safety deserve neither liberty nor safety.”

– Benjamin Franklin, Historical Review of Pennsylvania, 1759

“The supposed quietude of a good man allures the ruffian; while on the other hand, arms, like law, discourage and keep the invader and the plunderer in awe, and preserve order in the world as well as property. The balance of power is the scale of peace. The same balance would be preserved were all the world destitute of arms, for all would be alike; but since some will not, others dare not lay them aside. And while a single nation refuses to lay them down, it is proper that all should keep them up. Horrid mischief would ensue were one-half the world deprived of the use of them; for while avarice and ambition have a place in the heart of man, the weak will become a prey to the strong. The history of every age and nation establishes these truths, and facts need but little arguments when they prove themselves.”

– Thomas Paine, “Thoughts on Defensive War” in Pennsylvania Magazine, July 1775

“The laws that forbid the carrying of arms are laws of such a nature. They disarm only those who are neither inclined nor determined to commit crimes…. Such laws make things worse for the assaulted and better for the assailants; they serve rather to encourage than to prevent homicides, for an unarmed man may be attacked with greater confidence than an armed man.”

– Thomas Jefferson, Commonplace Book (quoting 18th century criminologist Cesare Beccaria), 1774-1776

“No free man shall ever be debarred the use of arms.”

– Thomas Jefferson, Virginia Constitution, Draft 1, 1776

“Guard with jealous attention the public liberty. Suspect everyone who approaches that jewel. Unfortunately, nothing will preserve it but downright force. Whenever you give up that force, you are ruined . . . The great object is that every man be armed. Everyone who is able might have a gun.”

– Patrick Henry, speech to the Virginia Ratifying Convention, June 5, 1788

“I enclose you a list of the killed, wounded, and captives of the enemy from the commencement of hostilities at Lexington in April, 1775, until November, 1777, since which there has been no event of any consequence . . . I think that upon the whole it has been about one half the number lost by them, in some instances more, but in others less. This difference is ascribed to our superiority in taking aim when we fire; every soldier in our army having been intimate with his gun from his infancy.”

– Thomas Jefferson, letter to Giovanni Fabbroni, June 8, 1778

“Necessity is the plea for every infringement of human freedom. It is the argument of tyrants; it is the creed of slaves.”

– William Pitt (the Younger), speech in the House of Commons, November 18, 1783

“A strong body makes the mind strong. As to the species of exercise, I advise the gun. While this gives moderate exercise to the body, it gives boldness, enterprise and independence to the mind. Games played with the ball, and others of that nature, are too violent for the body and stamp no character on the mind. Let your gun therefore be your constant companion of your walks.”

– Thomas Jefferson, letter to Peter Carr, August 19, 1785

“Before a standing army can rule, the people must be disarmed, as they are in almost every country in Europe. The supreme power in America cannot enforce unjust laws by the sword; because the whole body of the people are armed, and constitute a force superior to any band of regular troops.”

– Noah Webster, An Examination of the Leading Principles of the Federal Constitution, October 10, 1787

“What country can preserve its liberties if their rulers are not warned from time to time that their people preserve the spirit of resistance? Let them take arms.”

– Thomas Jefferson, letter to James Madison, December 20, 1787

“For it is a truth, which the experience of ages has attested, that the people are always most in danger when the means of injuring their rights are in the possession of those of whom they entertain the least suspicion.”

– Alexander Hamilton, Federalist No. 25, December 21, 1787

“[I]f circumstances should at any time oblige the government to form an army of any magnitude that army can never be formidable to the liberties of the people while there is a large body of citizens, little, if at all, inferior to them in discipline and the use of arms, who stand ready to defend their own rights and those of their fellow-citizens. This appears to me the only substitute that can be devised for a standing army, and the best possible security against it, if it should exist.”

– Alexander Hamilton, Federalist No. 29, January 10, 1788

“The Constitution shall never be construed to prevent the people of the United States who are peaceable citizens from keeping their own arms.”

– Samuel Adams, Massachusetts Ratifying Convention, January 9–February 5, 1788

“If the representatives of the people betray their constituents, there is then no resource left but in the exertion of that original right of self-defense which is paramount to all positive forms of government, and which against the usurpations of the national rulers, may be exerted with infinitely better prospect of success than against those of the rulers of an individual state. In a single state, if the persons intrusted with supreme power become usurpers, the different parcels, subdivisions, or districts of which it consists, having no distinct government in each, can take no regular measures for defense. The citizens must rush tumultuously to arms, without concert, without system, without resource; except in their courage and despair.”

– Alexander Hamilton, Federalist No. 28, December 26, 1787

“A militia when properly formed are in fact the people themselves . . . and include, according to the past and general usage of the states, all men capable of bearing arms. . . To preserve liberty, it is essential that the whole body of the people always possess arms, and be taught alike, especially when young, how to use them.”

– Richard Henry Lee, Federal Farmer No. 18, January 25, 1788

“Besides the advantage of being armed, which the Americans possess over the people of almost every other nation, the existence of subordinate governments, to which the people are attached, and by which the militia officers are appointed, forms a barrier against the enterprises of ambition, more insurmountable than any which a simple government of any form can admit of.”

– James Madison, Federalist No. 46, January 29, 1788

“[T]he ultimate authority, wherever the derivative may be found, resides in the people alone . . .”

– James Madison, Federalist No. 46, January 29, 1788

“I ask who are the militia? They consist now of the whole people, except a few public officers.”

– George Mason, address to the Virginia Ratifying Convention, June 4, 1788

“To disarm the people . . . [i]s the most effectual way to enslave them.”

– George Mason, referencing advice given to the British Parliament by Pennsylvania governor Sir William Keith, The Debates in the Several State Conventions on the Adoption of the Federal Constitution, June 14, 1788

“The right of the people to keep and bear arms shall not be infringed. A well-regulated militia, composed of the body of the people, trained to arms, is the best and most natural defense of a free country.”

– James Madison, I Annals of Congress 434, June 8, 1789

“What, Sir, is the use of a militia? It is to prevent the establishment of a standing army, the bane of liberty . . . Whenever Governments mean to invade the rights and liberties of the people, they always attempt to destroy the militia, in order to raise an army upon their ruins.”

– Rep. Elbridge Gerry of Massachusetts, I Annals of Congress 750, August 17, 1789

“As civil rulers, not having their duty to the people before them, may attempt to tyrannize, and as the military forces which must be occasionally raised to defend our country, might pervert their power to the injury of their fellow citizens, the people are confirmed by the article in their right to keep and bear their private arms.”

– Tench Coxe, Philadelphia Federal Gazette, June 18, 1789

“A free people ought not only to be armed, but disciplined…”

– George Washington, First Annual Address, to both Houses of Congress, January 8, 1790

“This may be considered as the true palladium of liberty…. The right of self-defense is the first law of nature: in most governments it has been the study of rulers to confine this right within the narrowest limits possible. Wherever standing armies are kept up, and the right of the people to keep and bear arms is, under any color or pretext whatsoever, prohibited, liberty, if not already annihilated, is on the brink of destruction.”

– St. George Tucker, Blackstone’s Commentaries on the Laws of England, 1803

“On every occasion [of Constitutional interpretation] let us carry ourselves back to the time when the Constitution was adopted, recollect the spirit manifested in the debates, and instead of trying [to force] what meaning may be squeezed out of the text, or invented against it, [instead let us] conform to the probable one in which it was passed.”

– Thomas Jefferson, letter to William Johnson, 12 June 1823

“The constitutions of most of our states (and of the United States) assert that all power is inherent in the people; that they may exercise it by themselves; that it is their right and duty to be at all times armed.”

– Thomas Jefferson, letter to John Cartwright, 5 June 1824

“The right of the citizens to keep and bear arms has justly been considered, as the palladium of the liberties of a republic; since it offers a strong moral check against the usurpation and arbitrary power of rulers; and will generally, even if these are successful in the first instance, enable the people to resist and triumph over them.”

– Joseph Story, Commentaries on the Constitution of the United States, 1833

Lorence (Larry) Trick, MD, is a retired orthopedic surgeon and an avid upland bird hunter and clays shooter.

This post was originally published at drgo.us and is reprinted here with permission.

via The Truth About Guns

What The Second Amendment Really Means – The Founders Weigh In

The Best Food Dehydrator

Dehydrators are deceptively simple machines, involving little more than a low-wattage heating element, a fan, and open racks that let air flow throughout. But some models perform much better than others (and price isn’t necessarily an indicator of performance). To find the best dehydrator, we considered these factors:

Even drying

The best dehydrators are ones that evenly dry food without requiring you to rotate or rearrange the trays much, if at all. The amount of work and attention you have to invest throughout the drying process depends on how well your dehydrator circulates air. We found that the best results came from round vertical-flow dehydrators with the motor at the base. This makes sense because heat rises, and a bottom-mounted motor pushes hot air where it naturally wants to go (up, it wants to go up). Top-mounted dehydrators struggle to push heated air down to the lower trays and require more attention and rotation.

In general, horizontal-flow dehydrators dry unevenly and require you to rotate the trays throughout the process. Jeff Wilker, engineering and QA manager at The Metal Ware Corporation (Nesco’s parent company), told us that the hard right-angled corners on these box-shaped models don’t promote even airflow. The result: dead spots of stagnant air, usually in the corners.

How often you have to rotate the trays depends on a few factors, the most important being what you’re drying and the dehydrator itself. The best horizontal-flow dehydrators have bigger fans that move more air, which means less tray rotation—once or twice during the entire process versus every hour. But the best horizontal-flow dehydrator still doesn’t dry food as evenly as a round vertical-flow model with a base-mounted motor.

Size and capacity

It takes a lot of time to prep and then dry your own food, and the results shrink to just a fraction of the weight you started with. So you want to be able to dehydrate a lot at once. But that doesn’t mean you have to deal with a giant space hog. We prefer dehydrators that strike a good balance of bulk and drying volume. This boils down to smart design. For example, we tested two round, vertical-flow dehydrators that had 15-inch-diameter trays—but one offered 1 square foot of drying area per tray while the other had only three-fourths of a square foot per tray. That’s because the latter model had a wider gap around the perimeter of its trays that took up valuable usable space.
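The tray comparison above can be checked with a little geometry. The sketch below is illustrative only: the 15-inch diameter and the 1 and three-fourths square foot figures come from the testing described above, while the function names and the 3-square-foot batch size are assumptions made up for the example.

```python
import math

SQ_IN_PER_SQ_FT = 144.0


def full_disc_area_sq_ft(diameter_in: float) -> float:
    """Area of a solid circular tray of the given diameter, in square feet."""
    radius_in = diameter_in / 2.0
    return math.pi * radius_in ** 2 / SQ_IN_PER_SQ_FT


def usable_fraction(usable_sq_ft: float, diameter_in: float) -> float:
    """Share of the tray's footprint that is actually available for food."""
    return usable_sq_ft / full_disc_area_sq_ft(diameter_in)


def trays_needed(batch_sq_ft: float, usable_sq_ft_per_tray: float) -> int:
    """Whole trays required to spread out a batch in a single layer."""
    return math.ceil(batch_sq_ft / usable_sq_ft_per_tray)


if __name__ == "__main__":
    diameter = 15.0  # inches, from the two models compared above
    for usable in (1.0, 0.75):  # square feet of drying area per tray
        share = usable_fraction(usable, diameter)
        trays = trays_needed(3.0, usable)  # 3 sq ft batch is a made-up example
        print(f"{usable} sq ft per tray: {share:.0%} of footprint, {trays} trays")
```

A solid 15-inch disc covers roughly 1.23 square feet, so neither tray uses its full footprint, and the model with the wider perimeter gap needs an extra tray for the same hypothetical batch.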

Accessories

Extra pieces like fine-mesh mats and fruit-roll trays are handy accessories for your dehydrator. Fine-mesh mats keep small items like herbs from falling through the trays as they dry. And fruit-roll trays aren’t just for making fruit leather; they’re also handy for hikers and campers who want to dehydrate lightweight packable meals. Some companies include these accessories with the dehydrator, while others charge extra. Think about how you’ll use your dehydrator so you’ll get the most for your money.

Cleanup

The most common dehydrator trays are made from plastic and can be a bear to wash because they have lots of nooks and crannies to clean. Generally, plastic trays also aren’t safe to run through the dishwasher, because the excess heat can warp them. If you have a spacious sink, a good soak in hot, soapy water followed by a scrub with a dish brush will do the trick. If you require dishwasher-safe trays, your choices are limited to horizontal-flow dehydrators with stainless steel racks that are either included or offered at extra cost. We haven’t come across a vertical-flow dehydrator with stainless steel trays.

Automatic shutoff

Some folks might prefer a dehydrator with an automatic shutoff feature. But we found that dehydrating times varied by batch, so this feature is useful only if you have a lot of experience dehydrating or will be away from the machine for a very long time. Otherwise, if the machine cuts off before the food is adequately dried, you run the risk of mold growth or spoilage. There’s really no such thing as overdrying from a food preservation standpoint. But snacks—such as jerky and fruit leather—are much more enjoyable when they’re still a bit pliable and chewy, and not yet dried to a crisp.

Temperature settings

Different types of foods have their own sweet spot when it comes to drying temperature. We found that most dehydrators have six to seven temperature settings, ranging from 90 to 160 degrees Fahrenheit, which is enough flexibility to dry most foods. Although you need not worry about the exact temperature inside your dehydrator, you should stay within the correct range for the type of food you’re drying. For example, you shouldn’t dehydrate fruits and vegetables above 140 degrees, because you’ll run the risk of case hardening (when the surface dries too quickly and prevents moisture in the center from escaping).

Low temperatures—90 to 100 degrees Fahrenheit—are best for delicate herbs and flowers. Nuts also dehydrate best in this zone because hotter temperatures can cause the oils to go rancid. Most vegetables and fruits dry between 125 and 140 degrees. Meat and fish require the highest temperature setting on your dehydrator, which is usually around 160 degrees.
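As a quick reference, the ranges above can be written down as a small lookup table. This is only a sketch: the temperatures are the ones quoted in this guide, but the category names, the function name, and the choice to treat "around 160 degrees" as a single target value are assumptions for illustration, not settings from any particular dehydrator.

```python
# Drying ranges in degrees Fahrenheit, as (low, high), taken from the text above.
# Category keys are hypothetical labels chosen for this example.
DRYING_RANGES_F = {
    "herbs_flowers_nuts": (90, 100),
    "fruits_vegetables": (125, 140),
    "meat_fish": (160, 160),  # assumption: "around 160" modeled as one target
}


def is_setting_ok(category: str, setting_f: int) -> bool:
    """Return True if the dial setting falls inside the range for the category."""
    low, high = DRYING_RANGES_F[category]
    return low <= setting_f <= high


if __name__ == "__main__":
    print(is_setting_ok("fruits_vegetables", 135))  # inside 125 to 140
    print(is_setting_ok("fruits_vegetables", 150))  # too hot: risks case hardening
```

Because exact temperatures matter less than staying in the right zone, a coarse range check like this is closer to how the dial is actually used than a per-degree target would be.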

Dehydrators with digital control panels and dual-stage capabilities (the option to start at a higher temperature for an hour to speed up the drying process) look modern and sound useful, but we found that neither of these features is worth the extra cost. Some digital dehydrators let you set the temperature to the exact degree, but that kind of precision isn’t necessary for successful and even drying. And we found that dual-stage drying doesn’t shave more than an hour off the total time. When you’re drying something for 10 hours, that time savings is a drop in the bucket.

Noise

All dehydrators use a fan, so if you’re sensitive to the sound of a room fan on high speed droning on for hours on end, consider the noise factor. Running the dehydrator in your garage or laundry room with the door closed is an easy fix. But folks with limited square footage might not have that option. You can opt for a quieter dehydrator (one of our picks is pretty quiet), or you can put yours in the location farthest from your bedroom door and dehydrate at night.

via Wirecutter: Reviews for the Real World
