https://static1.makeuseofimages.com/wordpress/wp-content/uploads/2021/05/remove-duplicate-liens.png

Have you ever come across text files with repeated lines and duplicate words? Maybe you regularly work with command output and want to filter those for distinct strings. When it comes to text files and the removal of redundant data in Linux, the uniq command is your best bet.

In this article, we will discuss the uniq command in-depth, along with a detailed guide on how to use the command to remove duplicate lines from a text file.

What Is the uniq Command?

The uniq command in Linux is used to display identical lines in a text file. This command can be helpful if you want to remove duplicate words or strings from a text file. Since the uniq command matches adjacent lines for finding redundant copies, it only works with sorted text files.

Luckily, you can pipe the sort command with uniq to organize the text file in a way that is compatible with the command. Apart from displaying repeated lines, the uniq command can also count the occurrence of duplicate lines in a text file.

How to Use the uniq Command

There are various options and flags that you can use with uniq. Some of them are basic and perform simple operations such as printing repeated lines, while others are for advanced users who frequently work with text files on Linux.

Basic Syntax

The basic syntax of the uniq command is:

uniq option input output…where option is the flag used to invoke specific methods of the command, input is the text file for processing, and output is the path of the file that will store the output.

The output argument is optional and can be skipped. If a user doesn’t specify the input file, uniq takes data from the standard output as the input. This allows a user to pipe uniq with other Linux commands.

Example Text File

We’ll be using the text file duplicate.txt as the input for the command.

127.0.0.1 TCP

127.0.0.1 UDP

Do catch this

DO CATCH THIS

Don't match this

Don't catch this

This is a text file.

This is a text file.

THIS IS A TEXT FILE.

Unique lines are really rare.

Note that we have already sorted this text file using the sort command. If you are working with some other text file, you can sort it using the following command:

sort filename.txt > sorted.txtRemove Duplicate Lines

The most basic use of uniq is to remove repeated strings from the input and print unique output.

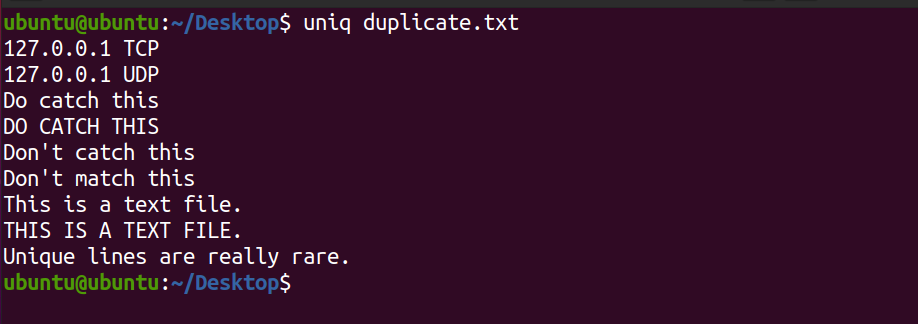

uniq duplicate.txtOutput:

Notice that the system doesn’t display the second occurrence of the line This is a text file. Also, the aforementioned command only prints the unique lines in the file and doesn’t affect the content of the original text file.

Count Repeated Lines

To output the number of repeated lines in a text file, use the -c flag with the default command.

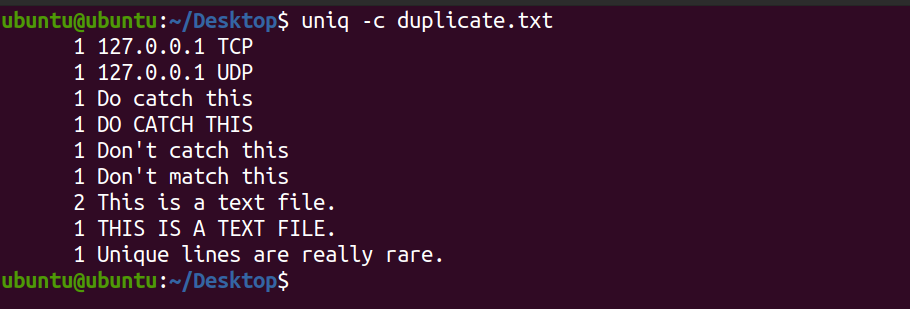

uniq -c duplicate.txtOutput:

The system displays the count of each line that exists in the text file. You can see that the line This is a text file occurs two times in the file. By default, the uniq command is case-sensitive.

Print Only Repeated Lines

To only print duplicate lines from the text file, use the -D flag. The -D stands for Duplicate.

uniq -D duplicate.txtThe system will display output as follows.

This is a text file.

This is a text file.Skip Fields While Checking for Duplicates

If you want to skip a certain number of fields while matching the strings, you can use the -f flag with the command. The -f stands for Field.

Consider the following text file fields.txt.

192.168.0.1 TCP

127.0.0.1 TCP

354.231.1.1 TCP

Linux FS

Windows FS

macOS FSTo skip the first field:

uniq -f 1 fields.txtOutput:

192.168.0.1 TCP

Linux FSThe aforementioned command skipped the first field (the IP addresses and OS names) and matched the second word (TCP and FS). Then, it displayed the first occurrence of each match as the output.

Ignore Characters When Comparing

Like skipping fields, you can skip characters as well. The -s flag allows you to specify the number of characters to skip while matching duplicate lines. This feature helps when the data you are working with is in the form of a list as follows:

1. First

2. Second

3. Second

4. Second

5. Third

6. Third

7. Fourth

8. FifthTo ignore the first two characters (the list numberings) in the file list.txt:

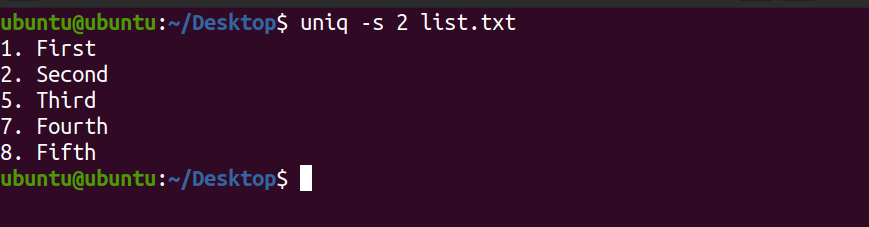

uniq -s 2 list.txtOutput:

In the output above, the first two characters were ignored and the rest of them were matched for unique lines.

Check First N Number of Characters for Duplicates

The -w flag allows you to check only a fixed number of characters for duplicates. For example:

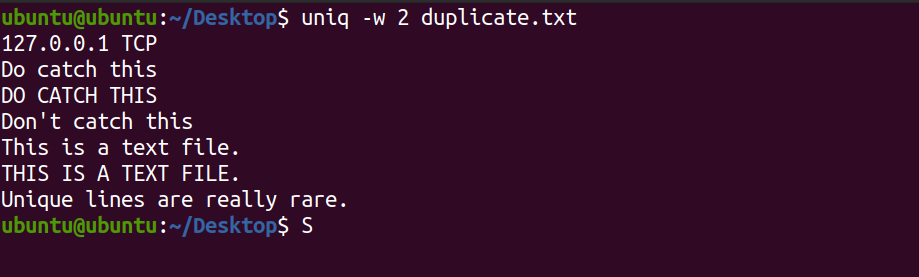

uniq -w 2 duplicate.txtThe aforementioned command will only match the first two characters and will print unique lines if any.

Output:

Remove Case Sensitivity

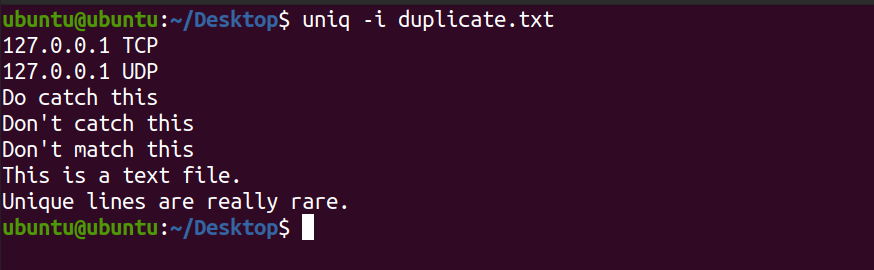

As mentioned above, uniq is case-sensitive while matching lines in a file. To ignore the character case, use the -i option with the command.

uniq -i duplicate.txtYou will see the following output.

Notice in the output above, uniq did not display the lines DO CATCH THIS and THIS IS A TEXT FILE.

Send Output to a File

To send the output of the uniq command to a file, you can use the Output Redirection (>) character as follows:

uniq -i duplicate.txt > otherfile.txtWhile sending an output to a text file, the system doesn’t display the output of the command. You can check the content of the new file using the cat command.

cat otherfile.txtYou can also use other ways to send command line output to a file in Linux.

Analyzing Duplicate Data With uniq

Most of the time while managing Linux servers, you will be either working on the terminal or editing text files. Therefore, knowing how to remove redundant copies of lines in a text file can be a great asset to your Linux skill set.

Working with text files can be frustrating if you don’t know how to filter and sort text in a file. To make your work easier, Linux has several text editing commands such as sed and awk that allow you to work efficiently with text files and command-line outputs.

MUO – Feed