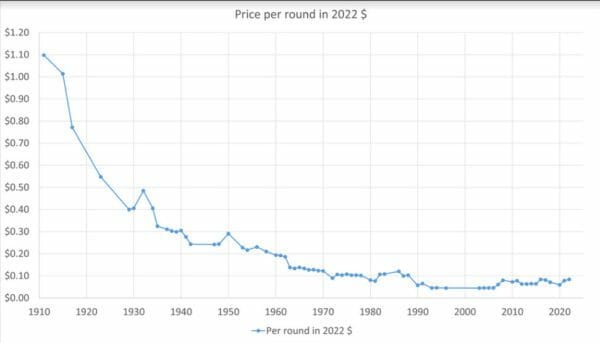

Man, it’s been almost two years now, and ammo prices still suck. A lot of us have chewed through our stash and are faced with paying higher prices or seeking training alternatives.

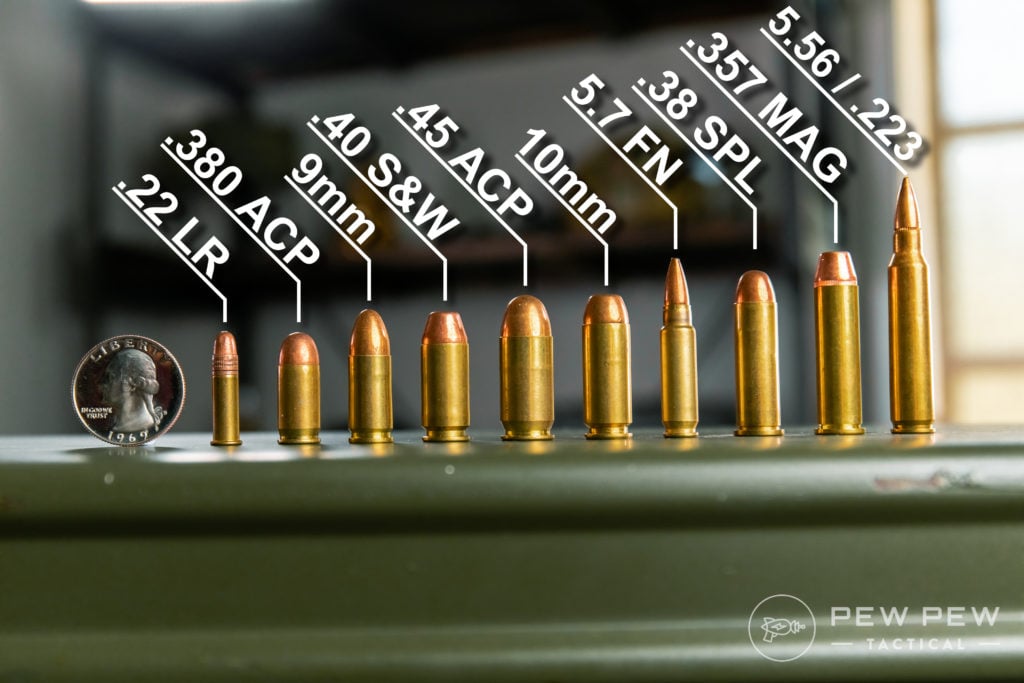

I mean, no one expects us to just stop shooting, right? Well, maybe we can turn to the old classic, .22 LR. While the price of .22 LR has risen, it hasn’t reached the crazy levels of 9mm and 5.56.

With that in mind, I’ve started searching out some of the better options for .22 LR trainers. These guns can replicate the feel and handling of a larger caliber firearm and offer you some training value without emptying your wallet.

Best .22 LR Training Handguns

1. FN 502

The FN 502 hit the ground running and is part of FN’s 500 series pistols. It shrinks things down to rimfire size and goes from a striker-fired gun to a hammer-fired gun.

The hammer-fired action is single action only, so it’s roughly similar to a striker-fired trigger. It fits most FN 509 holsters and matches the look and feel of the FN 509, and more broadly, it replicates the feel of most modern striker-fired pistols.

There isn’t much difference between models, and I believe the FN 502 could stand in for the Glock, S&W, Walther, etc.

One of the more interesting features is the weapon’s optics compatibility. Toss on whatever optic you want, and now you can effectively replicate a modern MRDS-equipped handgun.

The sights are suppressor height sights, and the barrel is also threaded.

Prices accurate at time of writing

All in all, it’s one of the best rimfire handguns on the market. It comes with a flush 10-round magazine and an extended 15-round magazine.

This is perfect for training for defensive handgun use. It also works well when it comes to some competition training.

Set up a cardboard Steel Challenge course, and you can work your way through it with ease. The FN 502 allows you to work on your red dot presentation, your draw, and trigger control.

You can read more about the FN 502 in our full review here.

2. Smith & Wesson 317 Kit Gun

Every little boy and girl needs a .22 LR revolver. Seriously, the S&W 317 Kit gun might be the best to double as both a training .22 LR and a practical gun. It’s also just a ton of fun.

This revolver is a double-action model with an exposed hammer, and it sits on a rather compact frame.

Shooters get a nice 3-inch barrel and the 317 packs eight rounds of the little .22 LR. It’s a handy little gun that uses the J-frame, but don’t mistake it for a snub nose.

At 11.7 ounces, it’s not tough for younger shooters to handle.

Cocking the exposed hammer for single-action fire makes it a sweet shooter, especially when you factor in the Hi-Viz front sight and adjustable rear sight.

The Kit gun is perfect for learning how to use a modern revolver. Its trigger design allows you to train for both a standard exposed hammer and an enclosed hammer. And the gun uses a modern swing-out cylinder.

Heck, they even make speed loaders for it.

The 317 Kit gun gives you a handy little gun for hunting, fishing, and other tasks where you might want a portable pest remover. This gun is plenty robust and perfect for general-purpose outdoor use, plus it’s a lot of fun.

3. Tikka T1x

The Tikka T1x brings the training rimfire rifle to a bolt-action design. Like all Tikka firearms, the T1x is a fantastic example of what a bolt gun should be.

Shooters get a well-built rifle with a medium contour barrel, a 10-round magazine, and a very smooth bolt-action system.

The modular stock allows you to swap pistol grips at various angles, and you can attach a wider forend at the front of the gun for a better grip.

Tikka utilizes a crossover-style barrel that offers the stability and accuracy of a heavy barrel without being unbalanced and heavy.

For making those accurate shots, the barrel is cold hammer-forged and threaded, and ready for a suppressor.

The Tikka T1x provides a rimfire stand-in for the already awesome Tikka T3x rifles. Tikka uses the same bedding surfaces and inlay footprint as the centerfire T3x.

Besides being a stand-in for the T3x, it’s also just a great stand-in for any bolt action rifle.

Bolt-action rifles are known for their accuracy, and the Tikka T1x is plenty accurate. It might not provide the range of a centerfire rifle; however, with a reduced-sized target and some good glass, you can work your accuracy skills well.

Beyond that, the Tikka T1x is just a great general-purpose training tool for new shooters.

As a bolt-action, shooters aren’t tempted to rapid-fire. New shooters can safely and easily learn accuracy fundamentals and gun safety.

4. Smith & Wesson M&P 15-22

Lots of .22 LR AR rifles exist, but it’s tough to find a more affordable option that matches the look, feel, and controls of a real AR-15 better than the M&P 15-22.

S&W also makes various models with handguard options including quad rails and M-LOK rails. The magazines are as close as you get to the real thing and hold up to 25 rounds.

The S&W M&P 15-22 keeps the price point low by using hefty amounts of polymer in its design. It’s clearly not as heavy as an actual AR-15 but tries its best to match the real thing.

The gun uses a blowback action, which you come to expect from .22 LR rifles.

The presence of a last-round bolt hold open keeps reloads true to a real AR-15, and it’s a great feature to have for training purposes.

You can toss on a cheaper red dot and even a light to replicate your actual gun at a lower price. An S&W M&P 15-22 makes your AR-15 training efficient and cheap.

On top of that, the S&W M&P 15-22 can be used at indoor ranges, which often prohibit the use of rifle rounds, but allow the humble .22 LR. Plus, it’s also a lot of fun to shoot and perfect for plinking cans and squirrel hunting.

5. Taurus TX22

The Taurus TX22 might be my favorite .22 LR pistol on the market.

The striker-fired, polymer-frame wonder nine is the generic of generic pistols, and the Taurus TX22 replicates that generic pistol well enough to be a training pistol for nearly anything.

A shooter can easily use a TX22 to stand in for their Glock, their FN, their SIG, or beyond.

Its simple controls make using the TX22 in place of any other gun easy. The TX22 also found a way to fit 16 rounds in a flush-fitting magazine, which brings the capacity up to a realistic count.

With a rock-solid price point, the TX22 provides a great training pistol that not only replicates your basic 9mm handgun but makes generalized training easy.

The TX22 is well suited for new shooters learning the art of the handgun without the recoil of a 9mm. It’s much cheaper to train with, and the TX22 has proven itself to be reliable and frustration-free.

Taurus’ latest model incorporates a red dot, but it’s a little less traditional. However, you can still train that valuable red dot presentation and learn to effectively use a red dot.

Plus, the TX22 comes in at a fantastic price.

6. Henry Classic .22 LR Lever Gun

If you are a fan of the classics and use a lever gun, you know the ammo is expensive. Lever guns don’t come in 9mm-friendly price points. .44 Mag, .45 Colt, .357 Mag, and more cost a pretty penny.

If you shoot SASS, then you are familiar with the high cost of ammunition. The Henry Classic Lever-Action .22 LR brings you all the fun of a lever gun without the cost.

It’s perfect for learning to run the lever gun fast and hard. Working that smooth action and sending a pile of .22 LR downrange is both a ton of fun and a big challenge. Pair it with a shot timer, and you will both effectively train and have the time of your life.

The Classic lever gun doesn’t implement some crazy manual safeties or other features that detract from the classic lever gun.

The only downside is that it’s tube-loaded, and it doesn’t utilize a side gate. Side gates are tough with .22 LR because the projectiles don’t take pressure well without bending, which will cause feeding issues.

Luckily, even with this slight issue, the Henry Classic lever gun gives you all the feeling and joy of a standard lever gun without the high price of lever gun ammo.

Need more Henry? Check out our list of Henry rifles you might want to know.

7. CZ Kadet Kits

The CZ Kadet kits are not firearms. Instead, they are conversion kits for CZ series pistols.

Five exist, and they allow you to outfit your CZ 75, your P09, your P07, your SP01, and your CZ Shadow with a new complete slide and .22 LR magazines.

These kits vary in price but offer an affordable way to convert the gun you already have to a .22 LR pistol.

The Kadet kits are drop-in designs and make the conversion absolutely painless. Heck, it’s easy enough to swap at the range and warm up with .22 LR and start training with 9mm.

If you’ve already joined the CZ master race, why not turn your 9mm into a .22 LR for training purposes? CZ firearms are well represented in the fields of defensive use and competition, both of which require a ton of practice to become proficient.

Tossing a Kadet Kit on your favorite CZ allows you to use the same trigger, controls, and everything else, but with a different slide and maybe different sights.

Hammer-fired CZs are a natural and easy conversion to .22 LR. The kits will pay for themselves quite quickly.

8. CMMG .22 LR AR Conversion Kit

The CMMG .22 LR AR Conversion Kit is exactly what it sounds like. This drop-in bolt conversion kit allows you to take any 5.56 caliber AR-15 and chamber it in .22 LR.

It’s one of the most affordable ways to train with rimfire in your AR-15 rifle.

It’s a drop-in kit that utilizes an integral everything, including a buffer. The magazines are proprietary, 25-round magazines that work almost flawlessly. They tend to prefer jacketed ammunition over bare lead.

This kit allows you to use your optic, your handguard, your stock, your trigger, etc.

Drop it in, and go!

There are a few downsides, mostly that the zero won’t be the same as your 5.56, and the .22 LR through a 5.56 barrel won’t be the most accurate thing.

Mine shoots about 4 MOA with good ammunition. That’s still good enough to train, especially at close range.

It’s an affordable option that’s tough to beat, and the magazines are cheap and perfect for working reloads and getting in your training.

Final Thoughts

.22 LR training firearms offer you the opportunity to utilize much cheaper ammunition to build and maintain skills.

The industry has acknowledged this and has provided us with a rock-solid selection of awesome firearms perfect for both training and plinking.

I’ve picked my favorites, but what are yours? Let me know in the comments below. Check out the Best .22 LR Ammo to feed your training gun.

The post Best .22 LR Training Guns [Rifles, Handguns & Conversion Kits] appeared first on Pew Pew Tactical.
