https://media.notthebee.com/articles/648c8c87d755b648c8c87d755c.jpg

This is one of the most frightening videos I have ever seen.

Not the Bee

https://media.notthebee.com/articles/648cb2fc3ae39648cb2fc3ae3a.jpg

Nothing here but power and majesty.

Not the Bee

https://theawesomer.com/photos/2023/06/colliding_bullets_smarter_every_day_t.jpg

Inspired by a pair of Civil War-era bullets that collided and fused together, Destin from Smarter Every Day wanted to see if he could replicate the unlikely situation on camera. It took an impressive amount of planning and engineering to set up the shot and perform the experiment in a safe and precise way.

The Awesomer

Creating generated hash columns in MySQL for faster strict equality lookups.

Planet MySQL

https://laravelnews.s3.amazonaws.com/images/working-with-third-party-services-in-laravel.png

So a little over two years ago, I wrote a tutorial on how you should work with third-party services in Laravel. To this day, it is the most visited page on my website. However, things have changed over the last two years, and I decided to approach this topic again.

I have been working with third-party services for so long that I cannot remember when I wasn’t. As a junior developer, I integrated APIs into other platforms like Joomla, Magento, and WordPress. Now I mainly integrate them into my Laravel applications to extend business logic by leaning on other services.

This tutorial will describe how I typically approach integrating with an API today. If you have read my previous tutorial, keep reading as a few things have changed – for what I consider good reasons.

Let’s start with an API. We need an API to integrate with. My original tutorial was integrating with PingPing, an excellent uptime monitoring solution from the Laravel community. However, I want to try a different API this time.

For this tutorial, we will use the Planetscale API. Planetscale is an incredible database service I use to get my read-and-write operations closer to my users in the day job.

What will our integration do? Imagine we have an application that allows us to manage our infrastructure. Our servers run through Laravel Forge, and our database is over on Planetscale. There is no clean way to manage this workflow, so we created our own. For this, we need an integration or two.

Initially, I used to keep my integrations under app/Services; however, as my applications have gotten more extensive and complicated, I have needed to use the Services namespace for internal services, leading to a polluted namespace. I have moved my integrations to app/Http/Integrations. This makes sense and is a trick I picked up from Saloon by Sam Carré.

Now I could use Saloon for my API integration, but I wanted to explain how I do it without a package. If you need an API integration in 2023, I highly recommend using Saloon. It is beyond amazing!

So, let’s start by creating a directory for our integration. You can use the following bash command:

mkdir -p app/Http/Integrations/Planetscale

Once we have the Planetscale directory, we need to create a way to connect to it. Another naming convention I picked up off of the Saloon library is to look at these base classes as connectors – as their purpose is to allow you to connect to a specific API or third party.

Create a new class called PlanetscaleConnector in the app/Http/Integrations/Planetscale directory, and we can flesh out what this class needs, which will be a lot of fun.

We must register this class with our container so that it can be resolved, or build a facade around it. We could register it the “long” way in a service provider, but my latest approach is to have these connectors register themselves, kind of …

declare(strict_types=1);

namespace App\Http\Integrations\Planetscale;

use Illuminate\Contracts\Foundation\Application;

use Illuminate\Http\Client\PendingRequest;

use Illuminate\Support\Facades\Http;

final readonly class PlanetscaleConnector

{

public function __construct(

private PendingRequest $request,

) {}

public static function register(Application $app): void

{

$app->bind(

abstract: PlanetscaleConnector::class,

concrete: fn () => new PlanetscaleConnector(

request: Http::baseUrl(

url: '',

)->timeout(

seconds: 15,

)->withHeaders(

headers: [],

)->asJson()->acceptJson(),

),

);

}

}

So the idea here is that all the information about how this class is registered into the container lives within the class itself. All the service provider needs to do is call the static register method on the class! This has saved me so much time when integrating with many APIs because I don’t have to hunt for the provider and find the correct binding, amongst many others. I go to the class in question, which is all in front of me.
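To make the self-registering idea concrete outside the framework, here is a minimal sketch in plain PHP. TinyContainer and ExampleConnector are hypothetical stand-ins (not Laravel classes, and not the connector from this tutorial); the point is only that the binding lives next to the class it builds, so the provider needs a single call.

```php
<?php

declare(strict_types=1);

// Hypothetical stand-in for Laravel's container: stores factories by class name.
final class TinyContainer
{
    /** @var array<string, callable> */
    private array $bindings = [];

    public function bind(string $abstract, callable $concrete): void
    {
        $this->bindings[$abstract] = $concrete;
    }

    public function make(string $abstract): object
    {
        // Build a fresh instance on every call, like a non-singleton binding.
        return ($this->bindings[$abstract])();
    }
}

// Hypothetical connector that carries its own registration logic.
final class ExampleConnector
{
    public function __construct(
        public readonly string $baseUrl,
        public readonly int $timeout,
    ) {}

    public static function register(TinyContainer $app): void
    {
        $app->bind(
            ExampleConnector::class,
            fn () => new ExampleConnector(
                baseUrl: 'https://api.example.test/v1', // made-up URL
                timeout: 15,
            ),
        );
    }
}

// What a service provider's register() method would boil down to:
$app = new TinyContainer();
ExampleConnector::register($app);

$connector = $app->make(ExampleConnector::class);
echo $connector->baseUrl, PHP_EOL;
```

In a real application the provider would pass Laravel's own container instead of the stand-in.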

You will notice that currently, we are passing an empty base URL and no headers to the request. Let’s fix that next. You can get the service ID and token from your Planetscale account.

Create the following records in your .env file.

PLANETSCALE_SERVICE_ID="your-service-id-goes-here"

PLANETSCALE_SERVICE_TOKEN="your-token-goes-here"

PLANETSCALE_URL="https://api.planetscale.com/v1"

Next, these need to be pulled into the application’s configuration. These all belong in config/services.php as this is where third-party services are typically configured.

return [

// the rest of your services config

'planetscale' => [

'id' => env('PLANETSCALE_SERVICE_ID'),

'token' => env('PLANETSCALE_SERVICE_TOKEN'),

'url' => env('PLANETSCALE_URL'),

],

];

Now we can use these in our PlanetscaleConnector under the register method.

declare(strict_types=1);

namespace App\Http\Integrations\Planetscale;

use Illuminate\Contracts\Foundation\Application;

use Illuminate\Http\Client\PendingRequest;

use Illuminate\Support\Facades\Http;

final readonly class PlanetscaleConnector

{

public function __construct(

private PendingRequest $request,

) {}

public static function register(Application $app): void

{

$app->bind(

abstract: PlanetscaleConnector::class,

concrete: fn () => new PlanetscaleConnector(

request: Http::baseUrl(

url: config('services.planetscale.url'),

)->timeout(

seconds: 15,

)->withHeaders(

headers: [

'Authorization' => config('services.planetscale.id') . ':' . config('services.planetscale.token'),

],

)->asJson()->acceptJson(),

),

);

}

}

You need to send tokens over to Planetscale in the following format: service-id:service-token, so we cannot use the default withToken method as it doesn’t allow us to customize it how we need to.
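As a small illustration of that format, the header value is just the two configuration values joined by a colon. The helper name and the sample credentials below are hypothetical; in the connector the values come from config('services.planetscale.*').

```php
<?php

declare(strict_types=1);

// Hypothetical helper: builds the "service-id:service-token" header value.
function planetscaleAuthorization(string $serviceId, string $serviceToken): string
{
    return $serviceId . ':' . $serviceToken;
}

// Made-up credentials, for illustration only.
$headers = [
    'Authorization' => planetscaleAuthorization('my-service-id', 'my-service-token'),
];

echo $headers['Authorization'], PHP_EOL;
```

By default, withToken would prefix the value with "Bearer ", which is why the header is set by hand here.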

Now that we have a basic class created, we can start to think about the extent of our integration. We must do this when creating our service token to add the correct permissions. In our application, we want to be able to do the following:

List databases.

List database regions.

List database backups.

Create database backup.

Delete database backup.

So, we can look at grouping these into two categories:

Databases.

Backups.

Let’s add two new methods to our connector to create what we need:

declare(strict_types=1);

namespace App\Http\Integrations\Planetscale;

use App\Http\Integrations\Planetscale\Resources\BackupResource;

use App\Http\Integrations\Planetscale\Resources\DatabaseResource;

use Illuminate\Contracts\Foundation\Application;

use Illuminate\Http\Client\PendingRequest;

use Illuminate\Support\Facades\Http;

final readonly class PlanetscaleConnector

{

public function __construct(

private PendingRequest $request,

) {}

public function databases(): DatabaseResource

{

return new DatabaseResource(

connector: $this,

);

}

public function backups(): BackupResource

{

return new BackupResource(

connector: $this,

);

}

public static function register(Application $app): void

{

$app->bind(

abstract: PlanetscaleConnector::class,

concrete: fn () => new PlanetscaleConnector(

request: Http::baseUrl(

url: config('services.planetscale.url'),

)->timeout(

seconds: 15,

)->withHeaders(

headers: [

'Authorization' => config('services.planetscale.id') . ':' . config('services.planetscale.token'),

],

)->asJson()->acceptJson(),

),

);

}

}

As you can see, we created two new methods, databases and backups. These will return new resource classes, passing through the connector. The logic can now be implemented in the resource classes, but we must add another method to our connector later.

<?php

declare(strict_types=1);

namespace App\Http\Integrations\Planetscale\Resources;

use App\Http\Integrations\Planetscale\PlanetscaleConnector;

final readonly class DatabaseResource

{

public function __construct(

private PlanetscaleConnector $connector,

) {}

public function list()

{

//

}

public function regions()

{

//

}

}

This is our DatabaseResource; we have now stubbed out the methods we want to implement. You can do the same thing for the BackupResource. It will look somewhat similar.

The database listing can return paginated results. However, I will not deal with pagination here; I would lean on Saloon for this, as its implementation for paginated results is fantastic. Before we fill out the DatabaseResource, we need to add one more method to the PlanetscaleConnector to send the requests nicely. For this, I am using my package called juststeveking/http-helpers, which has an enum for all the typical HTTP methods I use.

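// Requires these imports at the top of PlanetscaleConnector:

// use Illuminate\Http\Client\Response;

// use JustSteveKing\HttpHelpers\Enums\Method;

public function send(Method $method, string $uri, array $options = []): Response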

{

return $this->request->send(

method: $method->value,

url: $uri,

options: $options,

)->throw();

}

Now we can go back to our DatabaseResource and start filling in the logic for the list method.

declare(strict_types=1);

namespace App\Http\Integrations\Planetscale\Resources;

use App\Http\Integrations\Planetscale\PlanetscaleConnector;

use Illuminate\Support\Collection;

use JustSteveKing\HttpHelpers\Enums\Method;

use Throwable;

final readonly class DatabaseResource

{

public function __construct(

private PlanetscaleConnector $connector,

) {}

public function list(string $organization): Collection

{

try {

$response = $this->connector->send(

method: Method::GET,

uri: "/organizations/{$organization}/databases"

);

} catch (Throwable $exception) {

throw $exception;

}

return $response->collect('data');

}

public function regions()

{

//

}

}

Our list method accepts an organization parameter that identifies the organization whose databases we want to list. We then use this to send a request to a specific URL through the connector. Wrapping this in a try-catch statement gives us a place to handle potential exceptions from the connector’s send method; here we simply rethrow them. Finally, we return a collection from the method to work with in our application.

We can go into more detail with this request, as we can start mapping the data from arrays to something more contextually useful using DTOs. I have written about this before, so I won’t repeat it here.
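For readers who have not seen that approach, a minimal sketch of mapping response arrays to a DTO might look like the following. The Database class, its field names, and the sample payload are all assumptions for illustration; they are not taken from the Planetscale API documentation.

```php
<?php

declare(strict_types=1);

// Hypothetical DTO; the field names are assumptions for illustration.
final class Database
{
    public function __construct(
        public readonly string $id,
        public readonly string $name,
        public readonly string $region,
    ) {}

    /** @param array<string, mixed> $data */
    public static function fromArray(array $data): self
    {
        return new self(
            id: (string) $data['id'],
            name: (string) $data['name'],
            // Fall back to a placeholder when the key is missing.
            region: (string) ($data['region'] ?? 'unknown'),
        );
    }
}

// Made-up payload shaped like the "data" key of a list response.
$payload = [
    ['id' => 'db_1', 'name' => 'app-production', 'region' => 'us-east'],
    ['id' => 'db_2', 'name' => 'app-staging'],
];

$databases = array_map(
    fn (array $row): Database => Database::fromArray($row),
    $payload,
);

echo $databases[0]->name, PHP_EOL;
```

Inside the resource, the same mapping could be applied to the collection returned by the list method.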

Let’s quickly look at the BackupResource to look at more than just a get request.

declare(strict_types=1);

namespace App\Http\Integrations\Planetscale\Resources;

use App\Http\Integrations\Planetscale\Entities\CreateBackup;

use App\Http\Integrations\Planetscale\PlanetscaleConnector;

use JustSteveKing\HttpHelpers\Enums\Method;

use Throwable;

final readonly class BackupResource

{

public function __construct(

private PlanetscaleConnector $connector,

) {}

public function create(CreateBackup $entity): array

{

try {

$response = $this->connector->send(

method: Method::POST,

uri: "/organizations/{$entity->organization}/databases/{$entity->database}/branches/{$entity->branch}/backups",

options: $entity->toRequestBody(),

);

} catch (Throwable $exception) {

throw $exception;

}

return $response->json('data');

}

}

Our create method accepts an entity class, which I use to pass data through the application where needed. This is useful when the URL needs a set of parameters and we need to send a request body through.
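The CreateBackup entity itself is not shown above, so here is one possible shape for it, as a sketch only. The organization, database, and branch properties match what the create method reads; the name property and the contents of toRequestBody are assumptions.

```php
<?php

declare(strict_types=1);

// Hypothetical shape for the CreateBackup entity; the body fields are assumptions.
final class CreateBackup
{
    public function __construct(
        public readonly string $organization,
        public readonly string $database,
        public readonly string $branch,
        public readonly string $name,
    ) {}

    /** @return array<string, string> */
    public function toRequestBody(): array
    {
        // Only body data goes here; the URL parameters stay as public properties.
        return ['name' => $this->name];
    }
}

// Made-up values, for illustration only.
$entity = new CreateBackup(
    organization: 'acme',
    database: 'app-production',
    branch: 'main',
    name: 'nightly',
);

echo json_encode($entity->toRequestBody()), PHP_EOL;
```

Keeping URL parameters and body data on one object means the resource method needs only a single argument.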

I haven’t covered testing here, but I did write a tutorial on how to test JSON:API endpoints using PestPHP here, which will have similar concepts for testing an integration like this.

I can create reliable and extendible integrations with third parties using this approach. It is split into logical parts, so I can handle the amount of logic. Typically I would have more integrations, so some of this logic can be shared and extracted into traits to inherit behavior between integrations.

Laravel News

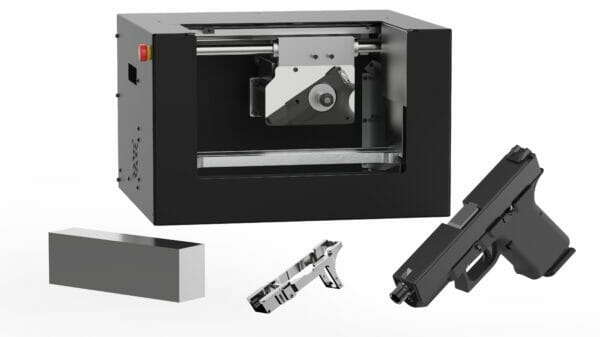

https://www.ammoland.com/wp-content/uploads/2023/06/0percentpistol-500×281.jpg

AUSTIN, Texas — In 2013, Cody Wilson printed the Liberator. The Liberator was the first 3D-printed firearm. His goal was simple. It was to make all gun control obsolete.

Wilson hoped that the gun world would embrace 3D printing and other methods of getting around gun control. Wilson’s dream came to fruition. Talented gun designers used computer-aided drawing (CAD) software to design and print firearms at home on 3D printers that cost as little as $100. At the same time, companies like Polymer80 sprang up to sell kits that let home users finish a piece of plastic into an unserialized firearm frame.

The revolution caused the Biden administration to order the Bureau of Alcohol, Tobacco, Firearms and Explosives (ATF) to make new rules to prevent the dissemination of these kits that he and other anti-gun zealots demonized as “ghost guns.” The ATF rolled out a new rule that would make it a crime to sell a frame blank with a jig, but the market adapted. This adaptation once again forced the ATF’s hand. Two days after Christmas in 2022, the ATF would give anti-gun groups a belated gift. It would unilaterally declare frame blanks firearms. Giffords, Brady, and Everytown celebrated the closing of the so-called “ghost gun loophole” and the banning of “tools of criminals.”

Their victory would be short-lived as the injunctions from Federal courts in Texas started rolling in. First, it was 80% Arms, then Wilson’s Defense Distributed, and finally, Polymer80, meaning the original kits were back on the market. Although most of the industry was now back to selling the original product, liberal states started banning the sale of unfinished firearm frames and receivers.

Defense Distributed would take these states head-on by releasing a 0% AR-15 lower receiver for the company’s Ghost Gunner, a desktop CNC machine. The 0% lower was a hit with the gun-building community. All the user had to do was mill out the middle section of the lower and attach it to a top piece and a lower portion. Even if a state were to ban 80% AR-15 lowers, it would be impossible to ban a block of aluminum, although states like California have tried to ban the Ghost Gunner itself.

All the user has to do is mill out the FCU using the Ghost Gunner 3 (aluminum) or the Ghost Gunner 3S (stainless steel) and install Gen 3 Glock parts. The user can then print the chassis on a 3D printer using the files supplied by Defense Distributed or buy a pre-printed chassis from the Ghost Gunner website and add a complete slide and barrel.

Mr. Wilson, who has faced some controversy over a relationship with a 16-year-old girl who lied about her age and claimed to be 18, recently had the case against him dropped, which frees him up to keep attacking ATF regulations.

“This is a homecoming ten years in the making,” Wilson told AmmoLand News. “The 0% pistol allows anyone to make a Glock type pistol in their own home with just a Ghost Gunner and a 3D-printer.”

This move by Defense Distributed, along with advancements in 3D printing, is the downfall of gun control. No matter what bans the government institutes, the market will adapt. With the rise of cheap 3D printers and machines like the Ghost Gunner, it has never been easier to circumvent gun control laws.

Let me make it clear, 3D-printing and CNC machining firearms are not illegal on a federal level. I also do not believe there is a will in Congress to attack that aspect of gun control because it will highlight that technology is empowering the people. The machines are available everywhere, from Amazon to Microcenter. The files live in cyberspace, where anyone can download them. Even if the files were banned (huge First Amendment legal challenge), they still would be traded anonymously on the Dark Web and by using VPN services.

The signal cannot be stopped. The internet has ushered in the fall of gun control, and there is nothing the Biden administration or states like California can do about it.

About John Crump

John is an NRA instructor and a constitutional activist. John has written about firearms and the Constitution and has interviewed people from all walks of life. John lives in Northern Virginia with his wife and sons and can be followed on Twitter at @crumpyss, or at www.crumpy.com.

AmmoLand Shooting Sports News

https://res.cloudinary.com/dwinzyahj/image/upload/v1686431555/by9pmeuxnj9rwdtuxeku.jpg

In the last post, we added the ability to list all products, search with Scout and Spatie Query Builder, and delete products. This is part 6.3 of the series on building an ecommerce website in Laravel from start to deployment.

In this post we will continue to add the ability to edit products.

Let’s dive in

Head over to Admin\ProductController and update the edit action so that Laravel returns the edit page:

/**

* Show the form for editing the specified resource.

*

* @param Product $product

* @return Renderable

*/

public function edit(Product $product)

{

$categories = Category::all();

return view('admin.products.edit', [

'product' => $product,

'categories' => $categories

]);

}

Then let’s edit the admin.products.edit view. The markup will be the same as the create view, except this time we prepopulate the fields and submit the form to the update action.

Add the following snippet to your edit view

@extends('layouts.app')

@section('title')

Edit Product

@endsection

@section('content')

<section class="section">

<div class="section-header">

<div class="section-header-back">

<a href=""

class="btn btn-icon"><i class="fas fa-arrow-left"></i></a>

</div>

<h1>

Edit Product

</h1>

<div class="section-header-breadcrumb">

<div class="breadcrumb-item active"><a href="#">Dashboard</a></div>

<div class="breadcrumb-item"><a href="">Products</a></div>

<div class="breadcrumb-item">

Edit Product

</div>

</div>

</div>

<div class="section-body">

<h2 class="section-title">

Edit Product

</h2>

<p class="section-lead">

On this page you can edit a product and fill in all fields.

</p>

<div class="container">

<div class="row">

<div class="col-12 col-md-7">

<div class="card rounded-lg">

<div class="card-header">

<h4>Basic Info</h4>

</div>

<div class="card-body">

<form class=""

action=""

method="post"

id="storeProduct">

@csrf

@method('PATCH')

<div class="form-group mb-3">

<label class="col-form-label"

for='name'>Name</label>

<input type="text"

name="name"

id='name'

class="form-control @error('name') is-invalid @enderror"

value="">

@error('name')

<div class="invalid-feedback">

</div>

@enderror

</div>

<div class="form-group mb-3">

<label for='description'

class="col-form-label">

Description

</label>

<textarea name="description"

id='description'

rows="8"

cols="80"

></textarea>

@error('description')

<div class="invalid-feedback">

</div>

@enderror

</div>

</form>

</div>

</div>

<div class="card rounded-lg">

<div class="card-header">

<h4>Media</h4>

</div>

<div class="card-body">

<div class="form-group"

data-controller="filepond"

data-filepond-process-value=""

data-filepond-restore-value=""

data-filepond-revert-value=""

data-filepond-current-value="">

<input type="file"

data-filepond-target="input">

<template data-filepond-target="template">

<input data-filepond-target="upload"

type="hidden"

name="NAME"

form="storeProduct"

value="VALUE">

</template>

</div>

</div>

</div>

<div class="card rounded-lg">

<div class="card-header">

<h4>Pricing</h4>

</div>

<div class="card-body">

<div class="row mb-3">

<div class="form-group col-md-6">

<label class="form-label"

for='price'>Price</label>

<div class="input-group">

<div class="input-group-text">

$

</div>

<input form="storeProduct"

type="text"

name="price"

id='price'

placeholder="0.00"

class="form-control @error('price') is-invalid @enderror"

value="">

@error('price')

<div class="invalid-feedback">

</div>

@enderror

</div>

</div>

<div class="form-group col-md-6">

<label class="form-label"

for='discounted_price'>Discounted price</label>

<div class="input-group">

<div class="input-group-text">

$

</div>

<input form="storeProduct"

type="text"

name="discounted_price"

id='discounted_price'

placeholder="0.00"

class="form-control @error('discounted_price') is-invalid @enderror"

value="">

@error('discounted_price')

<div class="invalid-feedback">

</div>

@enderror

</div>

</div>

</div>

<div class="form-group">

<label class="form-label"

for='cost'>Cost per item</label>

<div class="input-group">

<div class="input-group-text">

$

</div>

<input form="storeProduct"

type="text"

name="cost"

id='cost'

placeholder="0.00"

class="form-control @error('cost') is-invalid @enderror"

value="">

@error('cost')

<div class="invalid-feedback">

</div>

@enderror

</div>

<span class="text-sm text-secondary d-block mt-2">Customers won't see this</span>

</div>

</div>

</div>

<div class="card rounded-lg"

data-controller="inventory">

<div class="card-header">

<h4>Inventory</h4>

</div>

<div class="card-body">

<div class="form-group">

<label class="form-label"

for='sku'>SKU</label>

<input id='sku'

form="storeProduct"

type="text"

name="sku"

class="form-control @error('sku') is-invalid @enderror"

value="">

@error('sku')

<div class="invalid-feedback">

</div>

@enderror

</div>

<div class="form-group mb-3">

<label class="custom-switch pl-0">

<input form="storeProduct"

type="checkbox"

@checked(old('track_quantity', $product->track_quantity))

name="track_quantity"

data-action="input->inventory#toggle"

class="custom-switch-input @error('track_quantity')

is-invalid

@enderror">

<span class="custom-switch-indicator"></span>

<span class="custom-switch-description">Track quantity</span>

</label>

@error('track_quantity')

<div class="invalid-feedback">

</div>

@enderror

</div>

<div class="form-group"

data-inventory-target="checkbox">

<label class="custom-switch pl-0">

<input form="storeProduct"

@checked(old('sell_out_of_stock', $product->sell_out_of_stock))

type="checkbox"

name="sell_out_of_stock"

class="custom-switch-input @error('sell_out_of_stock')

is-invalid

@enderror">

<span class="custom-switch-indicator"></span>

<span class="custom-switch-description">Continue selling when out of stock</span>

</label>

@error('sell_out_of_stock')

<div class="invalid-feedback">

</div>

@enderror

</div>

<div class="form-group"

data-inventory-target="quantity">

<label class="form-label"

for='quantity'>Quantity</label>

<input form="storeProduct"

type="text"

name="quantity"

id='quantity'

class="form-control @error('quantity') is-invalid @enderror"

value="">

@error('quantity')

<div class="invalid-feedback">

</div>

@enderror

</div>

</div>

</div>

<div class="card rounded-lg">

<div class="card-header">

<h4>Variants</h4>

</div>

<div class="card-body">

</div>

</div>

<div class="card rounded-lg">

<div class="card-header">

<h4>Search Engine Optimization</h4>

</div>

<div class="card-body">

</div>

</div>

</div>

<div class="col-12 col-md-5">

<div class="card rounded-lg">

<div class="card-header">

<h4>Product status</h4>

</div>

<div class="card-body">

<div class="form-group">

<select form="storeProduct"

name="status"

class="form-select @error('status') is-invalid @enderror">

<option value="draft"

@selected(old('status', $product->status) == 'draft')>Draft</option>

<option value="review"

@selected(old('status', $product->status) == 'review')>Review</option>

<option value="active"

@selected(old('status', $product->status) == 'active')>Active</option>

</select>

@error('status')

<div class="invalid-feedback">

</div>

@enderror

</div>

</div>

</div>

<div class="card rounded-lg">

<div class="card-header">

<h4>Product organization</h4>

</div>

<div class="card-body">

<div class="form-group">

<label class="form-label"

for='category_id'>Category</label>

<select form="storeProduct"

name="category_id"

id='category_id'

class="form-select @error('category_id') is-invalid @enderror">

@foreach ($categories as $category)

@if ($category->id == old('category_id', $product->category->id) || strtolower($category->name) == 'default')

<option selected

value=""></option>

@else

<option value=""></option>

@endif

@endforeach

</select>

@error('category_id')

<div class="invalid-feedback">

</div>

@enderror

</div>

</div>

</div>

</div>

</div>

<div class="row">

<div class="col-12">

<div class="form-group text-right">

<input type="submit"

class="btn btn-primary btn-lg"

value="Save"

form="storeProduct">

</div>

</div>

</div>

</div>

</div>

</section>

@endsection

Next, we edit the UpdateProductRequest to add validation and authorization to the request before it reaches the controller. Update it to the following:

<?php

namespace App\Http\Requests;

use Illuminate\Foundation\Http\FormRequest;

class UpdateProductRequest extends FormRequest

{

/**

* Determine if the user is authorized to make this request.

*

* @return bool

*/

public function authorize(): bool

{

return $this->user()->can('update', $this->route('product'));

}

/**

* Prepare input for validation

*

* @return void

*/

protected function prepareForValidation(): void

{

$this->merge([

'track_quantity' =>

$this->has('track_quantity') &&

$this->input('track_quantity') == 'on',

'sell_out_of_stock' =>

$this->has('sell_out_of_stock') &&

$this->input('sell_out_of_stock') == 'on',

]);

}

/**

* Get the validation rules that apply to the request.

*

* @return array<string, mixed>

*/

public function rules(): array

{

return [

'name' => 'required|string|max:255',

'description' => 'required|string',

'sku' =>

'sometimes|nullable|string|unique:products,sku,' .

$this->route('product')->id,

'track_quantity' => 'sometimes|nullable|boolean',

'quantity' => 'required_if:track_quantity,true|nullable|int',

'sell_out_of_stock' => 'required_if:track_quantity,true|boolean',

'category_id' => 'required|int|exists:categories,id',

'price' => 'required|numeric|min:0',

'cost' => 'sometimes|nullable|numeric',

'discounted_price' => 'sometimes|nullable|numeric',

'status' => 'required|string|in:active,draft,review',

'images' => 'sometimes|nullable|array',

'images.*' => 'string',

];

}

}

In the authorize method, we check whether the user is authorized to update products; we don’t want just anyone updating products if they are not authorized.

The prepareForValidation method simply turns the checkbox inputs (“on”) into real booleans, and the rules method contains your everyday validation rules.
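The checkbox conversion can be sketched in plain PHP, outside the form request. The normalizeCheckboxes helper below is hypothetical and only mirrors the has-and-equals-"on" check; it is not part of Laravel.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical helper mirroring the merge in prepareForValidation.
 *
 * @param array<string, mixed> $input
 * @param list<string> $checkboxes
 * @return array<string, mixed>
 */
function normalizeCheckboxes(array $input, array $checkboxes): array
{
    foreach ($checkboxes as $field) {
        // Unchecked boxes are absent from the submission, so they become false.
        $input[$field] = isset($input[$field]) && $input[$field] === 'on';
    }

    return $input;
}

// Made-up submission: only track_quantity was ticked.
$normalized = normalizeCheckboxes(
    ['name' => 'T-shirt', 'track_quantity' => 'on'],
    ['track_quantity', 'sell_out_of_stock'],
);

var_dump($normalized['track_quantity'], $normalized['sell_out_of_stock']);
```

With real booleans in place, the boolean and required_if rules can work on consistent values whether or not a box was ticked.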

If validation passes, let’s perform the actual update in the Admin\ProductController.

Edit the update method and add the following snippet:

/**

* Update the specified resource in storage.

*

* @param UpdateProductRequest $request

* @param Product $product

* @return RedirectResponse

* @throws Exception

*/

public function update(UpdateProductRequest $request, Product $product)

{

$product->update($request->safe()->except(['images']));

collect($request->validated('images'))->each(function ($image) use (

$product,

) {

$product->attachMedia(new File(storage_path('app/' . $image)));

Storage::delete($image);

});

return to_route('admin.products.index')->with(

'success',

'Product was successfully updated',

);

}

First, we get the validated fields from the request, except the images key, and pass them to the update method of the product model.

If images were uploaded with this request, we loop through them and attach each one to the product.

Lastly, we redirect the user to the products index page with a toast message.

This is all we need to be able to edit products. In the upcoming tutorials, we will add the ability to create product variations such as color, size, etc.

As I was using the products section of the ecommerce website, I noticed a few issues when a user doesn’t include optional fields, which we will rectify in the next post.

To make sure you don’t miss the next post in this series, subscribe to the newsletter and get notified when it comes out.

Like before, Happy coding!

Laravel News Links

Files are everywhere in the modern world. They’re the medium in which data is digitally stored and transferred. Chances are, you’ve opened dozens, if not hundreds, of files just today! Now it’s time to read and write files with Python.

In this video course, you’ll learn how to:

Use open(), Path.open(), and the with statement

Use the csv module to manipulate CSV data

This video course is part of the Python Basics series, which accompanies Python Basics: A Practical Introduction to Python 3. You can also check out the other Python Basics courses.

Note that you’ll be using IDLE to interact with Python throughout this course. If you’re just getting started, then you might want to check out Python Basics: Setting Up Python before diving into this course.

Planet Python

https://leopoletto.com/assets/images/generate-laravel-migrations.png

One of the common challenges when migrating a legacy PHP application to Laravel is creating database migrations based on the existing database.

Depending on the size of the database, it can become an exhausting task.

I had to do it a few times, but recently I stumbled upon a database with over a hundred tables.

As programmers, we don’t have the patience to do such a task, and we shouldn’t.

The first thought is how to automate it.

With that in mind, I searched for an existing solution, found some packages, and picked one by kitloong, the Laravel migration generator package.

CREATE TABLE permissions

(

id bigint unsigned auto_increment primary key,

name varchar(255) not null,

guard_name varchar(255) not null,

created_at timestamp null,

updated_at timestamp null,

constraint permissions_name_guard_name_unique

unique (name, guard_name)

)

collate = utf8_unicode_ci;

CREATE TABLE roles

(

id bigint unsigned auto_increment primary key,

team_id bigint unsigned null,

name varchar(255) not null,

guard_name varchar(255) not null,

created_at timestamp null,

updated_at timestamp null,

constraint roles_team_id_name_guard_name_unique

unique (team_id, name, guard_name)

)

collate = utf8_unicode_ci;

CREATE TABLE role_has_permissions

(

permission_id bigint unsigned not null,

role_id bigint unsigned not null,

primary key (permission_id, role_id),

constraint role_has_permissions_permission_id_foreign

foreign key (permission_id) references permissions (id)

on delete cascade,

constraint role_has_permissions_role_id_foreign

foreign key (role_id) references roles (id)

on delete cascade

)

collate = utf8_unicode_ci;

CREATE INDEX roles_team_foreign_key_index on roles (team_id);

composer require --dev kitloong/laravel-migrations-generator

You can specify or ignore the tables you want using --tables= or --ignore= respectively.

Below is the command I ran for the tables we created above.

To run for all the tables, don’t add any additional filters.

php artisan migrate:generate --tables="roles,permissions,role_has_permissions"

Command output

Using connection: mysql

Generating migrations for: permissions,role_has_permissions,roles

Do you want to log these migrations in the migrations table? (yes/no) [yes]:

> yes

Setting up Tables and Index migrations.

Created: /var/www/html/database/migrations/2023_06_08_132125_create_permissions_table.php

Created: /var/www/html/database/migrations/2023_06_08_132125_create_role_has_permissions_table.php

Created: /var/www/html/database/migrations/2023_06_08_132125_create_roles_table.php

Setting up Views migrations.

Setting up Stored Procedures migrations.

Setting up Foreign Key migrations.

Created: /var/www/html/database/migrations/2023_06_08_132128_add_foreign_keys_to_role_has_permissions_table.php

Finished!

Permissions table: 2023_06_08_132125_create_permissions_table.php

...

Schema::create('permissions', function (Blueprint $table) {

$table->bigIncrements('id');

$table->string('name');

$table->string('guard_name');

$table->timestamps();

$table->unique(['name', 'guard_name']);

});

...

Roles table: 2023_06_08_132125_create_roles_table.php

...

Schema::create('roles', function (Blueprint $table) {

$table->bigIncrements('id');

$table->unsignedBigInteger('team_id')

->nullable()

->index('roles_team_foreign_key_index');

$table->string('name');

$table->string('guard_name');

$table->timestamps();

$table->unique(['team_id', 'name', 'guard_name']);

});

...

Pivot table: 2023_06_08_132125_create_role_has_permissions_table.php

...

Schema::create('role_has_permissions', function (Blueprint $table) {

$table->unsignedBigInteger('permission_id');

$table->unsignedBigInteger('role_id')

->index('role_has_permissions_role_id_foreign');

$table->primary(['permission_id', 'role_id']);

});

...

Add foreign key to the pivot table: 2023_06_08_132128_add_foreign_keys_to_role_has_permissions_table.php

...

Schema::table('role_has_permissions', function (Blueprint $table) {

$table->foreign(['permission_id'])

->references(['id'])

->on('permissions')

->onUpdate('NO ACTION')

->onDelete('CASCADE');

$table->foreign(['role_id'])

->references(['id'])

->on('roles')

->onUpdate('NO ACTION')

->onDelete('CASCADE');

});

...

This is just one of the challenges when migrating a legacy PHP application to Laravel.

The following post will be about password hashing algorithm incompatibility.

Laravel News Links

http://img.youtube.com/vi/zktqtTabJgk/0.jpg

Here’s a nice, short rant from Paul Chato (who I’d not heard of before) on why Disney’s social justice re-imagining of classic franchises fails: It’s not just their woeful ignorance of their own franchise, it’s the woeful ignorance of the vaster connected universe of fandom/geekdom/nerdom.

Put a bunch of us nerds together, even complete strangers, into a room, well, Heaven help you, and soon we’ll be talking about Cowboy Bebop or Akira Kurosawa, or NES, Atari, ColecoVision, Ultraman, Kirby, Adams, McFarland, Studio Ghibli, second breakfasts, Plan 9 From Outer Space, Fireball XL5, Scooby-Doo, The Day The Earth Stood Still, Matrix (only the first one), Terminator, Blade Runner, Aliens, Herge, Miller, Robert E. Howard, Harryhausen, Lasseter, good scotch. Has anyone heard Kathleen Kennedy talk about any of those things? Of course not, and I can hear you laughing.

That’s a pretty good name check list, though I’d add Robert A. Heinlein and H. P. Lovecraft (among others).

But it’s an interesting point: Social justice showrunners are woefully ignorant of vast swathes of knowledge held by the fandoms they hold in such withering contempt.

Lawrence Person’s BattleSwarm Blog