"AR-15s ARE WEAPONS OF WAR THAT MAKE ER DOCTORS CRY, JUST BUY A SHOTGUN!"

Shotguns: pic.twitter.com/7kgpIYtxop

— planefag (@planefag) June 14, 2021

The guys who get shot with shotguns go straight to the morgue.

https://s3files.core77.com/blog/images/1190988_81_109150_BgO_t6oxP.jpg

Portland-based Scott Seelye was an industrial designer for clients like Nike, InFocus and Hewlett-Packard for about twenty years before he decided to start his own thing, together with wife Jennifer. With the unique idea of producing ukuleles tough enough to withstand "backpacking, camping, traveling" and plain ol’ extreme conditions, Seelye worked with several manufacturers in an effort to produce a durable, injection-molded, glass-filled polycarbonate ukulele.

Glass-filled polycarbonate is tough stuff; the problem is, it can be a bitch to mold. Seelye spent two years looking at mold-flow computer simulations telling him his designs wouldn’t work, and struggling with manufacturers who couldn’t produce the object to his standards.

Finally Seelye discovered Sea-Lect Plastics, a manufacturer also located in Portland. "With the help and cooperation of our exceptional material and color suppliers," that company writes, "plus utilizing two brand new Milacron molding machines, we were able to meet Outdoor Ukulele’s expectations."

Today the Seelyes’ Outdoor Ukulele has been going strong for four years, and has expanded into banjoleles and guitars, and carbon fiber models in addition to the polycarbonate ones. Their models have "played in the Arctic Ocean, paddled down the Amazon River, and hiked both the Appalachian and Pacific Crest Trails," the Seelyes write.

With the body parts delivered from Sea-Lect, Scott can assemble 16 units per day, at the tidy workstation he’s designed and that you can see below:

Congratulations to the Seelyes! Check out their wares here.

Core77

https://s.yimg.com/os/creatr-uploaded-images/2021-06/83441500-cdf9-11eb-bcbf-9c2ec7aee421

As it did in 2019, Nintendo closed out its E3 2021 Direct presentation with a look at its next Legend of Zelda game. And yet again, Nintendo kept details about the project close to the chest. But what we did learn is that the company plans to release the sequel to Breath of the Wild in 2022, and that the game will expand on the open world seen in the first game to include the skies above Hyrule. We’ll likely learn more about the sequel as we get close to 2022.

Engadget

https://assets.amuniversal.com/bb40daa091b101395d45005056a9545d

salesman: i’ll need you to sign a nondisclosure agreement before i can show you our new product.

dilbert: you wasted a trip here because i won’t be doing that. the fact that you even asked me to sign an nda tells me your company is incompetent.

dilbert: i prefer giving my business to a vendor who can show me their product without getting a lawyer involved.

salesman: you could sign it without having your lawyer review it.

dilbert yelling: do i look like an idiot?

salesman holding out nda toward dilbert.

dilbert: well? do i?

salesman: only from your chin to your forehead area.

Dilbert Daily Strip

https://theawesomer.com/photos/2021/06/a_brief_history_of_the_devil_t.jpg

From his horns to his red suit to his pitchfork, we all have a pretty specific image in our minds of what The Devil looks like. In this TED-Ed video, educator and Episcopal priest Brian A. Pavlac delves into the origins of the ultimate evil dude and his various depictions over the years.

The Awesomer

https://i.kinja-img.com/gawker-media/image/upload/c_fill,f_auto,fl_progressive,g_center,h_675,pg_1,q_80,w_1200/b742175538d2532d1fdd6825bfbbc027.jpg

I have been very wary of Kevin Smith’s Masters of the Universe: Revelation for two reasons. The biggest one is that Smith kept calling it a “continuation” of the original 1983 He-Man and the Masters of the Universe cartoon, which seemed impossible because the original was completely episodic, meaning there was never any story to continue. The second reason is that Netflix originally called it an anime series, which would have been even less of a continuation (Netflix has since stopped). But watching this very, very good first trailer for the series, I think I finally get it.

Yes, this means I’ve had a revelation about Revelation. Shut up and watch:

From that opening shot of Castle Grayskull, which is pretty much picture-perfect with the original series, everything looks exactly like it did in the ‘80s series just…much better. The characters are using the same design and (reasonably) simple palettes, but they’re more detailed, more cool-looking, and more accurate representations of the original action figures—they haven’t been updated, just improved. Well, with a few exceptions that are for the best; it looks like Prince Adam is now visibly younger and smaller than He-Man, which makes his transformation much more impressive and meaningful, while He-Man has his more recent “H” symbol on his chest instead of an Iron Cross, which has always been an extremely good idea for multiple reasons. Admittedly, I’m not sure about He-Man’s new, Sailor Moon-esque transformation sequence.

But it’s hard to get too concerned when Revelation has all the action the original cartoon lacked, and then some. Back then, kids’ animation really couldn’t show graphic violence—I don’t mean gore, I mean that He-Man literally never used a sword on or punched another living being in the entirety of the original ‘80s series. The worst he would do was pick up a bad guy and toss him into a barrel or puddle of water.

As you can see, this is no longer the case. He-Man, Skeletor, and the others no longer have any problem trying to beat the hell out of each other with fists, weapons, and in Skeletor’s case, some truly badass sorcery. Hell, even Orko does something cool! Sure, Beast-Man still jumps out of the Land Shark a second before a laser blows it up, classic cartoon-style, but that’s fine! We ‘80s kids never needed the Masters of the Universe to murder each other, we just wanted He-Man to punch Skeletor in the face…and it looks like we’re going to get that in spades.

In news that hasn’t sent me into paroxysms of delight, Netflix has also announced the existence of Revelations: The Masters of the Universe Revelation Aftershow, which sounds like it’s going to be a single 25-minute special that will arrive alongside Revelation’s first five episodes. It’ll be hosted by Smith, “executive producer and Vice President, Content Creative, Mattel Television Rob David,” and actor Tiffany Smith, who plays Andra (an effectively new character for the series. It’s a long story). They’ll be joined by cast members Mark Hamill (Skeletor), Chris Wood (He-Man), Sarah Michelle Gellar (Teela), Lena Headey (Evil-Lyn), Henry Rollins (Tri-Klops), Griffin Newman (Orko), and more.

The first half of Masters of the Universe: Revelation will premiere on Netflix on July 23. Suddenly, that feels far, far away.

Gizmodo

https://www.louderwithcrowder.com/media-library/eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpbWFnZSI6Imh0dHBzOi8vYXNzZXRzLnJibC5tcy8yNjYwNjA3My9vcmlnaW4uanBnIiwiZXhwaXJlc19hdCI6MTYzMDEzMjg0OH0.mFJA7Igjv0Vd_16Do7rqERVoGDnELoHWg3UPus7NTIk/image.jpg?width=980

Parents have grown increasingly concerned with school districts implementing critical race theory into their curriculums. CRT plays off the progressive belief that anything they don’t like is racist. With CRT, they can start teaching kids at a young age that their entire existence is racism-based depending on their skin color. Videos of school boards being told to shine up their indoctrination and stick it up their arse are common. Usually, it’s from parents who think that we SHOULDN’T be teaching kids to hate each other. Today, in Florida, that parent was Gov. Ron DeSantis.

It was a meeting of the State Board as opposed to a local district. And everyone there was rather cordial. But the message from America’s Governor is clear. He believes we should be teaching FACT and not NARRATIVE. He also gives specifics of what some other school districts are trying to expose kids to.

WEB EXTRA: Gov. Ron DeSantis Addressed Florida Board Of Education

Basically using critical race theory to bring ideology and political activism to the forefront of education. … We need to be educating people, not trying to indoctrinate them. …

In upstate New York … they forced kindergartners to watch videos saying that "racist police" and "state-sanctioned violence" will kill people at any time based on race.

In Arizona, they had an equity toolkit saying that babies show racism at three months old, and white children are racist by age 5. …

[CRT] is basically teaching kids that the country is rotten and that our institutions are illegitimate. That is not worth any taxpayer dollars. It’s wrong. It’s not something that we should do.

DeSantis does one thing here that more people need to do when they speak out. He gives specific examples. If more parents started doing that rather than more broad-based attacks, it would put a lot of these school boards on defense. Let the upstate New York superintendent defend teaching children that cops will kill them. Put the Arizona teacher who is teaching kids that they’re racist before they reach first grade on blast. Leftists need to be forced to defend specifics. Otherwise, they paint criticism of CRT as criticism of teaching history. Which, as DeSantis also points out, is bullshit.

Other Republican governors in other states have banned it outright. DeSantis hasn’t done that yet. Or hasn’t had the chance to do that yet. But more important than winning a political fight over CRT, we need to win the argument. Putting the Marxist school boards on defense instead of offense is a start.

Louder With Crowder

https://media.notthebee.com/articles/1f0829f9-3a8f-4a39-8a64-c9a06820455b.jpg

Here’s your daily dose of courage:

Not the Bee

https://i.ytimg.com/vi/HKM_TAi7DLQ/maxresdefault.jpg

Create Your Own Log Files In Laravel and Know About Different Levels Of Logging

Laravel News Links

https://www.mywebtuts.com/upload/blog/barcodethumb.png

Hi Friends,

Today I will show you how to generate barcodes in Laravel 8 using the milon/barcode package. We will use it to generate simple text, numeric and image barcodes in a Laravel 8 app.

So, let’s start the example and follow all my steps.

Step 1 : Install Laravel 8 Application

In this first step we start from scratch, so we need a fresh Laravel application. Open your terminal or command prompt and run the command below:

composer create-project --prefer-dist laravel/laravel blog

Step 2 : Database Configuration

In this second step, we need to configure the database for the newly installed Laravel 8 app. Open the .env file and set the database details as follows:

Path : .env

DB_CONNECTION=mysql
DB_HOST=127.0.0.1
DB_PORT=3306
DB_DATABASE=db name
DB_USERNAME=db user name
DB_PASSWORD=db password

Step 3 : Installing milon/barcode Package

Now, in this third step, we will install the milon/barcode package with the following command. Open your command prompt and run the command below:

composer require milon/barcode

Step 4 : Configure Barcode Generator Package

Here, in this step, we will configure the milon/barcode package in the Laravel 8 app. Open the config/app.php file and register the provider and aliases for milon/barcode by adding the code below:

'providers' => [

....

Milon\Barcode\BarcodeServiceProvider::class,

],

'aliases' => [

....

'DNS1D' => Milon\Barcode\Facades\DNS1DFacade::class,

'DNS2D' => Milon\Barcode\Facades\DNS2DFacade::class,

]

Step 5 : Create Route

Here, in this step, we will create two routes in the web.php file, which is located inside the routes directory. The first route calls the index() method, which shows simple barcodes generated with the getBarcodeHTML method. The second route calls the imgbarcode() method, which shows image barcodes generated with getBarcodePNG.

Path : routes/web.php

<?php

use App\Http\Controllers\BarcodeGeneratorController;

use Illuminate\Support\Facades\Route;

/*

|--------------------------------------------------------------------------

| Web Routes

|--------------------------------------------------------------------------

|

| Here is where you can register web routes for your application. These

| routes are loaded by the RouteServiceProvider within a group which

| contains the "web" middleware group. Now create something great!

|

*/

Route::get('barcode',[BarcodeGeneratorController::class,'index'])->name('barcode');

Route::get('barcode-img',[BarcodeGeneratorController::class,'imgbarcode'])->name('barcode-img');

Step 6 : Creating BarcodeGeneratorController

In this step, I will generate the BarcodeGeneratorController file using the following command:

php artisan make:controller BarcodeGeneratorController

After the controller has been created successfully, open the BarcodeGeneratorController.php file in app/Http/Controllers and add the simple barcode generation code to it.

Path : app/Http/Controllers/BarcodeGeneratorController.php

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;

class BarcodeGeneratorController extends Controller

{

/**

* Show the page with the HTML barcodes.

*

* @return \Illuminate\Contracts\View\View

*/

public function index()

{

return view('barcode');

}

/**

* Show the page with the PNG image barcodes.

*

* @return \Illuminate\Contracts\View\View

*/

public function imgbarcode()

{

return view('img-barcode');

}

}

Step 7 : Create a Blade File

In this step we will create two Blade files. The first is barcode.blade.php, which generates different 1D and 2D barcodes using the getBarcodeHTML method, plus one getBarcodeSVG example.

Path : resources/views/barcode.blade.php

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Laravel 8 Barcode Generator Example - MyWebTuts.com</title>

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/4.5.2/css/bootstrap.min.css">

</head>

<body>

<div class="container justify-content-center d-flex">

<div class="row">

<div class="col-md-12">

<h3 class="">Laravel 8 Barcode Generator Example - MyWebTuts.com</h3>

<div>{!! DNS1D::getBarcodeHTML('0987654321', 'C39') !!}</div><br>

<div>{!! DNS1D::getBarcodeHTML('7600322437', 'POSTNET') !!}</div><br>

<div>{!! DNS1D::getBarcodeHTML('8780395141', 'PHARMA') !!}</div><br>

<div>{!! DNS2D::getBarcodeHTML('https://www.mywebtuts.com/', 'QRCODE') !!}</div><br><br>

<div>{!! DNS2D::getBarcodeSVG('https://www.mywebtuts.com/', 'DATAMATRIX') !!}</div>

</div>

</div>

</div>

</body>

</html>

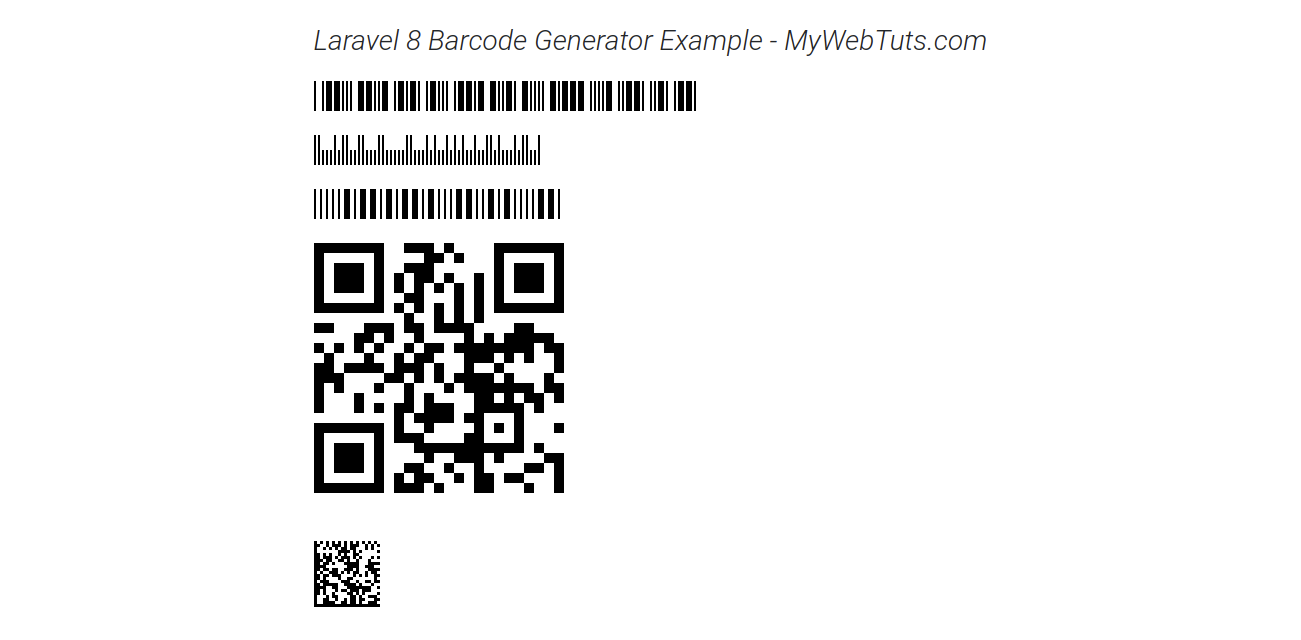

Preview

The second file, img-barcode.blade.php, generates barcodes using the getBarcodePNG method.

Path : resources/views/img-barcode.blade.php

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Laravel 8 Barcode Generator Example - MyWebTuts.com</title>

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/4.5.2/css/bootstrap.min.css">

</head>

<body>

<div class="container text-center mt-5">

<div class="row">

<div class="col-md-12">

<h3 class="mb-4">Laravel 8 Barcode Generator Example - MyWebTuts.com</h3>

<img src="data:image/png;base64," alt="barcode" /><br/><br/>

<img src="data:image/png;base64," alt="barcode" /><br/><br/>

<img src="data:image/png;base64," alt="barcode" />

</div>

</div>

</div>

</body>

</html>
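
Note that the three src attributes in the file above end right after "base64,", so as written no image data is emitted; the Blade expressions seem to have been lost. Here is a minimal sketch of how those three img tags might look, assuming getBarcodePNG returns a base64-encoded PNG string as the package README describes. The codes and barcode types below are illustrative placeholders, not the original values:

```php
{{-- Hypothetical values: the original codes and barcode types are not known. --}}
{{-- getBarcodePNG() is assumed to return base64 text, so it can be placed directly in a data URI. --}}
<img src="data:image/png;base64,{{ DNS1D::getBarcodePNG('4', 'C39+') }}" alt="barcode" /><br/><br/>

<img src="data:image/png;base64,{{ DNS1D::getBarcodePNG('12345', 'C128') }}" alt="barcode" /><br/><br/>

<img src="data:image/png;base64,{{ DNS2D::getBarcodePNG('https://www.mywebtuts.com/', 'QRCODE') }}" alt="barcode" />
```

The escaped {{ }} syntax is enough here because base64 output contains no characters that HTML escaping would change.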

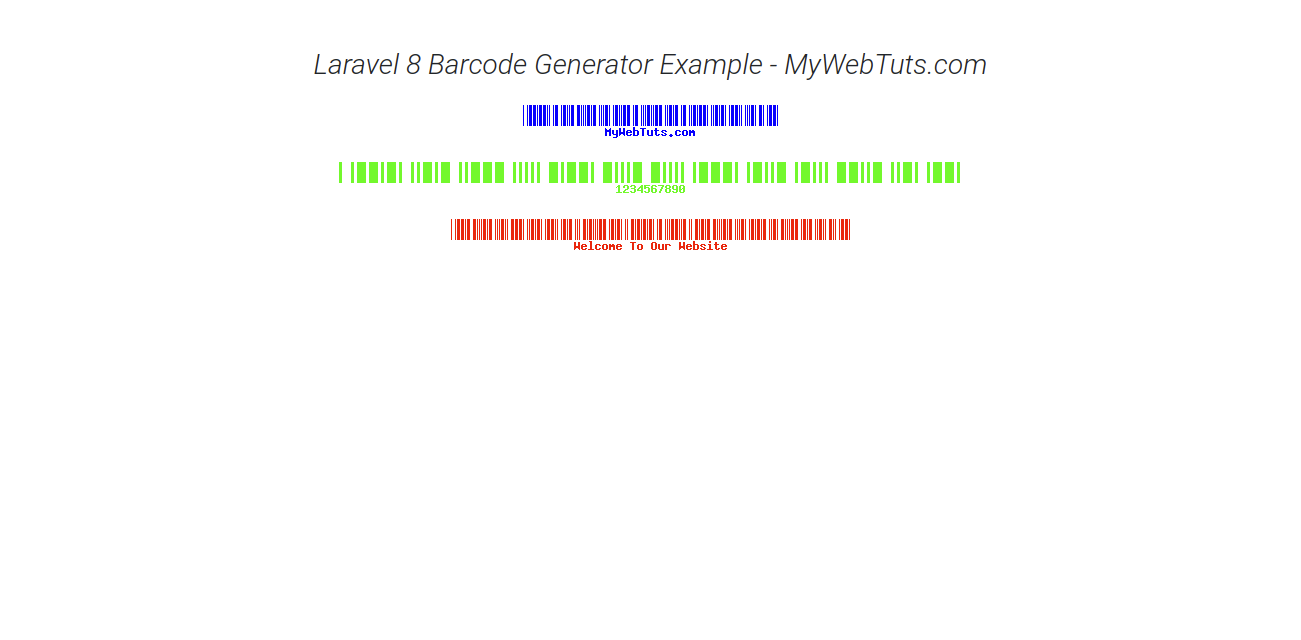

Preview

Now we are ready to run our Laravel 8 barcode example. Run the command below to start the development server:

php artisan serve

Now you can open the URLs below in your browser:

HTML barcodes : http://localhost:8000/barcode

Image barcodes : http://localhost:8000/barcode-img

I hope it helps you.

Laravel News Links