Ooni’s Koda 16 pizza oven is the rare kitchen gadget that delivers on its promise

https://ift.tt/2VHP3pV

Ooni (née Uuni) has been around for a few years now, but its latest oven, the Koda 16, launched in March. Just like everyone else, I’ve been cooped up at home for weeks with nothing but all of the projects I said I would get around to one day.

At the top of my list was learning how to make decent pizza at home (we don’t have many decent pizzaioli in my town). I’d been hearing about the Ooni oven for a while, mostly via Neven Mrgan’s great Instagram feed, so I sprang for the Koda 13″ and started firing some pies.

I was immediately enamored with the eye-popping results: chewy, crispy, well-cooked Neapolitan-style pizza within 30 minutes of taking the oven out of the box. And I’m not exaggerating. After a couple of pizza-launching disasters (this is not as easy as it looks, people), I was eating the product of my own hands and the Ooni’s 800+ degree baking surface. Though I’m not even an advanced amateur chef, I have always had somewhat of an aversion to single-use gadgets. Technique always wins, right?

The problem with that thinking is that it is essentially impossible to cook true Neapolitan pizza at home in the US because our ovens just don’t get hot enough. A ton of dough experimentation has produced a few workable New York-style pizza recipes for 500-degree ovens. But for thinner crusts there is zero substitute for that true 800-1000 degree cooking environment.

The Ooni delivers that in under 20 minutes, attached to a bog-standard propane tank. It’s brilliant.

Ooni co-founder Kristian Tapaninaho started messing around with building a decent pizza oven in 2010. He got into making home pies and realized that there was pretty much no way to do it other than building a large, expensive oven in his back yard. He began prototyping what became the company’s original oven in 2012, and he says that the original oven’s design stemmed from a super simple yet super obvious (in hindsight) design constraint: what could they ship affordably?

Due to shipping restrictions, it had to be under 10kg and had to fit in a certain footprint. Every piece of design work on the first oven stemmed from those constraints. Why, for instance, does the Ooni oven have 3 legs? Because the 4th one would have put them over the weight limit.

Within those constraints, the original oven took shape, delivering that super high-heat surface with a simple wood-fired unit that more than doubled its original funding goal on Kickstarter. Kristian and co-founder Darina Garland defined this high-heat, high-results at-home outdoor pizza oven market at scale, along with other later entrants like Roccbox.

I had a bit of a chat with Kristian about how Ooni was doing lately, with the specter of coronavirus and the new business realities that have resulted.

“This COVID-19 situation began for us in mid January as our suppliers started informing us that they were delaying return to work from Chinese New Year,” Kristian said. “At the time the worry was if we’d have enough supply for the summer which is of course peak season for us. As our supply chain was restarting, it was clear that we’d have similar lockdowns in our main markets as well. Overall, however, we started the year at a strong inventory position which helped buffer any interruptions.”

He says that Ooni was lucky given that the initial production run of the Koda 16 was already in warehouses by the time things got really hairy in Edinburgh and the surrounding areas. And the team was fairly ready for the new challenge of stay-at-home work.

“Much of our team comms already happened over Slack so the team’s been really quite well set up for working from home,” he told me. “We have great relationships with our 3rd party logistics providers and while they’ve been incredibly busy, they’ve been able to maintain a good level of service, at least in the grand scheme of things.”

Yeah, but how does it work?

Once Kristian saw that I was playing with my Koda 13, he offered to send the newly launched 16″ model over to play with. I jumped at the chance to make a bigger pie.

My experiences with the Ooni ovens so far have been nothing short of revelatory. Though I’ve pondered indoor options like the Breville Smart Oven, I knew in my heart that I wanted that brilliant taste that comes from live fire and the high heat that would let me enjoy super thin crust pizzas. I’ve now fired over three dozen pizzas in the Ooni and am coming to know it a bit better: its recovery time, rotation needs and cooking characteristics. I have never used a more enjoyable cooking utensil.

Because I know I’ll get questions about dough: I’ve tried a few recipes and have used two to good effect, Ooni’s own recommended dough (though I hydrate a bit more) and this Peter Reinhart recipe, recommended to me by Richie Nakano.

The pizzas that result are bursting with umami. The oven enables that potent combination of cheese, sauce and randomly distributed carbonization that combines into the perfect bite. Your pie goes in somewhat pedestrian — whitish dough, red sauce, hunks of fresh mozzarella — and you see it come to life right in front of your eyes. Within 60-90 seconds, you’ve transmogrified the simple ingredients into a hot endocrine rush of savory, chewy flavor.

As I mentioned before, the setup is insanely simple. Flip out the legs, put it on an outdoor surface with some support and attach a propane tank. An instant of work with the lighting knob and you’re free to step away. Fifteen minutes later, you’ve got a cooking environment to die for. The flip-down legs make the 13″ model super great for taking camping or anywhere you want to go to create your own pizza party. Ooni even sells a carrying case.

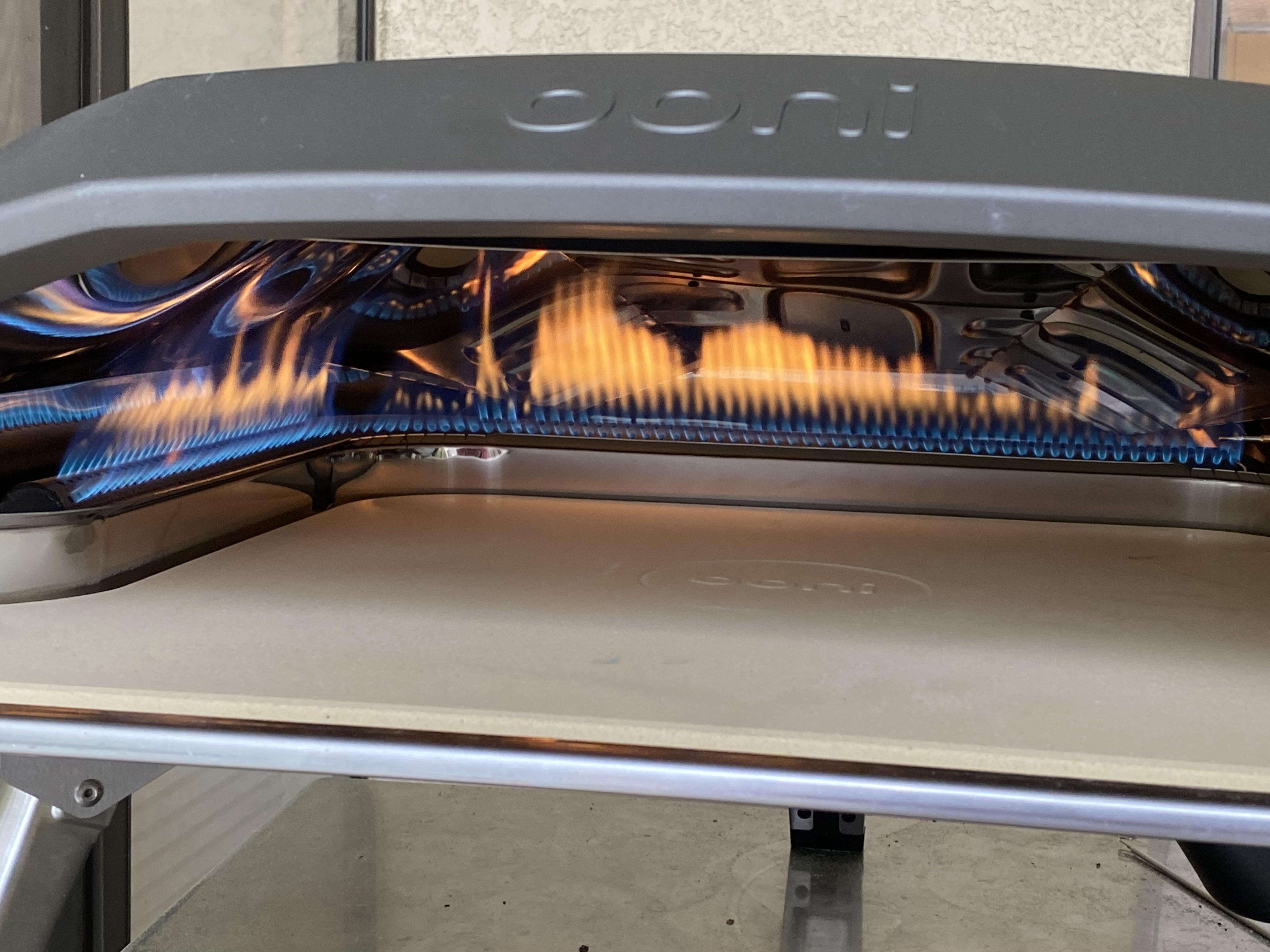

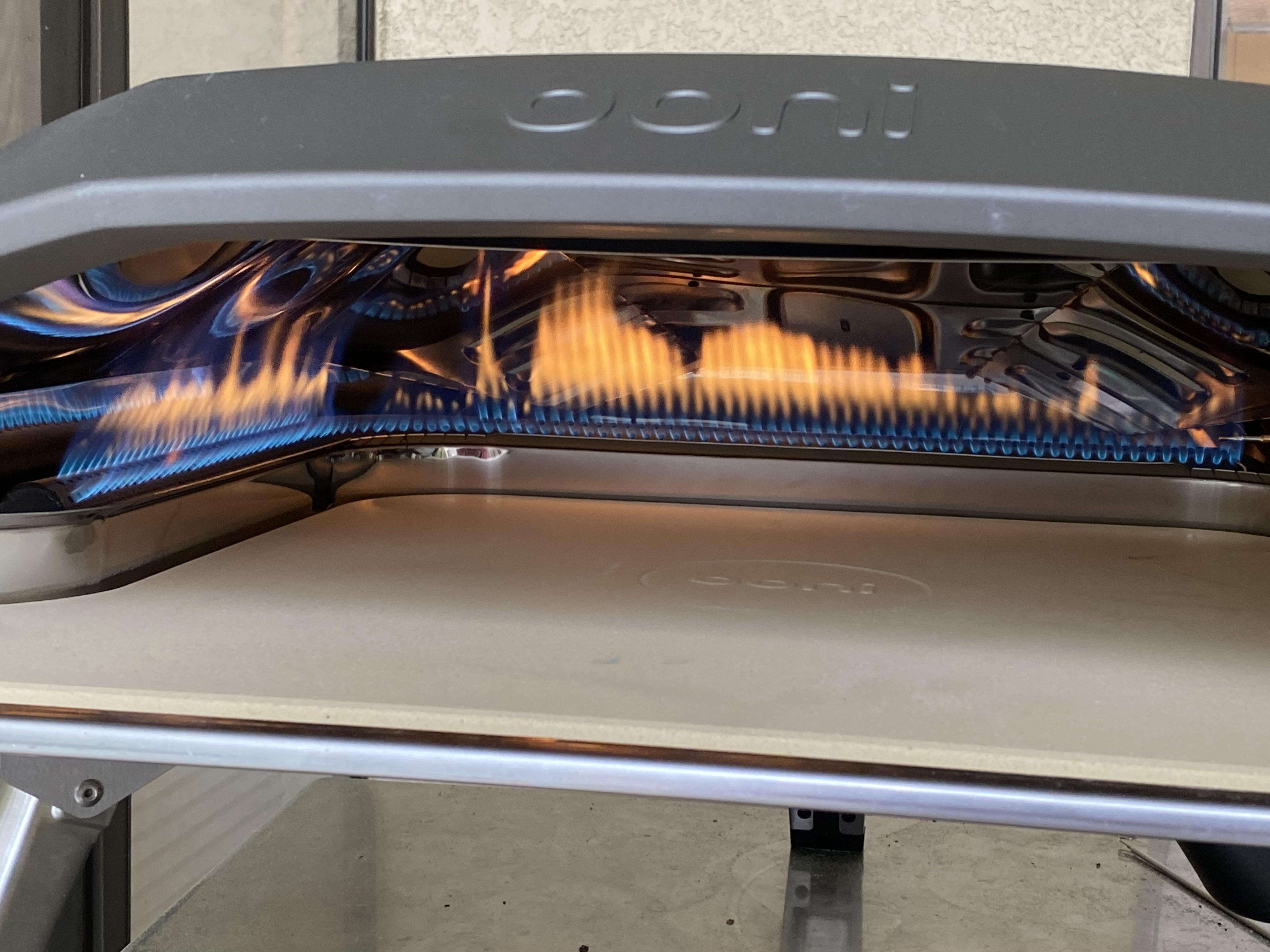

The design of the oven’s upper shell means that all of the heat is redirected inwards, letting the baking surface reach 850 degrees easily in the center, up to 1000 degrees near the back. The Koda 16 has such an incredibly roomy cooking surface that it is easy to see to the sides and around your pizza a bit to tell how the crust is rising and how the leoparding is coming along. Spinning your pie mid-cook is such an important part of this kind of oven and the bigger mouth is smashing for this.

Heck, I even cooked steak in it, to mouth-watering results.

“Our core message has always been ‘great restaurant quality pizza at home’ and while the situation is what it is, more people spending more time at home looking for great home cooking options has been strong for our online sales,” Kristian said when I asked him about whether more people were discovering Ooni now. “Pizza making is a great way to have fun family time together. It’s about those shared experiences that bring people together.”

This mirrors my experiences so far. I’m not precisely ‘good’ at this yet, but I’m plugging away and the Ooni makes even my misses delicious. This weekend I was even confident enough to hold a socially distanced pizza pick-up party. Friends and family put in their orders and I fired a dozen pies of all kinds. Though I couldn’t hug them, I could safely hand them a freshly fired pizza, and to most Italians like me, that’s probably better.

In my mind, the Ooni Koda pulls off a rare trifecta of kitchen gadgets: It retains the joy and energy of live flame, delivers completely on its core premise and still remains incredibly easy to use. Highly recommend.

via TechCrunch https://techcrunch.com

April 14, 2020 at 09:30PM