https://media.notthebee.com/articles/6981038e1a2c56981038e1a2c6.jpg

This is a real ad:

Not the Bee

Laravel News Links

https://theawesomer.com/photos/2026/01/dying_by_lightsaber_t.jpg

When people in Star Wars get killed or dismembered by a lightsaber, it’s a pretty neat, tidy, and speedy event. But the morbid Mr. Death explains what’s more likely to happen to a human struck by a 20,000°C plasma beam when taking real-world physics into account. It sounds quite awful compared to what we’ve seen on screen.

The Awesomer

https://media.notthebee.com/articles/697b85b916795697b85b916796.jpg

Rogan’s gonna Rogan!

Not the Bee

https://gizmodo.com/app/uploads/2026/01/computer-history-museum-1280×853.jpg

The Computer History Museum, based in Mountain View, California, looks like a fine way to spend an afternoon for anyone interested in, well, the history of computers. And if that description fits you but you’re not in California, then rejoice, because CHM recently launched OpenCHM, an excellent online portal designed to allow exploration of the museum from afar.

You can, of course, just click around to see what catches your eye, but if that feels too unfocused, you can also go straight to the collection highlights. As you might expect, these include a solid selection of early computers and microcomputers, along with photos, records, and other objects of historic import. Several objects predate the information age, including a Jacquard loom and a copy of The Adams Cable Codex, a fascinating 1894 book that catalogs hundreds of code words that were used to save space when sending messages via cable. Happily, there’s a full scan of the same book at the Internet Archive, because the CHM’s documentation on the latter is rather minimal.

This is the case throughout the site. In fairness, OpenCHM is still in beta, and hopefully the item descriptions will be fleshed out as the site develops—but as it stands, their terse nature means that some of the objects on show are disappointingly inscrutable. For example, it took a bit of googling to work out what on earth a klystron is, and the CHM’s description isn’t much help, noting only that “This item is mounted on a wooden base.” (For the record, a klystron is a vacuum tube amplifier that looks cool as hell.)

Still, such quibbles aside, there’s a wealth of material to explore here, and on the whole, OpenCHM makes doing so both easy and enjoyable. It provides multiple entry points to the collection. In addition to the aforementioned highlights page and a series of curated collections, there’s something called the “Discovery Wall”. This is described as “a dynamic showcase of artifacts chosen by online visitors”, and it’s certainly interesting to see what catches people’s attention. At the time of our virtual visit, items on display on the Discovery Wall included an alarmingly yellow Atari t-shirt from 1977, a Tamagotchi (in its original packaging!), a placard from the 2023 Writers’ Guild strike (“Don’t let bots write your shows!”) and a Microsoft PS/2 mouse, the mere sight of which is likely to cause shudders in anyone with memories of flipping one of these over to pull out the trackball and clean months’ worth of accumulated crud from the two little rollers inside.

Perhaps the single most poignant item we came across, however, is a copy of Ted Nelson’s self-published 1974 opus Computer Lib/Dream Machines, which promoted computer literacy and the liberation Nelson hoped it would bring. The document is strikingly forward-thinking—amongst other things, it predicted hypertext, of which Nelson was an early proponent—but the technoutopianism on display seems both charmingly innocent and painfully naïve today. “New Freedoms Through Computer Screens”, promises the rear cover. If only they knew.

Gizmodo

https://www.louderwithcrowder.com/media-library/image.jpg?id=63361689&width=980

Watch Louder with Crowder every weekday at 11:00 AM Eastern, only on Rumble Premium!

1. ICE is not law enforcement.

ICE stands for Immigration & Customs Enforcement.

ICE was established in 2003 as part of the Homeland Security Act of 2002. This act also established the Department of Homeland Security.

Section 441 of the Homeland Security Act transfers immigration enforcement functions to the Under Secretary for Border and Transportation Security. This included Border Patrol, INS, detention and removal amongst others.

Section 442 of the Homeland Security Act establishes a Bureau of Border Security, headed by an assistant secretary to the Under Secretary.

These, amongst other provisions, allowed the Department of Homeland Security to form the Bureau of Immigration and Customs Enforcement (ICE).

Congress expanded ICE’s authorities through the Intelligence Reform and Terrorism Prevention Act of 2004, the Trafficking Victims Protection Reauthorization Acts of 2003 and 2005 and the Immigration and Nationality Act, amongst others.

Not only is ICE a law enforcement agency, its jurisdictional authority and enforcement role have been constantly expanding since its creation.

ICE has the authority to arrest and detain illegal aliens under US Code Title 8 Chapter 12 Subchapter II Part IV Section 1226 and US Code Title 8 Chapter 12 Subchapter II Part IX Section 1357. They also have broader enforcement authority as sworn agents under US Code Title 18, which includes an entire chapter that defines obstruction of justice. This allows for them to arrest people, including citizens, who are impeding ICE actions and committing obstruction of justice.

Not only is ICE an arm of federal law enforcement, they are specifically tasked to enforce immigration and customs laws.

2. Immigrants commit fewer crimes.

The study that claims that immigrants commit fewer crimes was conducted by Northwestern University and is titled Law-Abiding Immigrants: The Incarceration Gap Between Immigrants and the U.S.-Born, 1870-2020.

The study, as would seem obvious, takes the data of immigrants and U.S. born citizens who are incarcerated in the American prison system.

Problem #1: The study ends in 2020.

While illegal immigration has been an increasing problem, there has been a significant increase of the undocumented crossing into America, especially during Joe Biden’s administration. In 1969, illegal immigrants made up 0.3% of the population. In 2020, Customs and Border Protection reported 646,822 enforcement actions. In 2021, they reported 1,956,519. In 2022, they recorded 2,766,582 actions. In 2023, they recorded 3,201,144 actions. In 2024, they recorded 2,901,142 actions.

We also have arrest statistics from CBP.

Every metric from the Department of Homeland Security shows significant demographic changes to the illegal immigrant population during the Biden administration, that is, after 2020. Consider, as well, that the defund the police movement coincided with the migrant surge under Joe Biden; this all adds up to a recipe for disaster.

Problem #2: The study doesn’t differentiate between illegal and legal immigrants.

Considering the restrictions and caveats to legal immigration, it would stand to reason that we aren’t allowing the criminal element to immigrate here. For example, one of the requirements to eligibility is to be a person of good moral character. There is an expectation in the vetting process for a legal immigrant that they are not the kind of person who would commit a crime.

Problem #3: Illegal immigrant crime calculations leave out crimes related to fraudulent social security numbers, fake driver’s licenses, fraudulent green cards and improperly accessing public benefits. The State Criminal Alien Assistance Program is a Bureau of Justice Assistance program that provides federal payments to states and localities that incurred correction officer salary costs for incarcerating undocumented criminal aliens. Yes, the federal government is subsidizing the incarceration of illegal aliens. SCAAP has far different numbers on illegal immigration. SCAAP’s data shows that illegals actually ARE committing more crimes. The Federation for American Immigration Reform found that illegals are twice as likely to be in prison in California and New York, four times as likely in New Jersey and almost five times more likely in Arizona.

Problem #4: A crime can only be counted if it’s reported. Illegal immigrants are less likely to report crimes and appear in court as witnesses because of the fear of deportation. As recent ICE arrests have found and history tells us, immigrants form ethnic enclaves, which means if crimes are being committed by illegal immigrants in illegal immigrant enclaves, we can assume that some of them, perhaps a much larger share than in the general population, are going unreported.

Even factcheck.org admits there aren’t nationwide statistics on all crimes committed by illegal immigrants, only estimates extracted from smaller samples.

Then again, every person who has entered the country illegally has committed a crime, making the illegal immigrant crime rate 100%. Which leads us to:

3. Crossing the border is not a crime, and no human is illegal.

You’ve heard it before: "Entering the United States is not a crime; it’s just a misdemeanor."

Okay, so, misdemeanors ARE crimes. The word ‘misdemeanor’ is a designation that refers to the seriousness of the offense. You have misdemeanors, and you have felonies. Felonies typically carry bigger punishments but are also crimes.

Improper (or illegal) entry into the US is defined in US Code Title 8 Chapter 12 Subchapter II Part VII Section 1325. The misdemeanor carries fines and prison time. Marriage fraud and entrepreneur fraud carry heftier penalties. But that’s just for the first offense.

If you have been removed and reenter, things get worse. And, depending on why you were ordered removed, the penalties can be even worse than that.

Who can be removed? Anyone who came here by illegal means, including people who have violated conditions of entry. Unlawful voters, traffickers, drug abusers…there are a lot of offenses that are deportable. Please peruse at your leisure.

As for no human being illegal… Humans can be criminals. Again. That’s how crime works. If you are committing a crime, you are subject to legal action. The word "alien" as a legal term for foreign nationals appeared in the Naturalization Act of 1790 and the Alien and Sedition Acts of 1798. The word "illegal" added on simply becomes the descriptor that it is a foreign national in the country illegally. "Illegal alien" can be found as far back as 1924, the same year the United States Border Patrol was established. The Supreme Court used the term in the 1976 case United States v. Martinez-Fuerte. Bill Clinton used the term in his 1995 State of the Union address. As the term "alien" is still used in federal statutes and regulations, the term "illegal alien" is still appropriate when referring to people who have entered and/or are in the United States illegally.

Bottom line: The United States of America is a country with laws and a border. It is illegal to cross the border in any way that the United States does not define as lawful. If it is not lawful, it is a crime. Anyone who has come to the United States of America in a way that does not follow US law has committed a crime. That’s how crime works. I don’t know why I have to explain that.

Louder With Crowder

https://blog.laragent.ai/content/images/size/w1200/2026/01/ChatGPT-Image-Jan-22–2026–03_15_35-PM.png

This major release takes LarAgent to the next level – focused on structured responses, reliable context management, richer tooling, and production-grade agent behavior.

Designed for both development teams and business applications where predictability, observability, and scalability matter.

LarAgent introduces DataModel-based structured responses, moving beyond arrays to typed, predictable output shapes you can rely on in real apps.

Example

use LarAgent\Core\Abstractions\DataModel;

use LarAgent\Attributes\Desc;

class WeatherResponse extends DataModel

{

#[Desc('Temperature in Celsius')]

public float $temperature;

#[Desc('Condition (sunny/cloudy/etc.)')]

public string $condition;

}

class WeatherAgent extends Agent

{

protected $responseSchema = WeatherResponse::class;

}

$response = WeatherAgent::ask('Weather in Tbilisi?');

echo $response->temperature;

v1.0 introduces a pluggable storage layer for chat history and context, enabling persistent, switchable, and scalable storage drivers.

class MyAgent extends Agent

{

protected $history = [

CacheStorage::class, // Primary: read first, write first

FileStorage::class, // Fallback: used if primary fails on read

];

}

Long chats are inevitable, but hitting token limits shouldn’t be catastrophic. LarAgent now provides smart context management strategies.

class MyAgent extends Agent

{

protected $enableTruncation = true;

protected $truncationThreshold = 50000;

}

👉 Save on token costs while preserving context most relevant to the current conversation.
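To make the idea of a truncation threshold concrete, here is a minimal plain-PHP sketch. It is not LarAgent's implementation: the `estimateTokens()` heuristic (roughly four characters per token), the `truncateHistory()` helper, and the message shape are all assumptions made for illustration. It simply drops the oldest non-system messages until the estimated total fits the threshold.

```php
<?php
declare(strict_types=1);

// Hypothetical helper: crude token estimate, assuming ~4 characters per token.
function estimateTokens(string $text): int
{
    return (int) ceil(strlen($text) / 4);
}

/**
 * Hypothetical helper: drop the oldest non-system messages until the
 * estimated token total is at or below $threshold.
 * Each message is ['role' => string, 'content' => string], oldest first.
 */
function truncateHistory(array $messages, int $threshold): array
{
    $total = 0;
    foreach ($messages as $message) {
        $total += estimateTokens($message['content']);
    }

    $kept = [];
    foreach ($messages as $message) {
        // While over budget, skip (drop) old messages, but never the system prompt.
        if ($total > $threshold && $message['role'] !== 'system') {
            $total -= estimateTokens($message['content']);
            continue;
        }
        $kept[] = $message;
    }

    return $kept;
}

$messages = [
    ['role' => 'system', 'content' => 'You are helpful.'],
    ['role' => 'user', 'content' => str_repeat('a', 40)],
    ['role' => 'assistant', 'content' => str_repeat('b', 40)],
    ['role' => 'user', 'content' => 'latest question?'],
];

$trimmed = truncateHistory($messages, 20);
```

Dropping from the oldest end keeps the most recent turns, which are usually the ones most relevant to the current conversation. A real strategy might summarize the dropped messages instead of discarding them.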

Context now supports identity-based sessions, which are created from the user id, chat name, agent name and group. Identity storage holds all identity keys, which makes the context of any agent available to manage via the Context facade. For example:

Context::of(MyAgent::class)

->forUser($userId)

->clearAllChats();

✔ Better support for multi-tenant SaaS, shared agents, and enterprise apps.

Generate fully-formed custom tool classes with boilerplate and IDE-friendly structure.

php artisan make:agent:tool WeatherTool

This generates a ready-to-use tool with name, description, and handle() stub. Ideal for quickly adding capabilities to your agents.

Now the CLI chat shows tool calls as they happen — invaluable when debugging agent behavior.

You: Find me Laravel queue docs

Tool call: web_search

Tool call: extract_content

Agent: Here’s the documentation…

👉 Easier debugging and more transparency into what your agent actually does

MCP (Model Context Protocol) tools now support automatic caching.

Add to .env:

MCP_TOOL_CACHE_ENABLED=true

MCP_TOOL_CACHE_TTL=3600

MCP_TOOL_CACHE_STORE=redis

Clear with:

php artisan agent:tool-clear

✔ Great for production systems where latency matters

Track prompt tokens, completion tokens, and usage stats per agent, ideal for cost analysis and billing.

$agent = MyAgent::for('user-123');

$usage = $agent->usageStorage();

$totalTokens = $usage->getTotalTokens();

Usage tracking is based on session identity – it means that you can check token usage by user, by agent and/or by chat – allowing you to implement comprehensive statistics and reporting capabilities.
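As a rough illustration of reporting by identity, the sketch below totals tokens per user, agent or chat from a flat list of usage records. The record shape and the `totalTokensBy()` helper are hypothetical and the sample numbers are made up; LarAgent's own usage storage may expose this differently.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: sum prompt + completion tokens grouped by one
 * identity dimension ('user', 'agent' or 'chat').
 */
function totalTokensBy(array $records, string $dimension): array
{
    $totals = [];
    foreach ($records as $record) {
        $key = $record[$dimension];
        $totals[$key] = ($totals[$key] ?? 0)
            + $record['prompt_tokens']
            + $record['completion_tokens'];
    }

    return $totals;
}

// Made-up sample records, for illustration only.
$records = [
    ['user' => 'user-123', 'agent' => 'MyAgent', 'chat' => 'support', 'prompt_tokens' => 100, 'completion_tokens' => 50],
    ['user' => 'user-123', 'agent' => 'WeatherAgent', 'chat' => 'weather', 'prompt_tokens' => 20, 'completion_tokens' => 10],
    ['user' => 'user-456', 'agent' => 'MyAgent', 'chat' => 'support', 'prompt_tokens' => 200, 'completion_tokens' => 80],
];

$byUser = totalTokensBy($records, 'user');
$byAgent = totalTokensBy($records, 'agent');
```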

v1.0 includes a few breaking API changes. Make sure to check the migration guide.

Production-focused improvements:

LarAgent v1.0 is all about reliability, predictability, and scale — turning AI agents into first-class citizens of your Laravel application.

Happy coding! 🚀

Laravel News Links

https://picperf.io/https://laravelnews.s3.amazonaws.com/featured-images/laravel-debugbar-v4.png

Release Date: January 23, 2025

Package Version: v4.0.0

Summary

Laravel Debugbar v4.0.0 marks a major release with package ownership transferring from barryvdh/laravel-debugbar to fruitcake/laravel-debugbar. This version brings php-debugbar 3.x support and includes several new collectors and improvements for modern Laravel applications.

This release adds a new collector that tracks HTTP client requests made through Laravel’s HTTP client. The collector provides visibility into outbound API calls, making it easier to debug external service integrations and monitor response times.

For applications using Inertia.js, the new Inertia collector tracks shared data and props passed to Inertia components. This helps debug data flow in Inertia-powered applications.

The debugbar now includes improved component detection for Livewire versions 2, 3, and 4. This provides better visibility into Livewire component lifecycle events and data updates across all currently supported Livewire versions.

This version includes better handling for Laravel Octane and other long-running server processes. The debugbar now properly manages state across requests in persistent application environments.

The cache widget now displays estimated byte usage, giving developers better insight into cache memory consumption during request processing.

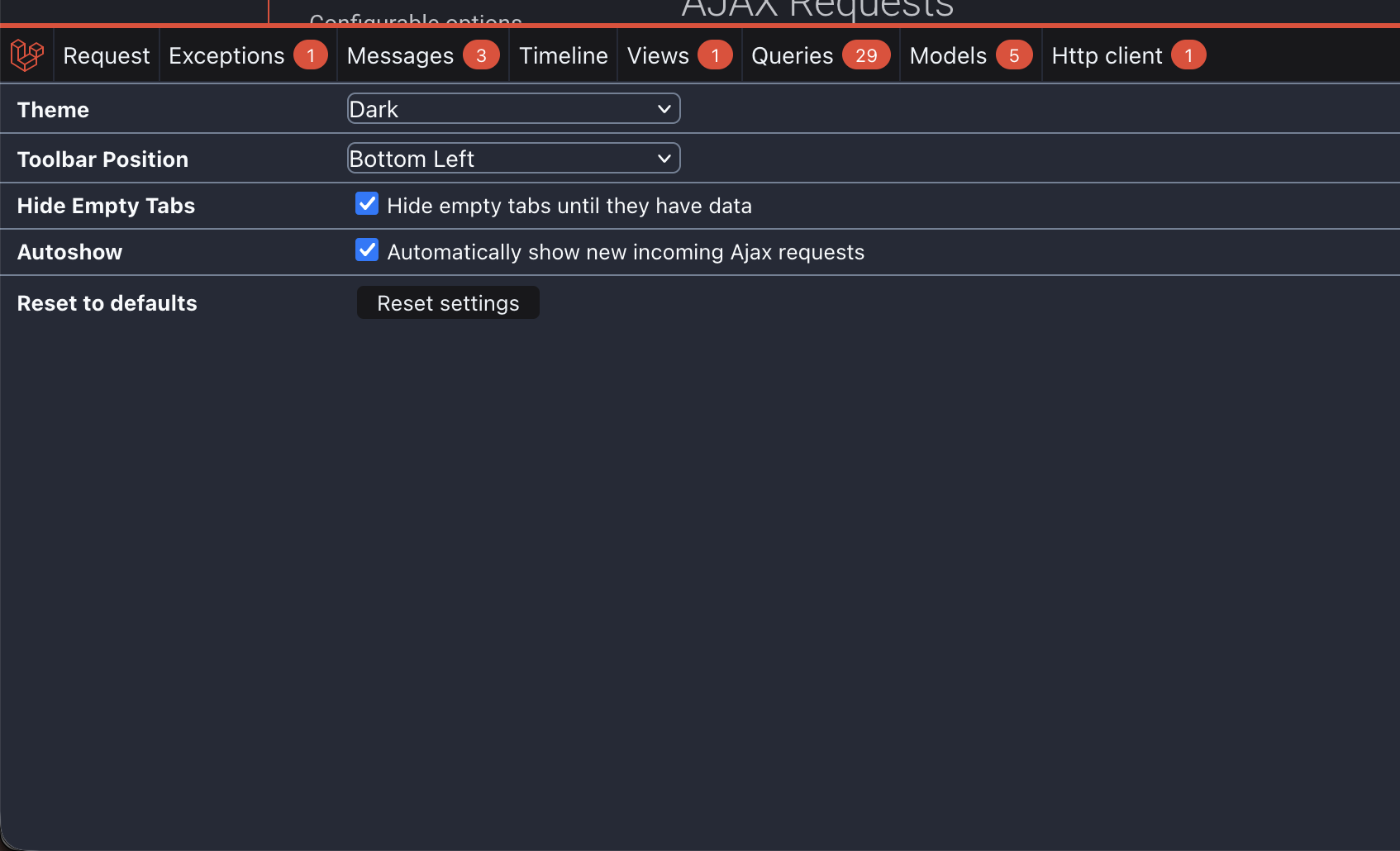

This version has many UI improvements and settings like debugbar position, auto-hiding empty collectors, themes (Dark, Light, Auto), and more:

The package has moved from barryvdh/laravel-debugbar to fruitcake/laravel-debugbar, requiring manual removal and reinstallation:

composer remove barryvdh/laravel-debugbar --dev --no-scripts

composer require fruitcake/laravel-debugbar --dev --with-dependencies

The namespace has changed from the original structure to Fruitcake\LaravelDebugbar. You’ll need to update any direct references to debugbar classes in your codebase.

Several features have been removed in this major version:

Default configuration values have been updated, and deprecated configuration options have been removed. Review your config/debugbar.php file and compare it with the published configuration from the new package.

This is not a standard upgrade. You must manually remove the old package and install the new one using the commands shown above. After installation, update any namespace references in your code from the old barryvdh namespace to Fruitcake\LaravelDebugbar.
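To locate the references that need updating, a small helper like the one below can list the lines of a source file that still mention the old namespace. This is only a sketch: `findOldNamespaceLines()` is a hypothetical name, and the `Barryvdh\Debugbar` prefix is an assumption about the old namespace that you should check against your own `use` statements.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: return [lineNumber => trimmed line] for every line
 * of $source that mentions $oldPrefix (case-insensitive).
 */
function findOldNamespaceLines(string $source, string $oldPrefix = 'Barryvdh\\Debugbar'): array
{
    $hits = [];
    foreach (preg_split('/\R/', $source) as $index => $line) {
        if (stripos($line, $oldPrefix) !== false) {
            $hits[$index + 1] = trim($line);
        }
    }

    return $hits;
}

// Example input: a file that imports the facade from the assumed old namespace.
$sample = "<?php\nuse Barryvdh\\Debugbar\\Facades\\Debugbar;\n\nDebugbar::info('x');\n";

$hits = findOldNamespaceLines($sample);
```

Running this over the files in `app/` and `config/` gives a checklist of imports to switch to `Fruitcake\LaravelDebugbar`.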

Review your configuration file for deprecated options and compare with the new defaults. The package maintains compatibility with Laravel 9.x through 12.x. See the upgrade docs for details on upgrading from 3.x to 4.x.

Laravel News

https://techversedaily.com/storage/posts/MzQOunegn6DppLlmQlHsC7Mp4l52cV55BAhrUwPY.png

Your Laravel application felt fast during development.

Pages loaded instantly. Queries returned results in milliseconds. Everything seemed under control.

Then you deployed to production.

Traffic increased. Data grew. Users started complaining: “The site feels slow.”

This is a classic Laravel problem — and no, it’s usually not caused by PHP or Blade templates.

👉 The real bottleneck is almost always the database.

In production, inefficient queries don’t just slow down a page — they compound under load, drain server resources, and quietly kill performance.

In this guide, you’ll learn how to systematically optimize Laravel databases in production using three essential tools:

Database Indexes

EXPLAIN (Query Execution Plans)

MySQL Slow Query Log

Used together, these tools turn guessing into measurable optimization.

A few uncomfortable truths:

A 1-second delay can reduce conversions by 7%

Full table scans get slower in direct proportion to table size

What works with 10,000 rows fails miserably at 1 million

Laravel doesn’t automatically fix bad queries

Your database doesn’t care how elegant your Eloquent code looks — it only cares how much work it has to do.

Optimization is about reducing work.

Without an index, MySQL must scan every row to find matching data.

Think of it like this:

Indexes turn O(n) scans into O(log n) lookups.
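The difference is easy to see by counting comparisons. In this sketch a sorted PHP array with binary search stands in for an index; MySQL actually uses B-tree structures, so treat it as an analogy rather than a model of the engine. The function names are made up for the example.

```php
<?php
declare(strict_types=1);

// Full scan: every row is inspected, because without an index the engine
// cannot know where (or how many) matches are.
function scanComparisons(array $rows, int $target): int
{
    $comparisons = 0;
    foreach ($rows as $value) {
        $comparisons++;
    }

    return $comparisons;
}

// Indexed lookup stand-in: binary search over sorted values.
function binarySearchComparisons(array $sorted, int $target): int
{
    $low = 0;
    $high = count($sorted) - 1;
    $comparisons = 0;

    while ($low <= $high) {
        $mid = intdiv($low + $high, 2);
        $comparisons++;
        if ($sorted[$mid] === $target) {
            break;
        }
        if ($sorted[$mid] < $target) {
            $low = $mid + 1;
        } else {
            $high = $mid - 1;
        }
    }

    return $comparisons;
}

$ids = range(1, 1000000);

$scan = scanComparisons($ids, 999999);
$indexed = binarySearchComparisons($ids, 999999);
```

With one million values the scan touches all one million, while the binary search needs at most 20 comparisons.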

Create indexes on columns that are:

Avoid indexing:

$orders = Order::where('user_id', $userId)->get();

If orders.user_id is not indexed, MySQL scans the entire table.

Schema::table('orders', function (Blueprint $table) {

$table->index('user_id');

});

Now MySQL can jump straight to relevant rows.

Real queries rarely filter on just one column.

$orders = Order::where('user_id', $userId)

->where('status', 'paid')

->orderBy('created_at', 'desc')

->get();

Schema::table('orders', function (Blueprint $table) {

$table->index(['user_id', 'status', 'created_at']);

});

⚠️ Index order matters

MySQL can use (user_id, status)

It cannot efficiently use (status, created_at) alone

MySQL can only use a leftmost prefix of a composite index, so put the columns you filter by equality first, followed by the column you sort or range-filter on.
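The leftmost-prefix behavior can be sketched in a few lines. This is a deliberate simplification: it only considers equality filters and ignores range conditions and optimizer features such as skip scan. `usableIndexPrefix()` is a hypothetical helper, not a MySQL or Laravel API.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: return the leading index columns a query can use,
 * given the columns it filters on with equality. Matching stops at the
 * first index column the query does not filter on.
 */
function usableIndexPrefix(array $indexColumns, array $equalityColumns): array
{
    $usable = [];
    foreach ($indexColumns as $column) {
        if (!in_array($column, $equalityColumns, true)) {
            break;
        }
        $usable[] = $column;
    }

    return $usable;
}

$index = ['user_id', 'status', 'created_at'];

$both = usableIndexPrefix($index, ['user_id', 'status']);
$skipsFirst = usableIndexPrefix($index, ['status', 'created_at']);
```

Note that the order of conditions in the WHERE clause does not matter here; what matters is which columns are filtered and where they sit in the index definition.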

❌ Indexing every column

❌ Guessing instead of measuring

❌ Ignoring write performance

❌ Indexing low-cardinality fields alone

Indexes speed up reads but slow down writes. Balance is key.

Writing a query doesn’t mean MySQL executes it the way you expect.

EXPLAIN shows the truth.

$plan = DB::select(

'EXPLAIN SELECT * FROM orders WHERE user_id = ?',

[$userId]

);

dd($plan);

EXPLAIN SELECT * FROM orders WHERE user_id = 10;

type (Scan Method)

key

The index actually used

NULL = no index used ❌

rows

Estimated rows scanned

Smaller is always better

Extra

Using filesort → Slow sorting

Using temporary → Temp table created

Using index → Index-only query (excellent)

EXPLAIN SELECT * FROM products WHERE category_id = 5;

Result:

type = ALL

key = NULL

rows = 600000

🚨 MySQL scanned the entire table.

CREATE INDEX idx_category_id ON products (category_id);

EXPLAIN SELECT * FROM products WHERE category_id = 5;

Result:

type = ref

key = idx_category_id

rows = 120

✔ Massive improvement with zero code changes.
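If you check plans often, the warning signs above can be turned into a small checker. The sketch below takes one EXPLAIN row as an associative array and lists what looks suspicious. `explainWarnings()` is a hypothetical helper; rows returned by `DB::select()` are objects, so cast them with `(array)` before passing them in.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: flag common warning signs in one EXPLAIN row.
 * Expects the standard column names: type, key, rows, Extra.
 */
function explainWarnings(array $row): array
{
    $warnings = [];

    if (($row['type'] ?? null) === 'ALL') {
        $warnings[] = 'full table scan';
    }
    if (($row['key'] ?? null) === null) {
        $warnings[] = 'no index used';
    }

    $extra = (string) ($row['Extra'] ?? '');
    if (strpos($extra, 'Using filesort') !== false) {
        $warnings[] = 'filesort';
    }
    if (strpos($extra, 'Using temporary') !== false) {
        $warnings[] = 'temporary table';
    }

    return $warnings;
}

// The two plans from the products example, before and after adding the index.
$before = ['type' => 'ALL', 'key' => null, 'rows' => 600000];
$after = ['type' => 'ref', 'key' => 'idx_category_id', 'rows' => 120];

$beforeWarnings = explainWarnings($before);
$afterWarnings = explainWarnings($after);
```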

Some performance issues only appear in production.

That’s where Slow Query Log shines.

It records queries that exceed a time threshold.

Think of it as a black box recorder for your database.

SET GLOBAL slow_query_log = 1;

SET GLOBAL long_query_time = 1;

SET GLOBAL log_queries_not_using_indexes = 1;

Queries taking longer than 1 second will be logged.

Edit MySQL config:

[mysqld]

slow_query_log = 1

slow_query_log_file = /var/log/mysql/mysql-slow.log

long_query_time = 1

log_queries_not_using_indexes = 1

Restart MySQL:

sudo systemctl restart mysql

Query_time: 2.94

Rows_examined: 184732

SELECT * FROM orders

WHERE user_id = 123

ORDER BY created_at DESC;

This query scanned 184,732 rows to return a few records.

That’s your optimization target.
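For a quick look at a single entry without extra tooling, the two key numbers can be pulled out with a couple of regular expressions. This sketch assumes the entry contains `Query_time:` and `Rows_examined:` fields as in the excerpt above; `parseSlowLogEntry()` is a made-up helper name.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: extract Query_time and Rows_examined from one
 * slow-log entry. Returns null when either field is missing.
 */
function parseSlowLogEntry(string $entry): ?array
{
    if (!preg_match('/Query_time:\s*([\d.]+)/', $entry, $time)) {
        return null;
    }
    if (!preg_match('/Rows_examined:\s*(\d+)/', $entry, $rows)) {
        return null;
    }

    return [
        'query_time' => (float) $time[1],
        'rows_examined' => (int) $rows[1],
    ];
}

$entry = <<<LOG
Query_time: 2.94
Rows_examined: 184732
SELECT * FROM orders
WHERE user_id = 123
ORDER BY created_at DESC;
LOG;

$parsed = parseSlowLogEntry($entry);
```

A high `rows_examined` count for a query that returns only a few rows is the signal to look for.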

mysqldumpslow -s t -t 10 /var/log/mysql/mysql-slow.log

pt-query-digest /var/log/mysql/mysql-slow.log

This gives:

Query frequency

Total execution time

Average latency

Rows examined

composer require laravel/telescope

php artisan telescope:install

php artisan migrate

View query execution time directly in the dashboard.

composer require barryvdh/laravel-debugbar --dev

Never use Debugbar in production.

Enable slow query log

Identify worst queries

Run EXPLAIN

Add or adjust indexes

Refactor queries if needed

Measure before & after

Deploy and monitor

Optimization without measurement is guesswork.

Product::where('name', 'LIKE', '%laptop%')

->where('is_active', 1)

->orderBy('created_at', 'desc')

->paginate(20);

EXPLAIN showed:

Full table scan

Filesort

800k rows scanned

Schema::table('products', function (Blueprint $table) {

$table->fullText('name');

});

Product::whereFullText('name', 'laptop')

->where('is_active', 1)

->paginate(20);

Schema::table('products', function (Blueprint $table) {

$table->index(['is_active', 'created_at']);

});

| Metric | Before | After |
| ------------ | ------- | ------- |
| Rows scanned | 850,000 | 220 |
| Query time | 3.1s | 0.04s |
| CPU usage | High | Minimal |

Same app. Same data.

Just smarter database usage.
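To put the before and after numbers in perspective, the improvement factor is simply the ratio of the two measurements. A tiny sketch, using the figures from the table above and a made-up `improvementFactor()` helper:

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: how many times smaller the "after" measurement is,
 * rounded to one decimal place.
 */
function improvementFactor(float $before, float $after): float
{
    if ($after <= 0.0) {
        throw new InvalidArgumentException('The "after" value must be positive.');
    }

    return round($before / $after, 1);
}

$queryTimeFactor = improvementFactor(3.1, 0.04);
$rowsScannedFactor = improvementFactor(850000, 220);
```

On these figures the query runs about 77.5 times faster while examining roughly 3,864 times fewer rows.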

Laravel News Links