https://opengraph.githubassets.com/39693553b35358a87726adca6a50014c0dfefe0e3a5738c27770fbc91f7d8954/Tautulli/Tautulli

Planet Python


https://i.ytimg.com/vi/wqMeAWhPHOY/maxresdefault.jpg

Laravel News Links

https://areaocho.com/wp-content/uploads/2024/01/GEsj3KjaIAIbh3U.jpg

Here are pictures from someone who went and made one of the conversion kits for an AR. It is a 3D printed, drop in kit that converts a semiautomatic AR into select fire.

To the ATF: These are not my photos, they have never been in my physical presence, and I don’t even own a dog. I don’t have any weapons that you would consider illegal. I am publishing this strictly for educational purposes. I am advising people to never make one of these, because they are illegal and we are law abiding citizens.

Area Ocho

https://hashnode.com/utility/r?url=https%3A%2F%2Fcdn.hashnode.com%2Fres%2Fhashnode%2Fimage%2Fupload%2Fv1705570376993%2Fe99c8069-d576-4833-9b4c-723042366749.png%3Fw%3D1200%26h%3D630%26fit%3Dcrop%26crop%3Dentropy%26auto%3Dcompress%2Cformat%26format%3Dwebp%26fm%3Dpng

In Laravel, the @yield directive is used in blade templates to define a section that can have code injected or "yielded" by child views.

Here’s a basic explanation of how @yield works:

Defining a section with @yield('name')

In a blade file typically used for layouts (can be any *.blade.php file), you can use the @yield directive to define a section where content can be injected. For example:

layouts/app.blade.php

<html>

<head>

<title>@yield('title') - </title>

</head>

<body>

<div class="container">

@yield('content')

</div>

</body>

</html>

In this example, there are two @yield directives: one for the ‘title’ section and another for the ‘content’ section.

In a child view that extends the parent view, you can use the @section directive to fill the sections defined in the parent view.

For example:

@extends('layouts.app')

@section('title', 'Page Title')

@section('content')

<p>This is the content of the page.</p>

@endsection

In this example, the view extends layouts/app.blade.php. The .blade.php extension is left off, and dots stand in for directory separators, so 'layouts.app' resolves to layouts/app.blade.php.

The child view then fills the ‘title’ and ‘content’ sections using @section directives. The directive can take its content as a second argument, as in the title example, or enclose multiple lines terminated by @endsection, as in the ‘content’ example.

When you render the child view, Laravel will combine the content of the child view with the layout defined in the parent view. The @yield directives in the parent view will be replaced with the content provided in the child view.


The resulting HTML will be:

<html>

<head>

<title>Page Title - </title>

</head>

<body>

<div class="container">

<p>This is the content of the page.</p>

</div>

</body>

</html>

For more flexibility, you can break your views into smaller chunks using multiple views, for example:

@include('layouts.partials.header')

<div class="wrapper">

@yield('content')

</div>

@include('layouts.partials.footer')

This layout view uses both @include and @yield directives. @include pulls another view file into the current file at the point where the directive is placed.

@include takes two arguments: a view name and, optionally, an array of data. By default, all data available to the parent view is also available to the included view, but it’s better to be explicit by passing in an array.

For example:

@include('pages', ['items' => $arrayOfItems])

The above example would include a pages.blade.php file and pass in an array called items. Inside that view, $items could then be used.

@include('layouts.partials.header') would include a file called header.blade.php located in layouts/partials.

Typically I define multiple yields in a header like this:

<html>

<head>

@yield('meta')

<title>@yield('title') - </title>

@yield('css')

</head>

<body>

@yield('js')

</body>

</html>

This allows me to inject code into various parts of my layout.

For example, in a blog I want certain meta tags to apply to a single post and to no other page. That means I cannot hardcode the meta tags in the layout; their contents have to change from post to post.

Typically I would inject meta tags in a post view like this:

@section('meta')

<meta itemprop="name" content="">

<meta itemprop="description" content="{!! strip_tags(Str::limit($post->description, 100)) !!}">

@if (!empty($post->image))

<meta itemprop="image" content="">

@endif

<meta name='description' content='{!! strip_tags(Str::limit($post->description, 100)) !!}'>

<meta property="article:published_time" content="" />

<meta property="article:modified_time" content="" />

<!-- Open Graph / Facebook -->

<meta property="og:type" content="website">

<meta property="og:url" content="">

<meta property="og:title" content="">

<meta property="og:description" content="{!! strip_tags(Str::limit($post->description, 100)) !!}">

@if (!empty($post->image))

<meta property="og:image" content="">

@endif

<!-- Twitter -->

<meta property="twitter:card" content="summary_large_image">

<meta name="twitter:site" content="@dcblogdev">

<meta name="twitter:creator" content="@dcblogdev">

<meta property="twitter:url" content="">

<meta property="twitter:title" content="">

<meta property="twitter:description" content="{!! strip_tags(Str::limit($post->description, 100)) !!}">

@if (!empty($post->image))

<meta property="twitter:image" content="">

@endif

<link rel="canonical" href=''>

<link rel="webmention" href='https://webmention.io/dcblog.dev/webmention'>

<link rel="pingback" href="https://webmention.io/dcblog.dev/xmlrpc" />

<link rel="pingback" href="https://webmention.io/webmention?forward=" />

@endsection

I may also inject CSS or JavaScript from a single view. This is done in the same way:

@section('js')

<script>

...

</script>

@endsection

When working with components or Livewire you will come across the concept of slots.

A $slot variable is a special variable used to reference the content that is passed into a component. Components are a way to create reusable and encapsulated pieces of view logic.

Here’s a brief explanation of how $slot works within Laravel components:

When you create a Blade component, you can define a slot within it using the {{ $slot }} syntax.

For example:

<!-- resources/views/components/alert.blade.php -->

<div class="alert">

{{ $slot }}

</div>

In this example, the alert component has a slot where content can be injected.

When you use the component in another view, you can pass content into the slot using the component tag.

For example:

<!-- resources/views/welcome.blade.php -->

<x-alert>

This is the content for the alert.

</x-alert>

In this example, the content "This is the content for the alert." is passed into the $slot of the alert component.

When the Blade view is rendered, Laravel will replace the {{ $slot }} in the component with the content provided when using the component. The resulting HTML will look like this:

<div class="alert">

This is the content for the alert.

</div>

The $slot variable essentially acts as a placeholder for the content passed to the component.

Using $slot allows you to create flexible and reusable components that can accept different content each time they are used. It provides a convenient way to structure and organize your Blade templates while maintaining the reusability of components.

Components can also be layout files, for example making a new component called AppLayout using Artisan:

php artisan make:component AppLayout

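This creates two files: app/View/Components/AppLayout.php (the component class) and resources/views/components/app-layout.blade.php (its Blade view).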

Replace the contents of AppLayout.php with:

<?php

namespace App\View\Components;

use Illuminate\View\Component;

use Illuminate\View\View;

class AppLayout extends Component

{

public function render(): View

{

return view('layouts.app');

}

}

This will load layouts/app.blade.php when the component is rendered.

To have a view use this layout, wrap its content in an <x- tag followed by the component name in kebab case, so AppLayout becomes app-layout:

<x-app-layout>

The content goes here.

</x-app-layout>

Inside app.blade.php, because the layout is now rendered through the AppLayout component, we don’t use @yield for placeholders. Instead, we use {{ $slot }} or named slots.

For example:

<!DOCTYPE html>

<html lang="">

<head>

<title> - </title>

</head>

<body>

{{ $slot }}

</body>

</html>

In components, named slots provide a way to define and pass content into specific sections of a component. Unlike the default slot, which is referenced using {{ $slot }}, named slots allow you to define multiple distinct sections for injecting content. This can be particularly useful when you need to organize and structure different parts of your component.

Here’s a basic explanation of how named slots work.

When creating a Blade component, each named slot is available inside the component view as a variable with the slot’s name, so you echo it where its content should appear. For example:

<!-- resources/views/components/alert.blade.php -->

<div class="alert">

<div class="header">

{{ $header }}

</div>

<div class="content">

{{ $slot }}

</div>

<div class="footer">

{{ $footer }}

</div>

</div>

In this example, the alert component has two named slots, ‘header’ and ‘footer’, plus the default slot, which is simply referred to as $slot.

When using the component in another blade view, you can pass content into the named slots using the component tag. For example:

<!-- resources/views/welcome.blade.php -->

<x-alert>

<x-slot name="header">Alert Header</x-slot>

This is the content for the alert.

<x-slot name="footer">Alert Footer</x-slot>

</x-alert>

Here, content is provided for the ‘header’ and ‘footer’ named slots, in addition to the default slot.

When the blade view is rendered, Laravel will replace the content of the named slots in the component with the content provided when using the component. The resulting HTML will look like this:

<div class="alert">

<div class="header">Alert Header</div>

<div class="content">This is the content for the alert.</div>

<div class="footer">Alert Footer</div>

</div>

Named slots provide a way to structure and organize the content of your components more explicitly. They make it easier to manage complex components with multiple distinct sections by giving each section a meaningful name.

Laravel News Links

https://www.percona.com/blog/wp-content/uploads/2024/01/Introduction-to-Vector-Databases-200×112.jpg

Imagine that winter is coming to the south of the planet, that you are going on a vacation trip to Patagonia, and you want to buy some cozy clothes. You go to that nice searcher page that says “do no evil” and write in the search field “Jackets for Patagonia weather,” not thinking of a […]

Percona Database Performance Blog

https://static1.makeuseofimages.com/wordpress/wp-content/uploads/2024/01/nintendo-switch-lite-in-a-dock.jpg

The Nintendo Switch is one of the easiest consoles to take on the go, but the Nintendo Switch Dock? Not so much. Thankfully, the technology involved isn’t proprietary, and there are plenty of Nintendo Switch dock alternatives that can fill a niche you need, from portability to extra features like an Ethernet port.

Considering the color scheme and available ports, you might mistake the SIWIQU TV Dock Station as the official Nintendo Switch Dock, but it isn’t. Luckily, it’s just as good, if not better, in the right circumstances. It has a rather low profile, making it easy to find a place and equally easy to transport in a Nintendo Switch travel case. The anti-slip feet are also a welcome addition to prevent sudden, unwanted movements.

As for ports, the SIWIQU TV Dock Station has one fewer USB-A port than the original Nintendo Switch Dock, but the trade-off is that one of the ports is USB 3.0. That is a boon if you want to plug in an Ethernet adapter for faster internet speeds, or you can pay a little extra for the LAN model, which has a gigabit Ethernet port. And the best part? It’s also an excellent choice for the Nintendo Switch OLED!

Best Overall

Being both low profile and portable, the SIWIQU TV Dock Station is an excellent alternative for the Nintendo Switch, with a color scheme to match, too. From short-circuit to overheating, it also comes with a myriad of protections in place.

If you’re pressed for cash, or you’re looking for an adorable way to charge your console, the Heiying Docking Station is an easy option to recommend, especially if you have the Nintendo Switch Lite. Designed to look like a Mario mushroom or PokeBall, it totally makes sense to pair the two together.

While the Heiying Docking Station is essentially a glorified charger, it isn’t without a handful of handy features. Along the bottom, you’ll find a large, non-slip rubber ring that holds the charging dock in place. When you dock your Nintendo Switch, you’ll notice the silicone padding around the USB-C connector that the console sits comfortably against, preventing unwanted scratching.

Best Budget

The Heiying Docking Station charges your Nintendo Switch with style, either atop a mushroom or a red and blue Pokeball. It’s not without features, like non-slip rubber feet and padding that protects your console from scratches.

What better option than the official Nintendo Switch Dock itself? Sure, it may not be as portable as some other options on our list, but with it being the official dock, it comes directly from Nintendo, and that means reliability. You’re guaranteed to get a docking station that works flawlessly with the Nintendo Switch, Switch OLED, and Switch Lite.

The original Nintendo Switch Dock (there are two models) comes with two USB 2.0 ports and one USB 3.0 port. The USB 3.0 port is hidden at the back, behind the docking station’s door. With that port having improved performance over USB 2.0, it’s the prime candidate for a USB-A to Ethernet adapter if you want to connect your Switch directly to a modem.

However, the Nintendo Switch OLED Dock has an Ethernet port built right in, but one fewer USB-A port. It also sports a white finish, which is arguably better looking than the all-black. Despite being the “OLED model,” it supports all Switch models. You’ll have to supply your own AC adapter and HDMI cable, though.

The Official Option


If your main concern is reliability, the official Nintendo Switch Dock Set is a no-brainer. It comes with everything you need to charge, display, and connect your Nintendo Switch to the big screen.

Most alternative Switch docks sacrifice a feature or two, but not the D.Gruoiza Switch Dock. Like the original Switch dock, you have three USB-A ports at your disposal, one of which is USB 3.0. And with access to USB-C, you can also charge your Nintendo Switch at the same time.

One feature the original Switch dock lacks is an Ethernet port, which was rectified in the later OLED model. Instead of picking up another Switch dock, you only need to purchase the D.Gruoiza Switch Dock once to have a LAN port. Altogether, the D.Gruoiza Switch Dock lets you charge your Nintendo Switch, connect directly to a modem, and switch to TV mode.

Best Dock with Extra Ports

The D.Gruoiza Switch Dock combines the best aspects of the OLED and original Switch dock models, squeezed into a rather small package, such as a LAN port, HDMI, USB-A 3.0, and charging capabilities.

Given its small stature, the Hagibis Switch Dock is the perfect portable option if you frequently take your Nintendo Switch on the road. Its built-in features are almost identical to the original Switch dock, just in a much slimmer package that’s also quite pleasing to the eyes.

You still retain the ability to connect to the big screen with the Hagibis Switch Dock, in addition to charging it at the same time, thanks to its 100W power delivery port. Sadly, you only get one USB-A port, as opposed to the original dock’s three. It’s a fair trade, and the USB-A port you do get is USB 3.0, which comes in handy for faster data transfer speeds.

Best Portable Dock

With its small form factor and low weight, the Hagibis Switch Dock is the perfect solution for a portable docking station. It functions like the original Nintendo Switch Dock, with power delivery, a USB 3.0 port, and HDMI to cast the console to an external monitor or television.

Yes. You can use any Nintendo Switch model interchangeably with all Switch docks, because every model (Lite, OLED, and original) charges in the same manner via USB-C.

Yes. If you buy a second Nintendo Switch, the two consoles can share the same dock, but keep in mind only one handheld can charge at a time. This works no matter which model you purchase.

Yes, but you’ll still need some extra equipment to make it happen. If you want to connect your Nintendo Switch to a TV without a dock, you at least need a USB-C to HDMI adapter.

Third-party Nintendo Switch docks are perfectly safe as long as they come from a reputable company. When choosing a third-party Nintendo Switch dock, you want to be sure that the dock will not scratch the screen and has overcharging protection.

MakeUseOf

https://www.laraveldeploy.com/twitter-image.png?dafab169a202642f

Laravel News Links

https://picperf.io/https://laravelnews.s3.amazonaws.com/featured-images/Filament_3.2_Social_Image_1.png

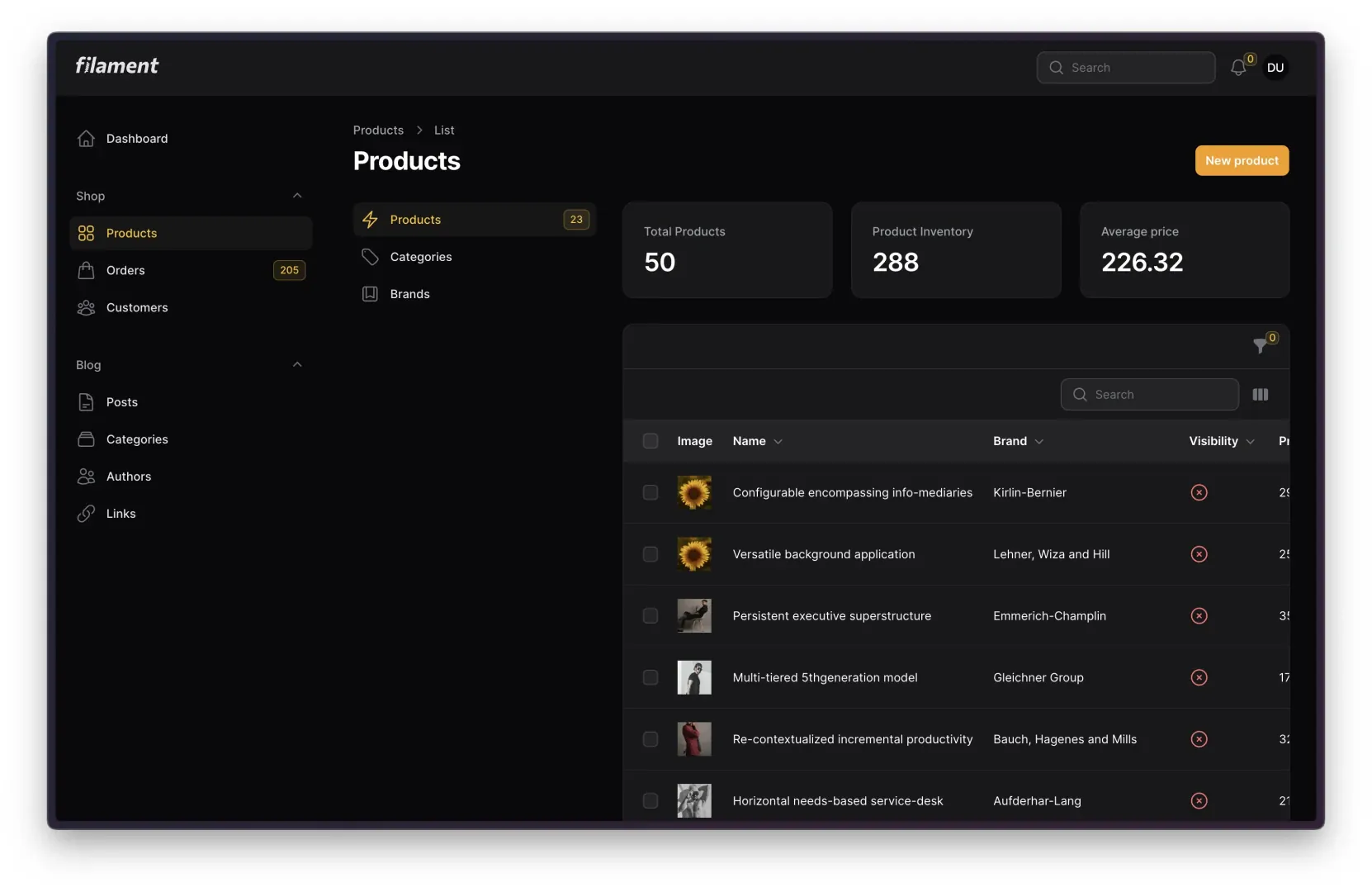

We’re only 15 days into January, and Filament v3.2 has already launched! This release is packed with a bunch of really exciting updates, so let’s dive right in and take a look!

As always, if you prefer to read about the changes directly on GitHub, check out the v3.2 changelog here.

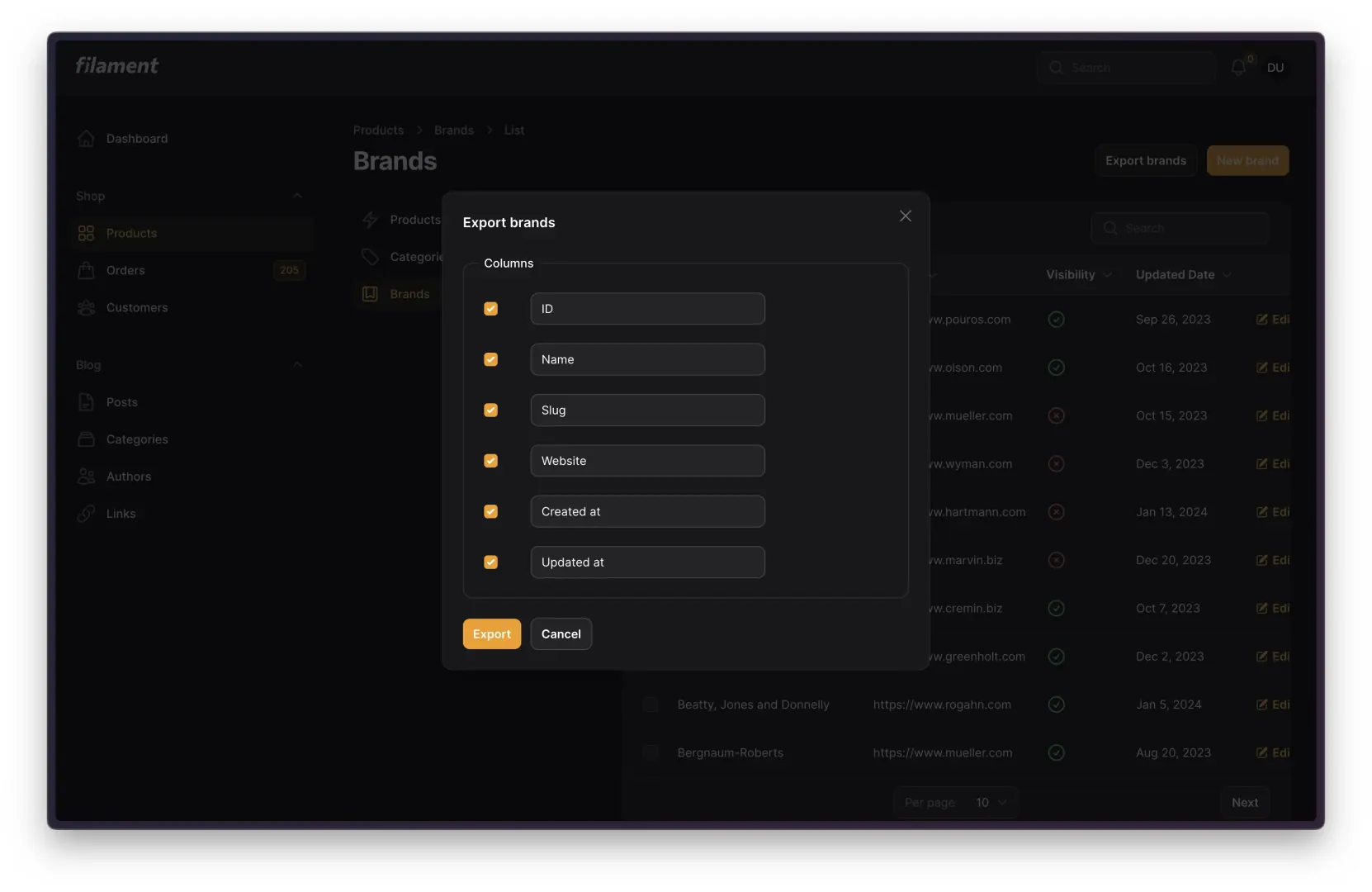

In Filament v3.1, we introduced the ability to import large amounts of data using a CSV. Since then, one of our most commonly requested features has been to add the exact opposite feature–exports! Now, in v3.2, a prebuilt ExportAction has been added to do just that.

Using a very similar API to the Import feature added in v3.1, developers can create Exporters that tell Filament how to export a given set of data.

Within the context of an Exporter, developers can define which columns should be included from the data, how they should be labeled, and even tweak the data that is displayed in each table cell. Additionally, just like with Importers, Exporters can handle displaying data from related models via ExportColumn objects. At their simplest, they can be configured to display data from related models (the name of the User who wrote a Post object), but ExportColumns can even go so far as to aggregate relationships and output data based on the existence of a relationship.

This all just scratches the surface, so make sure to check out the official Export Action documentation on the Filament website for more details!
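As a rough illustration of the Exporter idea described above, here is a minimal sketch. The Post model, its author and comments relationships, and the PostExporter class name are assumptions made for this example and do not come from the release notes.

```php
<?php

namespace App\Filament\Exports;

use App\Models\Post; // hypothetical model, assumed for this sketch
use Filament\Actions\Exports\ExportColumn;
use Filament\Actions\Exports\Exporter;
use Filament\Actions\Exports\Models\Export;

class PostExporter extends Exporter
{
    protected static ?string $model = Post::class;

    public static function getColumns(): array
    {
        return [
            // A plain column with a custom header label.
            ExportColumn::make('title')->label('Post title'),
            // Dot notation reads a value from a related model (assumed "author" relationship).
            ExportColumn::make('author.name')->label('Author'),
            // Aggregates a relationship (assumed "comments") into a count.
            ExportColumn::make('comments_count')->counts('comments'),
        ];
    }

    public static function getCompletedNotificationBody(Export $export): string
    {
        // Text of the notification shown once the export has finished.
        return 'Your post export has completed and '
            . number_format($export->successful_rows)
            . ' rows were exported.';
    }
}
```

The exporter would then be attached to a table with something like ExportAction::make()->exporter(PostExporter::class) inside the table's header actions.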

Clusters are one of the most exciting additions to Filament in v3.2! Building on the sub-navigation feature added in v3.1, clusters are a hierarchical structure within Filament panels that allow developers to group resources and custom pages together. To give a less abstract definition, clusters help to quickly and easily group multiple pages across your Filament panel together, bundling them into a single entry in your main Filament sidebar.

Creating and using clusters is incredibly simple and can be set up in a few easy steps:

1. Use the discoverClusters() method in the Panel configuration file to tell Filament where to find your Cluster classes.

2. Run php artisan make:filament-cluster YourClusterName to have Filament set up a new Cluster class.

3. Add the protected static ?string $cluster = YourClusterName::class property to any resource or page class you want within a given cluster.

Once you have completed these three steps, you’ll see your cluster appear on the main sidebar. And when clicked, Filament will open to a page with sub-navigation containing all resources and pages you gave the $cluster property.
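The three steps above might look roughly like the following sketch. The panel id and path, the directory and namespace passed to discoverClusters(), and the Settings cluster name are assumptions for illustration.

```php
<?php

namespace App\Providers\Filament;

use Filament\Panel;
use Filament\PanelProvider;

class AdminPanelProvider extends PanelProvider
{
    public function panel(Panel $panel): Panel
    {
        return $panel
            ->default()
            ->id('admin')
            ->path('admin')
            // Step 1: tell Filament where cluster classes live (assumed location).
            ->discoverClusters(
                in: app_path('Filament/Clusters'),
                for: 'App\\Filament\\Clusters',
            );
    }
}

// Step 2 (terminal): php artisan make:filament-cluster Settings
//
// Step 3 (inside any resource or page class that belongs to the cluster):
//     protected static ?string $cluster = \App\Filament\Clusters\Settings::class;
```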

This unlocks an incredible amount of flexibility and customizability when building panels in Filament. For more details on clusters and how best to use them, check out the documentation:

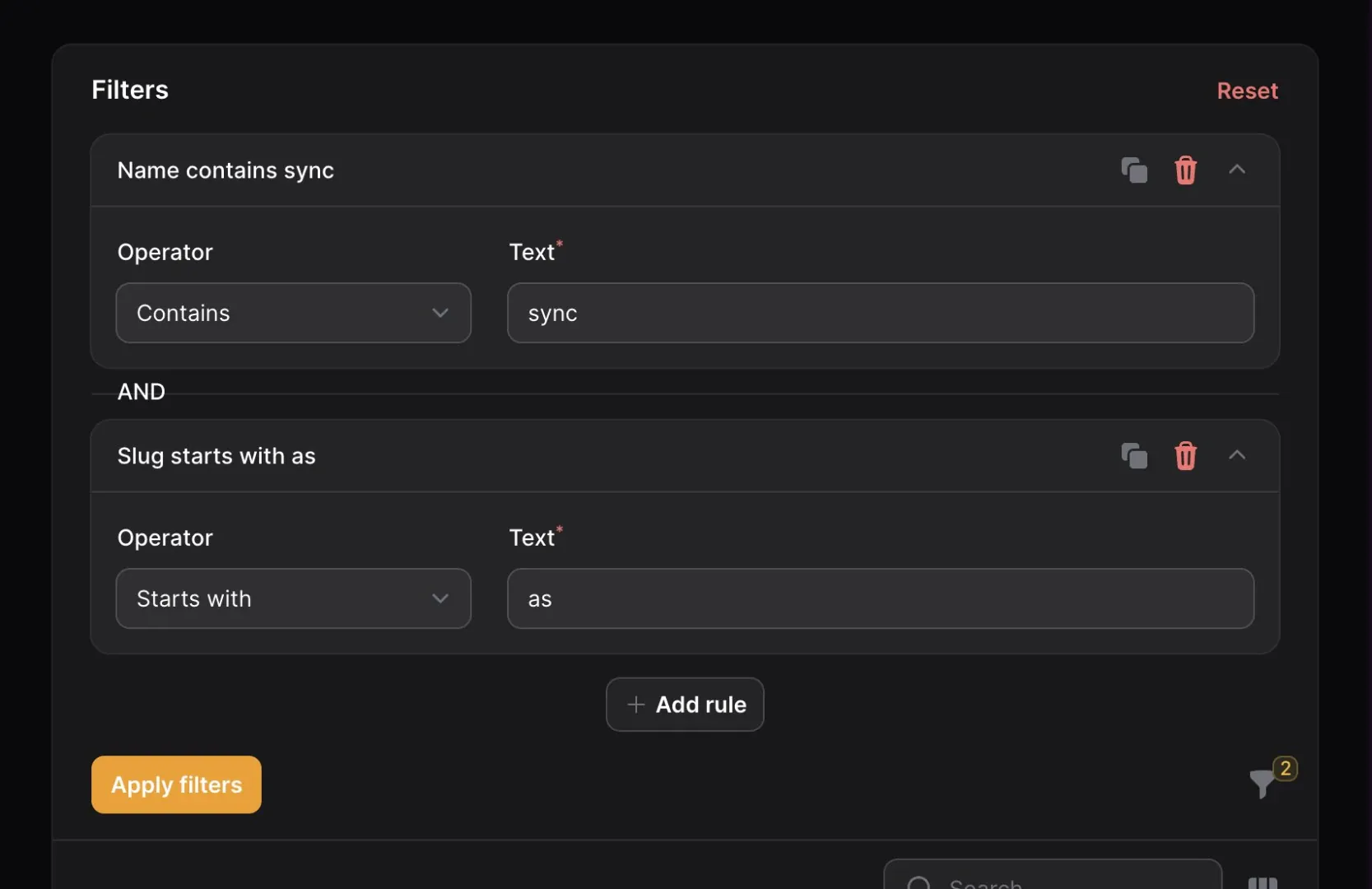

One of the most important features of the Filament tables package is the ability to quickly and easily create filters for the table data. In the past, whenever one of these filters was interacted with on the front-end, it would immediately fire off a request for new, filtered data. A lot of times, this was perfectly fine and users wouldn’t notice much of a delay (if any). However, there are certain occasions where interacting with a filter would cause a noticeable delay in the interface due to heavy queries, immense datasets, etc.

In v3.2, table filters can be deferred! When a table’s filters are deferred, the user can click around and toggle as many filters as they desire, but the actual query only gets run when the user clicks “Apply”. Enabling deferred filters for a table is as simple as adding one line of code to the $table object: ->deferFilters().
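A minimal sketch of a table with one deferred filter follows. The helper function name and the is_featured column are assumptions; in a resource, the chain would sit inside the table() method.

```php
<?php

use Filament\Tables\Filters\Filter;
use Filament\Tables\Table;
use Illuminate\Database\Eloquent\Builder;

// Hypothetical helper; in a resource this would be the body of table().
function configurePostsTable(Table $table): Table
{
    return $table
        ->filters([
            // Example filter on an assumed is_featured column.
            Filter::make('is_featured')
                ->query(fn (Builder $query): Builder => $query->where('is_featured', true)),
        ])
        // The filtered query only runs once the user clicks "Apply".
        ->deferFilters();
}
```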

For more information on deferring table filters, take a look at the documentation:

We’ve all been there. We’ve all been 90% of the way through a long and complex form when we accidentally navigate away from the page, wiping out all of our hard work. Well, thanks to the v3.2 release, this will no longer be a problem for Filament panels!

By chaining the unsavedChangesAlerts() method onto a $panel configuration object, Filament will automatically alert users who try to navigate away from a page without saving their in-progress changes. As you’d expect, these alerts are enabled for “Create” and “Edit” pages, but they are also enabled for any open action modals!
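As a small sketch, enabling the alerts could look like this. The helper function is hypothetical; normally the call would be chained inside the panel() method of a panel provider.

```php
<?php

use Filament\Panel;

// Hypothetical helper; normally chained inside a PanelProvider's panel() method.
function configurePanel(Panel $panel): Panel
{
    return $panel
        // Warn users before they leave a page with unsaved changes.
        ->unsavedChangesAlerts();
}
```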

For more details on these alerts, see the following section of the panel configuration documentation:

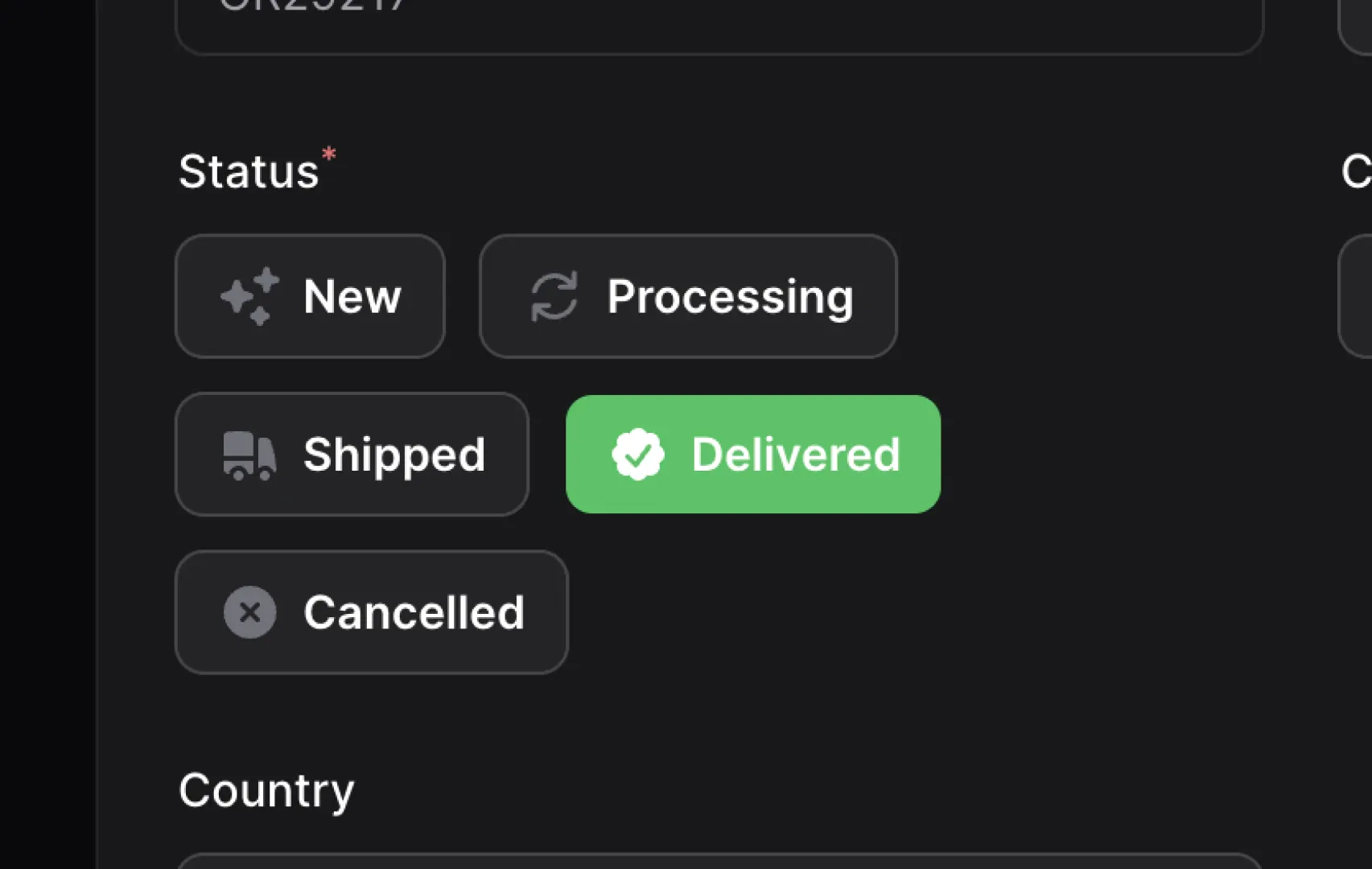

ToggleButtons Component

Filament v3.2 includes a new form component called ToggleButtons. ToggleButtons act as an alternative to the Select, Radio, and CheckboxList components by presenting a new UI for the same sort of functionality. ToggleButtons appear as a grouping of Filament buttons that the user can select one or more of, similarly to how a user would select one or more results from any of the alternative components listed above. As with all of Filament’s UI components, ToggleButtons have plenty of options when it comes to customizing their look and feel, so there should be a solution for every occasion.

Give them a try and let us know what you think!
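For a sense of the API, here is a minimal sketch of a ToggleButtons field. The status field name, its options, and the helper function are assumptions for illustration.

```php
<?php

use Filament\Forms\Components\ToggleButtons;

// Hypothetical helper returning a single-choice toggle group.
function statusToggle(): ToggleButtons
{
    return ToggleButtons::make('status')
        ->options([
            'draft' => 'Draft',
            'scheduled' => 'Scheduled',
            'published' => 'Published',
        ])
        // Lay the buttons out in a row rather than stacked.
        ->inline();
}
```

Chaining ->multiple() would let the user pick more than one option, much like a CheckboxList.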

For more information on ToggleButtons, check out the new dedicated documentation page:

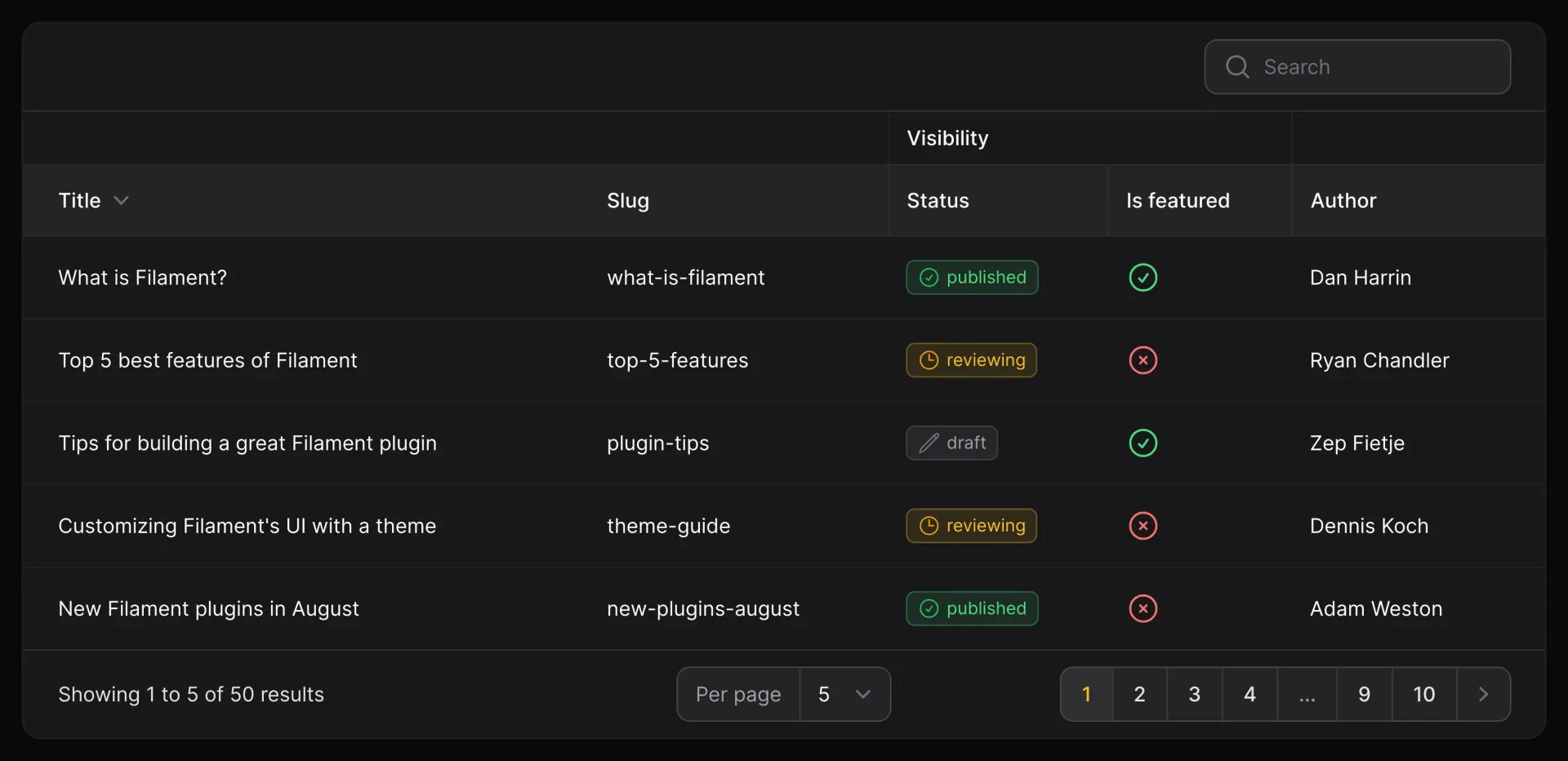

Sometimes, data that is contained within our models and database tables is connected in the business logic, but not in the actual data model itself. This is typically the case with loose groupings; for example, both a status and a is_featured column on a Post model may affect the overall “Visibility” of the Post. Previously, there was no good way in Filament to convey to the user that these two properties were related other than placing them next to each other in the table. However, that all changes with v3.2.

Now, with the same ease with which a developer would add a new column to a Filament table, they can also add column groups to bind columns together in the UI. In the earlier example, status and is_featured both fall under the umbrella of “Visibility”. So, instead of just placing those two columns side-by-side and hoping that the user picks up on the association, we can wrap them in a ColumnGroup and let Filament display the association directly in the table.

The code for those columns would go from this:

TextColumn::make('status'),

IconColumn::make('is_featured'),

to this:

ColumnGroup::make('Visibility', [

TextColumn::make('status'),

IconColumn::make('is_featured'),

])

It’s that simple!

To learn more about grouping columns and the other ways you adjust the ColumnGroup object, take a look at the documentation linked below:

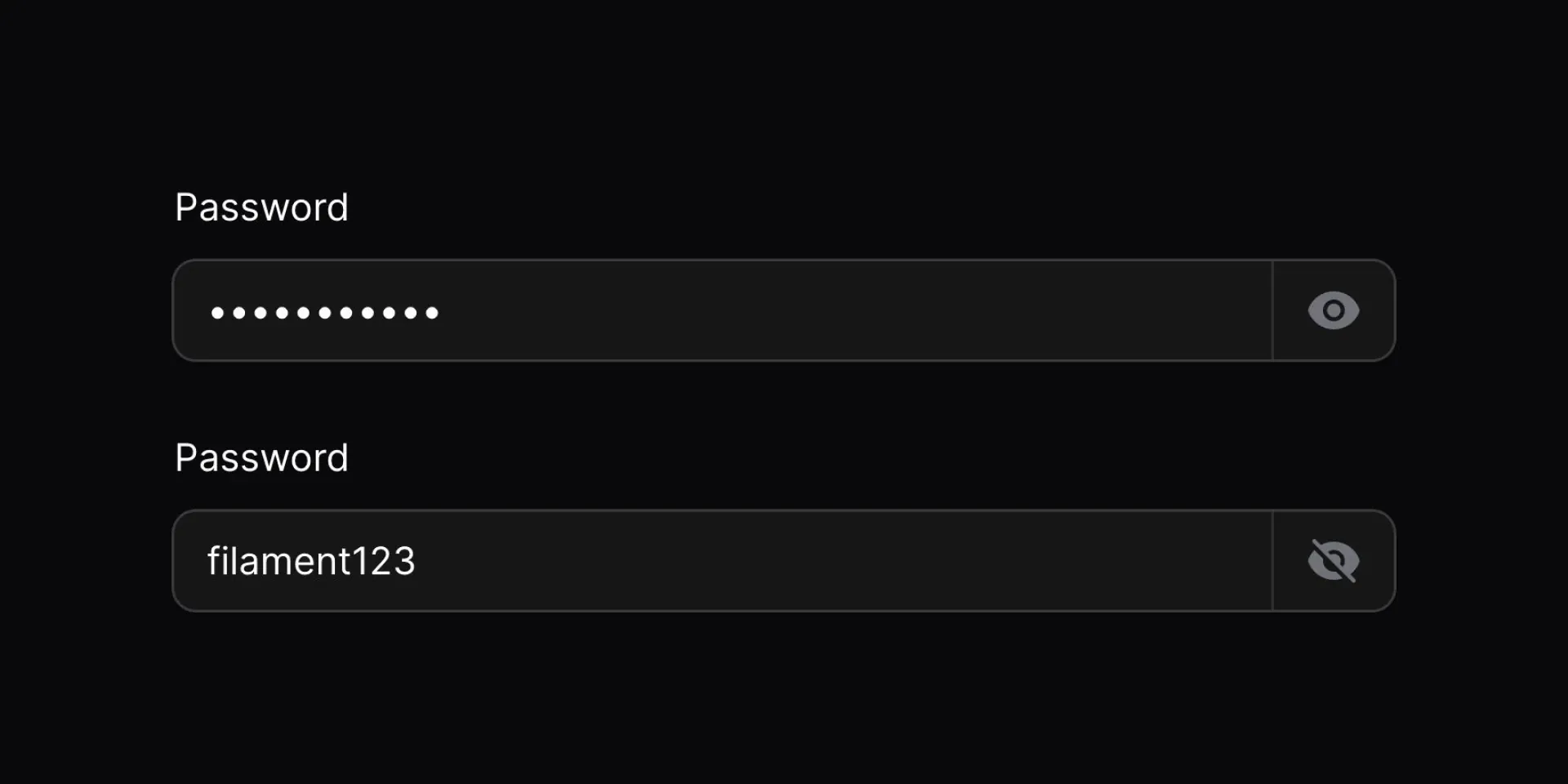

This is a seemingly small update that has been asked for time and time again, so we’re very happy to bring revealable password fields to Filament v3.2!

This does exactly what it says on the tin. Now, by chaining the revealable() method onto your password field declaration, Filament will add a toggle button to the end of the TextInput field that will show/hide the password characters. No more having to hope that the characters behind the masked dots in the input are the ones that you intended to type! Just click and reveal your "password123" password in all its plain-text glory!
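A minimal sketch of such a field is shown below; the helper function name is hypothetical.

```php
<?php

use Filament\Forms\Components\TextInput;

// Hypothetical helper returning a password field with a show/hide toggle.
function passwordField(): TextInput
{
    return TextInput::make('password')
        ->password()    // mask the typed characters
        ->revealable(); // add the button that reveals them
}
```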

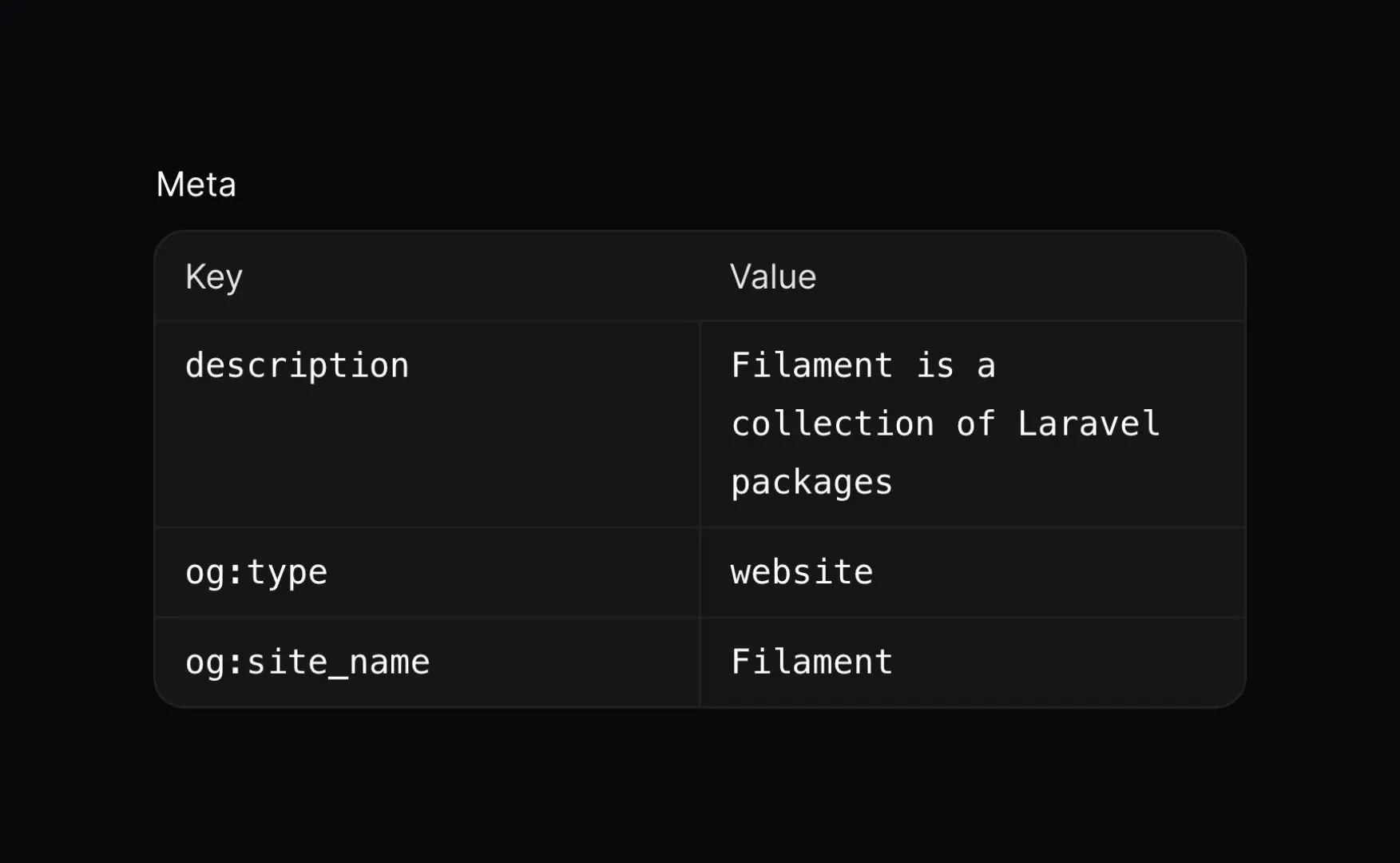

KeyValueEntry Infolist Component

In v3.2, a new Infolist component has been added called the KeyValueEntry. KeyValueEntry components simply display a list of key-value pairs in a nicely formatted two-column table. This list of key-value pairs can be rendered from either a one-dimensional JSON object or a PHP array, which makes this entry exceptionally flexible to meet many use-cases.

The KeyValueEntry component also pairs very well with the existing KeyValue field in the Filament form builder!
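As a sketch, adding the entry to an infolist could look like this. The meta attribute (assumed to hold an array or JSON object on the record) and the helper function name are assumptions.

```php
<?php

use Filament\Infolists\Components\KeyValueEntry;
use Filament\Infolists\Infolist;

// Hypothetical helper; in a resource this would be the body of infolist().
function configurePostInfolist(Infolist $infolist): Infolist
{
    return $infolist->schema([
        // Renders the key-value pairs stored in the assumed "meta" attribute.
        KeyValueEntry::make('meta'),
    ]);
}
```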

For more information, check out the documentation:

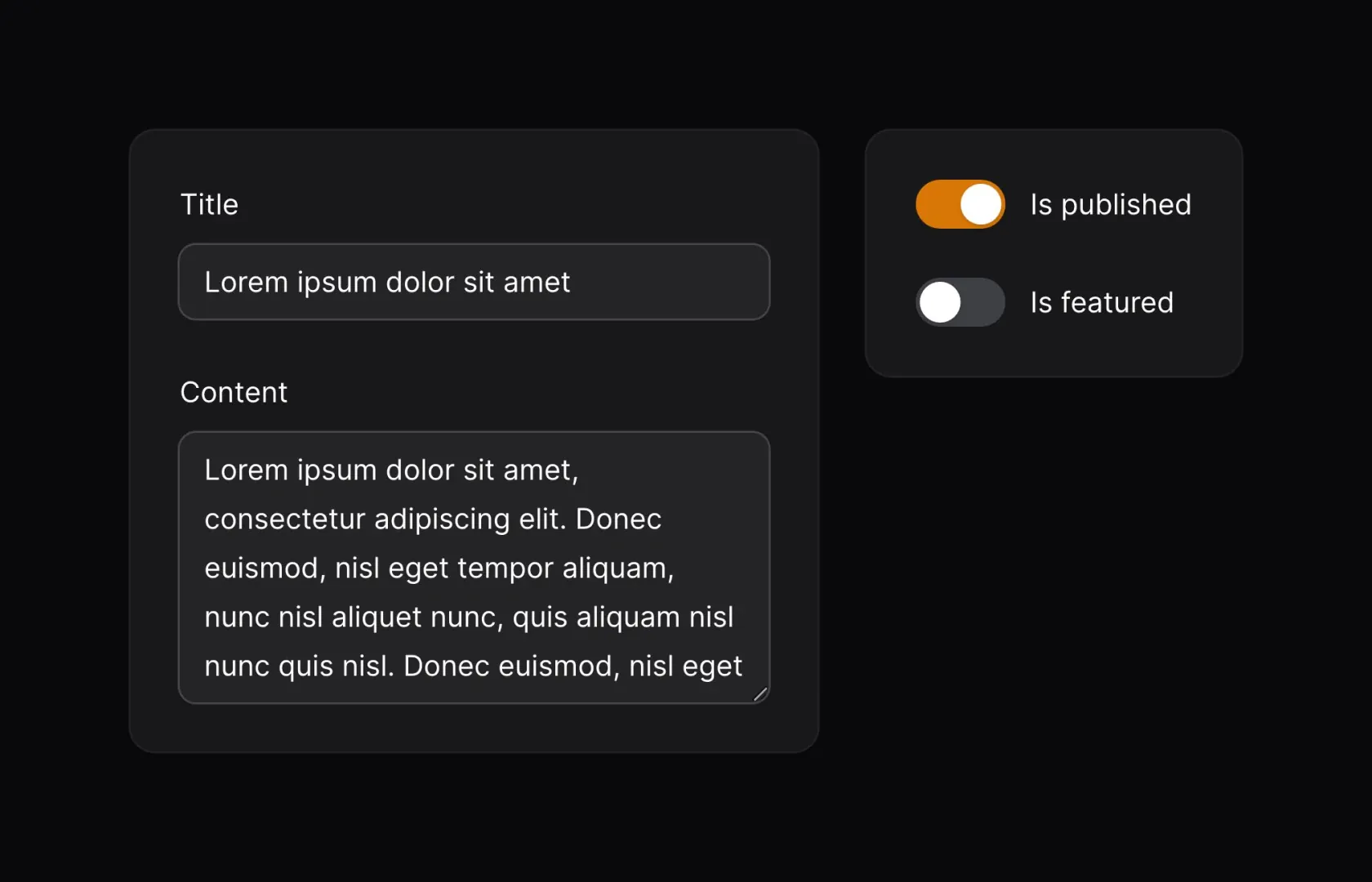

Split Form Layout Component

The Split form layout is another highly-requested feature that we’ve added into v3.2! Until now, form layouts were fairly strictly confined to using a grid with N number of columns. With the Split layout, however, forms can be built with flexibility in mind!

Split, unlike the Grid layout, will allow you to define your layouts with flexbox. This allows for form sections to grow and shrink as the content requires without needing to worry about the content clipping if the section is not wide enough.

One very common use-case for Split so far is to handle sidebars. Often, forms will have one main section that takes up the majority of the page’s horizontal space and will have another sidebar section for metadata, settings, etc. Using the Split layout, we can set the sidebar section to shrink to only take up as much width as the section contents require, allowing our main section to take up as much space as is available!
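The sidebar case described above might be sketched like this. The field names and the helper function are assumptions for illustration.

```php
<?php

use Filament\Forms\Components\Section;
use Filament\Forms\Components\Split;
use Filament\Forms\Components\Textarea;
use Filament\Forms\Components\TextInput;
use Filament\Forms\Components\Toggle;

// Hypothetical helper returning a main section plus a narrow sidebar.
function postFormLayout(): Split
{
    return Split::make([
        // Main section: grows to fill the available width.
        Section::make([
            TextInput::make('title'),
            Textarea::make('content'),
        ]),
        // Sidebar: grow(false) keeps it only as wide as its contents need.
        Section::make([
            Toggle::make('is_published'),
            Toggle::make('is_featured'),
        ])->grow(false),
    ])
        // Stack the sections vertically below the md breakpoint.
        ->from('md');
}
```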

There are a lot of different uses for the Split component, so take a look at the documentation to see where you might be able to implement it in your app!

This is a simple, but very handy quality-of-life change! Now, in v3.2, Filament offers a HasDescription interface that allows enums to have a textual description displayed in the UI, usually under the label. Similarly to the HasLabel interface, HasDescription will require developers to implement the getDescription() method on the given enum, returning the desired description string for each enum case.
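A minimal sketch of an enum implementing both interfaces follows. The Status enum, its cases, and the description strings are assumptions for illustration.

```php
<?php

namespace App\Enums;

use Filament\Support\Contracts\HasDescription;
use Filament\Support\Contracts\HasLabel;

// Hypothetical enum; cases and wording are illustrative only.
enum Status: string implements HasLabel, HasDescription
{
    case Draft = 'draft';
    case Published = 'published';

    public function getLabel(): ?string
    {
        // Use the case name ("Draft", "Published") as the label.
        return $this->name;
    }

    public function getDescription(): ?string
    {
        // Text that can be shown in the UI alongside the label.
        return match ($this) {
            self::Draft => 'Not yet visible to readers.',
            self::Published => 'Visible to everyone.',
        };
    }
}
```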

For code examples and specific instructions, see our documentation here:

Last, but certainly not least, now, when you have a scrolling sidebar in your Filament panel, the active item will always attempt to remain as close to the vertical center of the window as possible when navigating between pages! Hopefully, this will further enhance the user experience, since your users won’t have to hunt around for the currently-active page!

As always, we could not have done this without the incredible Filament community! All of you are so crucial to the work that we do, and we are so thankful for the contributions, discussions, and ideas that each of you gives day in and day out in our Discord and on Twitter.

We’re so excited to finally have released v3.2 for all of you to use. Let us know what you think, we’d love to hear it!

Until next release!

The post Introducing Filament v3.2 appeared first on Laravel News.


Laravel News

Exporting data to an Excel file is a common requirement in many applications. Laravel Excel provides a straightforward way to generate Excel files with data from your application. In this guide, we’ll walk through the process of creating an Excel export file using Laravel Excel and including dropdowns with predefined options.

In this post I’ll create an Excel export that lets the user pick related records directly in the spreadsheet. The exported file, which can later be imported back into the Laravel app, contains a dropdown listing the names of the related records.

To get started, make sure you have Laravel installed along with the Laravel Excel package. Next, create an export class (ItemExport in this case) that implements the necessary interfaces (FromCollection, WithHeadings, and WithEvents).

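namespace App\Exports;

use App\Models\Supplier;

use Maatwebsite\Excel\Concerns\Exportable;

use Maatwebsite\Excel\Concerns\FromCollection;

use Maatwebsite\Excel\Concerns\WithEvents;

use Maatwebsite\Excel\Concerns\WithHeadings;

use Maatwebsite\Excel\Events\AfterSheet;

class ItemExport implements FromCollection, WithHeadings, WithEvents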

{

use Exportable;

protected $selects;

protected $rowCount;

protected $columnCount;

public function __construct()

{

$suppliers = Supplier::orderBy('name')->pluck('name')->toArray();

$selects = [

['columns_name' => 'A', 'options' => $suppliers],

];

$this->selects = $selects;

$this->rowCount = count($suppliers) + 1;

$this->columnCount = 11;

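}

// collection(), headings(), and registerEvents() must also be defined in this class;

// registerEvents() is shown in the next snippet.

}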
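The class above declares FromCollection and WithHeadings, but the two methods those interfaces require are not shown. A minimal sketch of what they might look like follows, written as a separate, hypothetical class so that it stands on its own. The ItemTemplateExport name and the heading names are assumptions, and the three headings are only a placeholder for the eleven columns the constructor configures.

```php
<?php

namespace App\Exports;

use Illuminate\Support\Collection;
use Maatwebsite\Excel\Concerns\FromCollection;
use Maatwebsite\Excel\Concerns\WithHeadings;

// Hypothetical stand-alone class showing only the two missing methods;
// in practice they would live inside ItemExport.
class ItemTemplateExport implements FromCollection, WithHeadings
{
    public function collection(): Collection
    {
        // No data rows: the file is an empty template for the user to fill in.
        return collect();
    }

    public function headings(): array
    {
        // Column A is where the supplier dropdown goes; the other names are assumed.
        return ['Supplier', 'Name', 'Description'];
    }
}
```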

In the ItemExport class, the registerEvents() method is used to define the behavior after the Excel sheet is created. Inside this method, we’ll set up dropdowns for specified columns.

public function registerEvents(): array

{

return [

AfterSheet::class => function (AfterSheet $event) {

$row_count = $this->rowCount;

$column_count = $this->columnCount;

$hiddenSheet = $event->sheet->getDelegate()->getParent()->createSheet();

$hiddenSheet->setTitle('Hidden');

$hiddenSheet->setSheetState(\PhpOffice\PhpSpreadsheet\Worksheet\Worksheet::SHEETSTATE_HIDDEN);

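// array_values() guarantees 0-based integer keys, so each select gets its own hidden column.

foreach (array_values($this->selects) as $selectIndex => $select) {

$drop_column = $select['columns_name'];

$options = $select['options'];

// Store each select's options in a separate hidden-sheet column (A, B, C, ...) so they do not overwrite each other.

$hiddenColumn = \PhpOffice\PhpSpreadsheet\Cell\Coordinate::stringFromColumnIndex($selectIndex + 1);

foreach ($options as $index => $option) {

$hiddenSheet->setCellValue($hiddenColumn . ($index + 1), $option);

}

$validation = $event->sheet->getCell("{$drop_column}2")->getDataValidation();

$validation->setType(\PhpOffice\PhpSpreadsheet\Cell\DataValidation::TYPE_LIST);

$validation->setShowDropDown(true);

$validation->setFormula1('Hidden!$' . $hiddenColumn . '$1:$' . $hiddenColumn . '$' . count($options));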

for ($i = 3; $i <= $row_count; $i++) {

$event->sheet->getCell("{$drop_column}{$i}")->setDataValidation(clone $validation);

}

for ($i = 1; $i <= $column_count; $i++) {

$column = \PhpOffice\PhpSpreadsheet\Cell\Coordinate::stringFromColumnIndex($i);

$event->sheet->getColumnDimension($column)->setAutoSize(true);

}

}

},

];

}

To use the ItemExport class, instantiate it and export the data.

use App\Exports\ItemExport;

use Maatwebsite\Excel\Facades\Excel;

public function export()

{

return Excel::download(new ItemExport(), 'exported_data.xlsx');

}

When executed, this will generate an Excel file (exported_data.xlsx) with the specified columns and dropdowns in the designated columns (A in this example), containing the predefined options.

Feel free to adjust the column names, options, and other configurations according to your specific use case.

Laravel News Links

https://files.realpython.com/media/ie-data-analysis-workflowv3.bfb835b95c5e.png

Data analysis is a broad term that covers a wide range of techniques that enable you to reveal any insights and relationships that may exist within raw data. As you might expect, Python lends itself readily to data analysis. Once Python has analyzed your data, you can then use your findings to make good business decisions, improve procedures, and even make informed predictions based on what you’ve discovered.

In this tutorial, you’ll:

Before you start, you should familiarize yourself with Jupyter Notebook, a popular tool for data analysis. Alternatively, JupyterLab will give you an enhanced notebook experience. You might also like to learn how a pandas DataFrame stores its data. Knowing the difference between a DataFrame and a pandas Series will also prove useful.


In this tutorial, you’ll use a file named james_bond_data.csv. This is a doctored version of the free James Bond Movie Dataset. The james_bond_data.csv file contains a subset of the original data with some of the records altered to make them suitable for this tutorial. You’ll find it in the downloadable materials. Once you have your data file, you’re ready to begin your first mission into data analysis.

Data analysis is a very popular field and can involve performing many different tasks of varying complexity. Which specific analysis steps you perform will depend on which dataset you’re analyzing and what information you hope to glean. To overcome these scope and complexity issues, you need to take a strategic approach when performing your analysis. This is where a data analysis workflow can help you.

A data analysis workflow is a process that provides a set of steps for your analysis team to follow when analyzing data. The implementation of each of these steps will vary depending on the nature of your analysis, but following an agreed-upon workflow allows everyone involved to know what needs to happen and to see how the project is progressing.

Using a workflow also helps futureproof your analysis methodology. By following the defined set of steps, your efforts become systematic, which minimizes the possibility that you’ll make mistakes or miss something. Furthermore, when you carefully document your work, you can reapply your procedures against future data as it becomes available. Data analysis workflows therefore also provide repeatability and scalability.

There’s no single data workflow process that suits every analysis, nor is there universal terminology for the procedures used within it. To provide a structure for the rest of this tutorial, the diagram below illustrates the stages that you’ll commonly find in most workflows:

The solid arrows show the standard data analysis workflow that you’ll work through to learn what happens at each stage. The dashed arrows indicate where you may need to carry out some of the individual steps several times depending upon the success of your analysis. Indeed, you may even have to repeat the entire process should your first analysis reveal something interesting that demands further attention.

Now that you have an understanding of the need for a data analysis workflow, you’ll work through its steps and perform an analysis of movie data. The movies that you’ll analyze all relate to the British secret agent Bond … James Bond.

The very first workflow step in data analysis is to define your objectives carefully and clearly. It’s vitally important for you and your analysis team to be clear on what exactly you’re all trying to achieve. This step doesn’t involve any programming but is every bit as important because, without an understanding of where you want to go, you’re unlikely to ever get there.

The objectives of your data analysis will vary depending on what you’re analyzing. Your team leader may want to know why a new product hasn’t sold, or perhaps your government wants information about a clinical test of a new medical drug. You may even be asked to make investment recommendations based on the past results of a particular financial instrument. Regardless, you must still be clear on your objectives. These define your scope.

In this tutorial, you’ll gain experience in data analysis by having some fun with the James Bond movie dataset mentioned earlier. What are your objectives? Now pay attention, 007:

Now that you’ve been briefed on your mission, it’s time to get out into the field and see what intelligence you can uncover.

Once you’ve established your objectives, your next step is to think about what data you’ll need to achieve them. Hopefully, this data will be readily available, but you may have to work hard to get it. You may need to extract it from the data storage systems within an organization or collect survey data. Regardless, you’ll somehow need to get the data.

In this case, you’re in luck. When your bosses briefed you on your objectives, they also gave you the data in the james_bond_data.csv file. You must now spend some time becoming familiar with what you have in front of you. During the briefing, you made some notes on the content of this file:

| Heading | Meaning |
|---|---|
| Release | The release date of the movie |
| Movie | The title of the movie |
| Bond | The actor playing the title role |
| Bond_Car_MFG | The manufacturer of James Bond’s car |
| US_Gross | The movie’s gross US earnings |
| World_Gross | The movie’s gross worldwide earnings |
| Budget ($ 000s) | The movie’s budget, in thousands of US dollars |
| Film_Length | The running time of the movie |
| Avg_User_IMDB | The average user rating from IMDb |
| Avg_User_Rtn_Tom | The average user rating from Rotten Tomatoes |
| Martinis | The number of martinis that Bond drank in the movie |

As you can see, you have quite a variety of data. You won’t need all of it to meet your objectives, but you can think more about this later. For now, you’ll concentrate on getting the data out of the file and into Python for cleansing and analysis.
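As a rough illustration of getting CSV data into Python, here is a minimal sketch that uses only the standard library. It is not necessarily the tutorial's own approach, which would more naturally build on the pandas DataFrame mentioned earlier. The column headings come from the notes above, but the two sample rows, the `SAMPLE_CSV` name, and the `load_rows` helper are invented placeholders, since the real contents and formatting of james_bond_data.csv aren't shown here.

```python
import csv
import io

# Placeholder rows using the column headings from the notes above.
# The values are invented for illustration; they are NOT from the real dataset.
SAMPLE_CSV = """Release,Movie,Bond,Bond_Car_MFG,US_Gross,World_Gross,Budget ($ 000s),Film_Length,Avg_User_IMDB,Avg_User_Rtn_Tom,Martinis
2001-01-01,Example Movie A,Actor One,Maker X,1000,2500,500,110,7.0,7.5,1
2002-01-01,Example Movie B,Actor Two,Maker Y,2000,4000,800,125,6.5,6.8,2
"""


def load_rows(source):
    """Read CSV text from a file-like object into a list of dicts (hypothetical helper)."""
    return list(csv.DictReader(source))


rows = load_rows(io.StringIO(SAMPLE_CSV))
columns = list(rows[0].keys())

# Every value arrives as a string, so numeric columns still need converting
# during the cleansing stage.
print(columns)
print(len(rows), "rows loaded")
```

To try this against the real file, you could pass an open file handle instead of the in-memory sample, for example `open("james_bond_data.csv", newline="", encoding="utf-8")`. The encoding is an assumption, so adjust it if the file reads incorrectly.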


Planet Python