In Percona Managed Services, we manage Percona Server for MySQL, Community MySQL, and MariaDB. Sometimes a replica hits replication errors and drifts out of sync with the primary. In that case, we can use Percona Toolkit’s pt-table-checksum and pt-table-sync to measure the data drift between the primary and replica servers and bring the replica back in sync with the primary. This blog gives you some ideas on using pt-table-sync for replica tables with triggers.

In my lab, we have two test nodes with replication set up. Both servers run Debian 11 and Percona Server for MySQL 8.0.33, with Percona Toolkit installed.

The PRIMARY server is deb11m8 (IP: 192.168.56.189), and the REPLICA server is deb11m8s (IP: 192.168.56.190).

1. Creating the test tables and the AFTER INSERT trigger

Create the tables and trigger below on the PRIMARY; they will replicate down to the REPLICA. We have two tables: test_tab and test_tab_log. When a new row is inserted into test_tab, the trigger fires and writes the data, plus the user who did the insert, into the test_tab_log table.

Create database testdb;

Use testdb;

Create table test_tab (id bigint NOT NULL , test_data varchar(50) NOT NULL ,op_time TIMESTAMP NOT NULL , PRIMARY KEY (id,op_time));

Create table test_tab_log (id bigint NOT NULL , test_data varchar(50) NOT NULL ,op_user varchar(60) NOT NULL ,op_time TIMESTAMP NOT NULL , PRIMARY KEY (id,op_time));

DELIMITER $$

CREATE DEFINER=`larry`@`%` TRIGGER after_test_tab_insert AFTER INSERT

ON test_tab FOR EACH ROW

BEGIN

INSERT INTO test_tab_log(id,test_data,op_user,op_time) VALUES(new.id, NEW.test_data, USER(),NOW());

END$$

DELIMITER ;

2. Let’s fill in some test data

We insert a row as the root user. You can see that after the data is inserted, the trigger fires as expected.

mysql> insert into test_tab (id,test_data,op_time) values(1,'lt1',now());

Query OK, 1 row affected (0.01 sec)

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

+----+-----------+---------------------+

1 row in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

+----+-----------+----------------+---------------------+

1 row in set (0.00 sec)

We insert another row:

mysql> insert into test_tab (id,test_data,op_time) values(2,'lt2',now());

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

2 rows in set (0.00 sec)

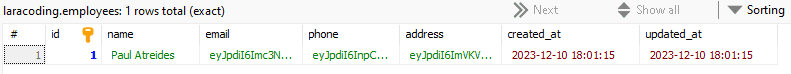

3. Let’s get percona.dsns ready for pt-table-checksum and pt-table-sync

CREATE TABLE percona.dsns (`id` int(11) NOT NULL AUTO_INCREMENT,`parent_id` int(11) DEFAULT NULL, `dsn` varchar(255) NOT NULL, PRIMARY KEY (`id`)) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO percona.dsns (dsn) VALUES ('h=192.168.56.190');

4. Simulate data drift on the REPLICA (192.168.56.190) by removing one row (id=1) from test_tab

mysql> use testdb;

Database changed

mysql> set sql_log_bin=0;

Query OK, 0 rows affected (0.00 sec)

mysql> delete from test_tab where id=1;

Query OK, 1 row affected (0.00 sec)

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

1 row in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

2 rows in set (0.00 sec)

5. Run pt-table-checksum to report the difference

root@deb11m8:~/test_pt_trigger# pt-table-checksum h=192.168.56.189 --port=3306 --no-check-binlog-format --no-check-replication-filters --replicate percona.checksums_test_tab --recursion-method=dsn=D=percona,t=dsns --tables testdb.test_tab --max-load Threads_running=50 --max-lag=10 --pause-file /tmp/checksums_test_tab

Checking if all tables can be checksummed ...

Starting checksum ...

TS ERRORS DIFFS ROWS DIFF_ROWS CHUNKS SKIPPED TIME TABLE

11-26T10:02:58 0 1 2 1 1 0 4.148 testdb.test_tab

root@deb11m8:~/test_pt_trigger#

On the REPLICA, deb11m8s, we can see that the checksum table reports the difference.

mysql> SELECT db, tbl, SUM(this_cnt) AS total_rows, COUNT(*) AS chunks

-> FROM percona.checksums_test_tab

-> WHERE (

-> master_cnt <> this_cnt

-> OR master_crc <> this_crc

-> OR ISNULL(master_crc) <> ISNULL(this_crc))

-> GROUP BY db, tbl;

+--------+----------+------------+--------+

| db | tbl | total_rows | chunks |

+--------+----------+------------+--------+

| testdb | test_tab | 1 | 1 |

+--------+----------+------------+--------+

1 row in set (0.00 sec)
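When many tables are checksummed, the summary gets long. As a minimal sketch, a small shell filter can list only the tables with a non-zero DIFFS value. The function name diff_tables is made up for illustration, and the filter assumes the summary columns appear in the order shown in the pt-table-checksum output above.

```shell
# Hypothetical helper: read pt-table-checksum summary output on stdin and
# print the TABLE column for each result row whose DIFFS column is non-zero.
# Assumes result rows start with a timestamp such as 11-26T10:02:58 and that
# DIFFS is the third whitespace-separated field.
diff_tables() {
    awk '$1 ~ /^[0-9][0-9]-[0-9][0-9]T/ && $3 > 0 { print $NF }'
}
```

For example, the output of the pt-table-checksum command could be piped into diff_tables, and the resulting list used to decide which tables need pt-table-sync.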

6. Let’s try pt-table-sync to fix it; we will run pt-table-sync under user ‘larry’@’%’

pt-table-sync reports that triggers are defined on the table and refuses to continue:

root@deb11m8:~/test_pt_trigger# pt-table-sync h=192.168.56.190,P=3306 --sync-to-master --replicate percona.checksums_test_tab --tables=testdb.test_tab --verbose --print

# Syncing via replication P=3306,h=192.168.56.190

# DELETE REPLACE INSERT UPDATE ALGORITHM START END EXIT DATABASE.TABLE

Triggers are defined on the table at /usr/bin/pt-table-sync line 11306. while doing testdb.test_tab on 192.168.56.190

# 0 0 0 0 0 10:03:31 10:03:31 1 testdb.test_tab

pt-table-sync has an option, --[no]check-triggers; passing --nocheck-triggers skips the trigger check. With it, the --print output shows the statement that would fix the drift:

root@deb11m8:~/test_pt_trigger# pt-table-sync --user=larry --ask-pass h=192.168.56.190,P=3306 --sync-to-master --nocheck-triggers --replicate percona.checksums_test_tab --tables=testdb.test_tab --verbose --print

# Syncing via replication P=3306,h=192.168.56.190

# DELETE REPLACE INSERT UPDATE ALGORITHM START END EXIT DATABASE.TABLE

REPLACE INTO `testdb`.`test_tab`(`id`, `test_data`, `op_time`) VALUES ('1', 'lt1', '2023-11-26 09:59:19') /*percona-toolkit src_db:testdb src_tbl:test_tab src_dsn:P=3306,h=192.168.56.189 dst_db:testdb dst_tbl:test_tab dst_dsn:P=3306,h=192.168.56.190 lock:1 transaction:1 changing_src:percona.checksums_test_tab replicate:percona.checksums_test_tab bidirectional:0 pid:4169 user:root host:deb11m8*/;

# 0 1 0 0 Nibble 10:03:54 10:03:55 2 testdb.test_tab
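Before switching from --print to --execute, it can help to summarize what the tool intends to change. The following is a rough sketch, not part of Percona Toolkit. It assumes the --print output has been saved or piped in, and that each statement starts at the beginning of a line, as in the output above. The helper name count_sync_statements is hypothetical.

```shell
# Hypothetical helper: read pt-table-sync --print output on stdin and count
# the generated statements by type. Comment lines that start with "#" are
# ignored because they do not begin with a statement keyword.
count_sync_statements() {
    awk '
        /^(DELETE|REPLACE|INSERT|UPDATE) / { n[$1]++ }
        END {
            printf "DELETE=%d REPLACE=%d INSERT=%d UPDATE=%d\n",
                   n["DELETE"], n["REPLACE"], n["INSERT"], n["UPDATE"]
        }'
}
```

On a table with an AFTER INSERT trigger, every REPLACE or INSERT counted here is a statement that may also fire the trigger once it is executed.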

When we run pt-table-sync with --execute under user ‘larry’@’%’:

root@deb11m8:~/test_pt_trigger# pt-table-sync --user=larry --ask-pass h=192.168.56.190,P=3306 --sync-to-master --nocheck-triggers --replicate percona.checksums_test_tab --tables=testdb.test_tab --verbose --execute

# Syncing via replication P=3306,h=192.168.56.190

# DELETE REPLACE INSERT UPDATE ALGORITHM START END EXIT DATABASE.TABLE

# 0 1 0 0 Nibble 10:05:26 10:05:26 2 testdb.test_tab

The PRIMARY

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 1 | lt1 | larry@deb11m8 | 2023-11-26 10:05:26 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

3 rows in set (0.00 sec)

The REPLICA

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 1 | lt1 | | 2023-11-26 10:05:26 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

3 rows in set (0.00 sec)

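We can see a new row in the test_tab_log table on both servers. With --sync-to-master, pt-table-sync executes the REPLACE on the PRIMARY so that the fix reaches the REPLICA through replication. The AFTER INSERT trigger therefore fires on the PRIMARY, and the extra log row also appears on the REPLICA (with an empty op_user there).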
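Since --nocheck-triggers only silences the safety check, it is worth knowing which triggers exist on a table before syncing it. Here is a small sketch that builds a query against information_schema.TRIGGERS. The function name trigger_query is an assumption made for illustration, and the arguments are assumed to be trusted identifiers, since no escaping is done.

```shell
# Hypothetical helper: print a query that lists the triggers defined on one
# table. $1 = schema name, $2 = table name (trusted input, not escaped).
trigger_query() {
    printf "SELECT TRIGGER_NAME, ACTION_TIMING, EVENT_MANIPULATION, DEFINER FROM information_schema.TRIGGERS WHERE EVENT_OBJECT_SCHEMA = '%s' AND EVENT_OBJECT_TABLE = '%s';\n" "$1" "$2"
}

# Example use (needs a reachable server and valid credentials):
#   mysql -h 192.168.56.189 -e "$(trigger_query testdb test_tab)"
```

In this lab, the query should return the single after_test_tab_insert trigger created in step 1.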

7. If we do not want that to happen (new row inserted in test_tab_log table)

Option 1: Run pt-table-checksum and pt-table-sync again, this time for the test_tab_log table. This might fix the issue.

Option 2: Change the trigger as shown below (there might be a better way).

Let’s recreate the trigger so that it skips the log insert when the statement is run by ‘larry’ or when USER() is empty.

Drop trigger after_test_tab_insert;

DELIMITER $$

CREATE DEFINER=`larry`@`%` TRIGGER after_test_tab_insert

AFTER INSERT

ON test_tab FOR EACH ROW

BEGIN

IF left(USER(),5) <> 'larry' and trim(left(USER(),5)) <>'' THEN

INSERT INTO test_tab_log(id,test_data, op_user,op_time)

VALUES(new.id, NEW.test_data, USER(),NOW());

END IF;

END$$

DELIMITER ;
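To make the guard condition easier to reason about, here is a simplified shell mirror of the IF test in the trigger. It is only an illustration: would_log is a made-up name, and the sketch does not model MySQL collation rules (for example, case-insensitive comparison).

```shell
# Hypothetical mirror of the trigger's IF condition.
# Returns 0 (true) when a log row would be written for the given USER()
# value, and 1 (false) otherwise.
would_log() {
    prefix=$(printf '%.5s' "$1")                    # like LEFT(USER(), 5)
    trimmed=$(printf '%s' "$prefix" | tr -d ' ')    # empty if only spaces
    [ "$prefix" != "larry" ] && [ -n "$trimmed" ]
}
```

With this logic, root@localhost is logged, while larry@deb11m8 and an empty USER() value are not. Note that any account whose name merely starts with "larry" (a hypothetical larry2@host, for instance) is skipped as well, so a stricter comparison may be preferable in production.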

Then restore the data to its original out-of-sync state.

The PRIMARY

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

The REPLICA

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

1 row in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

2 rows in set (0.00 sec)

Run pt-table-sync under user ‘larry’@’%’.

root@deb11m8s:~# pt-table-sync --user=larry --ask-pass h=192.168.56.190,P=3306 --sync-to-master --nocheck-triggers --replicate percona.checksums_test_tab --tables=testdb.test_tab --verbose --execute

Enter password for 192.168.56.190:

# Syncing via replication P=3306,h=192.168.56.190,p=...,u=larry

# DELETE REPLACE INSERT UPDATE ALGORITHM START END EXIT DATABASE.TABLE

# 0 1 0 0 Nibble 21:02:26 21:02:27 2 testdb.test_tab

pt-table-sync fixes the data drift for us, and the trigger no longer writes a log row when pt-table-sync is run under user larry.

The PRIMARY

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

2 rows in set (0.00 sec)

The REPLICA

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

+----+-----------+---------------------+

2 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

+----+-----------+----------------+---------------------+

2 rows in set (0.00 sec)

8. If we insert more data into test_tab as another user (e.g. root@localhost), the trigger still fires

mysql> select user();

+----------------+

| user() |

+----------------+

| root@localhost |

+----------------+

1 row in set (0.00 sec)

mysql> insert into test_tab (id,test_data,op_time) values(3,'lt3',now());

Query OK, 1 row affected (0.01 sec)

The PRIMARY

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

| 3 | lt3 | 2023-11-26 21:04:26 |

+----+-----------+---------------------+

3 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

| 3 | lt3 | root@localhost | 2023-11-26 21:04:26 |

+----+-----------+----------------+---------------------+

3 rows in set (0.00 sec)

The REPLICA

mysql> select * from test_tab; select * from test_tab_log;

+----+-----------+---------------------+

| id | test_data | op_time |

+----+-----------+---------------------+

| 1 | lt1 | 2023-11-26 09:59:19 |

| 2 | lt2 | 2023-11-26 10:01:30 |

| 3 | lt3 | 2023-11-26 21:04:26 |

+----+-----------+---------------------+

3 rows in set (0.00 sec)

+----+-----------+----------------+---------------------+

| id | test_data | op_user | op_time |

+----+-----------+----------------+---------------------+

| 1 | lt1 | root@localhost | 2023-11-26 09:59:19 |

| 2 | lt2 | root@localhost | 2023-11-26 10:01:30 |

| 3 | lt3 | root@localhost | 2023-11-26 21:04:26 |

+----+-----------+----------------+---------------------+

3 rows in set (0.00 sec)

Our test case covers only a single AFTER INSERT trigger. A live production system may be more complex: several types of triggers on the table you want to sync, auto-increment columns, foreign key constraints, and so on. Test in a non-production environment first, and make sure you have a valid backup before making any change.
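One way to keep the dry run as the default is to wrap the command used in this post in a small helper. This is a sketch under stated assumptions: sync_cmd is a hypothetical name, the port and the checksum table (percona.checksums_test_tab) are hard-coded to match this lab, and the function only prints the command rather than running it.

```shell
# Hypothetical helper: print a pt-table-sync command line for one table.
# $1 = replica host, $2 = db.table, $3 = "execute" to emit --execute.
# Without the third argument the command uses --print (dry run).
sync_cmd() {
    replica_host=$1
    table=$2
    mode=--print
    if [ "${3:-}" = "execute" ]; then
        mode=--execute
    fi
    printf 'pt-table-sync h=%s,P=3306 --sync-to-master --nocheck-triggers --replicate percona.checksums_test_tab --tables=%s --verbose %s\n' "$replica_host" "$table" "$mode"
}
```

Calling sync_cmd 192.168.56.190 testdb.test_tab prints the --print form. Only after reviewing that output would you request the --execute form by passing execute as the third argument.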

I hope this will give you some ideas on pt-table-sync on a table with triggers.
