https://mydbops.files.wordpress.com/2022/06/mydumper_stream.png?w=569

Mydumper supports streaming of backups right from version 0.11.3 and the latest version Mydumper 0.12.3 it enabled its support for compressed streaming backup. This was the most awaited feature added to Mydumper, making it a more powerful tool for data migration to RDS or Cloud.

If you are hearing mydumper for the first time, then let’s have a quick catch-up on what Mydumper is and what it does exactly.

Mydumper is a multithread logical backup and restores tool for MySQL and its forks. To know more you can refer to our previous blogs/presentations below.

- https://mydbops.wordpress.com/2016/01/31/faster-logical-backup-with-mydumper/

- https://mydbops.wordpress.com/2019/03/26/mysqldump%e2%80%8b-vs-mysqlpump-vs-mydumper/

In this blog, we will discuss in short how this streaming process works and how to get the required output.

How does this work?

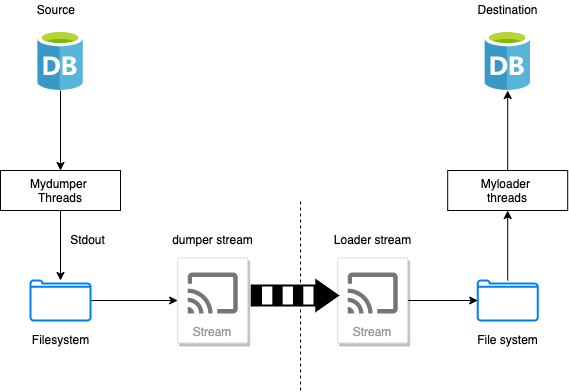

The working of the stream is quite simple

- Mydumper threads read data from the source DB and write to the file system parallels.

- Now mydumper stream thread enqueues these files one by one and pipes them to the stdout.

- Myloader stream reads them and writes to its local filesystem.

- Now myloader threads restore them parallel to the destination by maintaining the thread_id sequence.

How to use it?

Below is the working command which I have used for the production use case to restore a table to RDS using stream.

mydumper -t 6 -u mydbops --password='XXXXXXX' -h localhost -P 3306 --compress -o /mysql/logs/backup -B 'testdb' -T 'testdb.member --stream | myloader --threads=4 -u admin --password='XXXXX' -h 'mydbops-stg.bvbkjkuliuie.ap-south-1.rds.amazonaws.com' -P 3308 -v 4 -d /mysql/logs/restore -o -B ‘testdb’ --stream–stream : mydumper indicates that files created need to be streamed through STDOUT.

–stream : myloader will create a thread to read the stream and creates the file locally

–no-delete : retains the files locally in both source and destination this is optional

By default, once the file is successfully transferred from the source it gets deleted immediately, similarly at the destination once the streamed file is applied it gets deleted from its file system. This avoids high disk utilization during file backup when migrating a high volume of data.

Logs: ** Message: 12:11:12.002: File backup/testdb-member-create.sql.gz transfered | Global: 0 MB/s ** Message: 12:11:12.003: Thread 3 dumping schema for `testdb`.`member` ** Message: 12:11:12.003: Thread 4 dumping data for`testdb`.`member`| Remaining jobs: -3 ** Message: 12:11:12.003: Opening: backup/testdb-member-schema.sql.gz ** Message: 12:11:12.003: File backup/testdb-member-schema.sql.gz transfered | Global: 0 MB/s ** Message: 12:11:12.064: Non-InnoDB dump complete, unlocking tables ** Message: 12:11:12.064: Shutdown jobs enqueued ** Message: 12:27:54.912: Finished dump at: 2022-06-09 12:27:54 ** Message: 12:27:54.913: Removing file: /mysql/logs/restore/restore/testdb-member-schema.sql.gz ** Message: 12:27:54.914: Thread 4 restoring table `testdb`.`member` from ** Message: 12:27:56.433: Removing file: /mysql/logs/restore/restore/testdb-member.00000.sql.gz ** Message: 12:27:56.434: Shutting down stream thread 2 ** Message: 12:27:56.434: Starting table checksum verification

Key Takeaways:

- With a stream, Mydumper is considered an easy and faster method for the migration of data

- Disk utilization is always kept under control with an auto-purge of backup files.

Planet MySQL