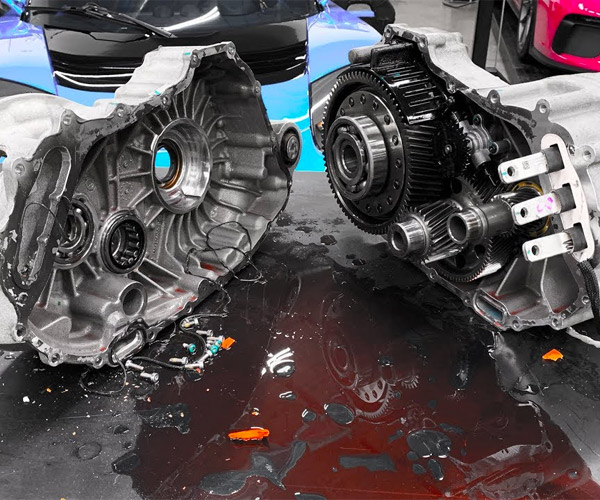

The What’s Inside? channel presents one of its more costly videos, ripping open one of the powerful drive motors from a Tesla Model S to see all of the gears, goo, and other goodies inside. This particular rear motor dates back to 2012 and was purchased on eBay.

Comic for October 08, 2019

Add Two-Factor Email Verification to Laravel Auth

This is an example project in which we add a unique verification code, sent via email, every time a user logs in. At the end of the article, you will find a link to a free GitHub repository with the full project code.
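To make the idea concrete, here is a minimal, language-neutral sketch of the flow in Python rather than Laravel/PHP. It is not the article's implementation: the names `issue_code`, `verify_code`, and `CODE_TTL_SECONDS`, the 6-digit format, the 10-minute lifetime, and the in-memory store are all assumptions made for illustration.

```python
import hashlib
import hmac
import secrets
import time

CODE_TTL_SECONDS = 600  # assumed 10-minute lifetime for a code

# In-memory stand-in for a database table: user_id -> (code_hash, expires_at)
_pending = {}


def _hash(code):
    """Hash the code so the plain value is never stored."""
    return hashlib.sha256(code.encode("utf-8")).hexdigest()


def issue_code(user_id, now=None):
    """Create a fresh 6-digit code for this login attempt and remember its hash."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"
    _pending[user_id] = (_hash(code), now + CODE_TTL_SECONDS)
    return code  # a real application would email this instead of returning it


def verify_code(user_id, submitted, now=None):
    """Accept the code only if it matches, has not expired, and was not used before."""
    now = time.time() if now is None else now
    entry = _pending.get(user_id)
    if entry is None:
        return False
    code_hash, expires_at = entry
    if now > expires_at:
        del _pending[user_id]  # expired codes are discarded
        return False
    if not hmac.compare_digest(code_hash, _hash(submitted)):
        return False
    del _pending[user_id]  # one-time use: a verified code cannot be replayed
    return True
```

In this sketch a new code replaces any earlier one for the same user, so only the most recent email is valid. A production version would also limit the number of failed attempts.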

via Laravel News Links


Why Laravel? 40 Must-Have Laravel Tools & Resources

Laravel is just a framework for the PHP programming language. But for successful project delivery, coding skills and technical knowledge are not enough. So-called “soft” skills are as vital as writing the code, so I decided to touch on that part as well.

I have delivered hundreds of projects, and have been a business owner for 8 years. So, believe me, I know a thing or two about successful project management.

Here is my point. As a client, you cannot just write “I need a website like Facebook but for cats”. Yes, the developers are smart guys. But you cannot assume they will figure it all out by themselves. You know your business needs better than them.

To be fair, developers usually don’t care that much about business. They care about delivering good quality code, which is not always the same goal as yours.

You have to take care of the project’s initial description and explanations. Moreover, it is very important to be involved in all stages of the project.

I don’t mean micro-management and constantly bothering developers with “How’s it going”. I mean always being there if you are needed. You should be ready to answer the questions, provide feedback and test the ongoing versions.

You don’t need to be technical. You don’t have to know the internals of Laravel, or any coding for that matter. It’s usually enough that you explain your needs in your own words, from a business point of view. Then it’s the dev team’s job to turn that into a technical specification first, and then into the final result.

I may offer a solution here. Find a lead technical person or a project manager from your end. This person will be kind of an interpreter between your world and developers. He or she can help with the technical details for the project itself or prepare the specification for potential candidates for the job.

Otherwise, you would need to perform project management yourself or rely on developers to be business-savvy and help you with that. Usually that means a higher price, and that is reasonable: in this case you’re hiring not just coding hands, but also people to help you with the strategy.

Laravel learning resources

What is great about Laravel is that you can easily become an expert in the framework. The Laravel ecosystem is not only about the development tools you are using. It also has much to offer for your education.

Here are some educational platforms and courses for aspiring developers that I would recommend.

Laracasts

Laracasts is an educational resource for web developers with excellent screencasts about Laravel, Vue, PHP in general, Databases and much more. This service is like a “Netflix for your career”.

An easy way to start is the Laravel from Scratch series, and you can stay up to date with What’s New in Laravel.

Test Driven Laravel

When it comes to development strategy, I prefer to practice TDD (test-driven development). Any developer can employ this strategy using Laravel.

Test Driven Laravel is a perfect course for such purposes. Take this video course and make your application more robust.

Confident Laravel

All of us know that we should write tests for our applications, but only a few applications have tests. In this course you will learn how to break down the barriers to testing. Every experienced and ambitious developer writes tests. And, of course, we want to lower the time spent on testing our applications.

I recommend checking out the Confident Laravel video course. It teaches you to write tests for Laravel apps confidently.

Laracon Online

Laracon Online is an annual online conference. It provides the most convenient and affordable way for everyone to get the Laracon experience.

Effective PHP

Nuno Maduro’s Writing Effective PHP video course goes deep and makes a significant contribution to a developer’s education.

The course teaches you to write short, simple code. It explains the main principles of preventing bugs and much more.

Laravel Core Adventures

Laravel Core Adventures is an excellent video series to gain knowledge and have fun.

Build a Chatbot Course

The Build a chatbot course will teach you to develop, extend and test chatbots and voicebots with PHP.

Laravel Certification

If you have already obtained knowledge and skills in Laravel development, feel free to confirm them with the Laravel Certification Program.

Laravel Community

Laravel is an amazing framework in itself, but its constant development is closely tied to its community, which shares tips and code, offers help, and points out things that need maintenance. That is why I want to mention some essential resources for every Laravel web developer.

Laracasts Forum

Laracasts Forum is a place where developers share their experience and tutorials. Here you can ask for advice and discuss matters.

LaravelIO

LaravelIO is another place to discuss development matters. It lets you find answers to various questions and share your experience.

Larachat Slack Channel

Larachat Slack Workspace is a Slack workspace with different channels dedicated to different topics.

Laravel News

Laravel News is a weekly newsletter about what is trending in the community.

Laravel Podcast

Laravel Podcast is a perfect place to discuss what is trending in the Laravel community.

LaraJobs

LaraJobs is a place to find and post Laravel, PHP and technical jobs.

Laravel Blog

Laravel Blog is the official Laravel blog, where you can find information about the latest Laravel releases, events, and podcasts from Taylor (Laravel’s creator).

Laravel Ecosystem

The Laravel ecosystem has much to offer. I don’t usually praise the things I use, but rather try to stay critical. But the Laravel ecosystem is something I cannot help talking about over and over again. I would like to mention a bunch of elegant and useful tools for your development.

Development Environments

Laravel Valet

Laravel Valet easily sets up a minimalist development environment for Laravel applications. It’s an excellent tool for macOS users.

The main features:

- Fast (uses roughly 7 MB of RAM)

- Easy to set up

- No configuration needed (just create a folder in the web root)

- Easy to use (provides a simple command-line tool)

- No need for Vagrant or Docker

- Supports ngrok tunnels

- Allows manual installation of extra services (like Redis or MySQL) via Homebrew

Laravel Valet is open-source software. All the documentation is available on the official Laravel website.

Laravel Homestead

Laravel Homestead is a pre-packaged Vagrant box. It provides an excellent development environment. There is no need to install PHP, a web server, or any other server software on your local machine.

The main features:

- Works on macOS, Windows and Linux systems

- Vagrant boxes are easy to remove and rebuild

- All necessary services come out of the box (such as PHP 7.3, Nginx, MySQL, Redis and others)

- Shares a folder between your host and guest machine

Laravel Homestead is also open-source software. Check all the necessary documentation on the official Laravel website.

Laravel Extensions/Packages

Laravel Passport

Laravel Passport is the simplest possible tool for API authentication. It is a full OAuth2 server implementation that is very easy to use.

The main features:

- Makes an OAuth2 server easy to set up and use with a simple command-line tool

- Comes with all necessary database migrations, controllers and routes

- Includes pre-built Vue.js components

Laravel Passport is open source and is the official OAuth2 implementation for Laravel. Find all the documentation here.

Laravel Scout

Add full-text search to your Eloquent models with Laravel Scout. It is a powerful tool that keeps search indexes synchronised with your Eloquent records.

The main features:

- Comes with an Algolia driver, which is a fast solution

- Lets you write custom drivers instead of Algolia and extend Scout with your own search implementations

Laravel Scout is open source. You can check it out on the official Laravel site.

Laravel Spark

Laravel Spark is a perfect tool for speeding up the development of typical SaaS application features.

The main features:

- Is an excellent tool for building your product

- Lets you focus on bringing value to the user

- Handles user management (authentication, password reset, team billing, two-factor authentication, API, announcements, invoicing, payments and more)

- Is highly customizable

- Empowers your applications with Vue.js

- Ships with Stripe.js v3 to ensure the best security for payment and subscription process

- Is built on Bootstrap 4.0

- Supports the latest Laravel versions

- Comes with complete localization

- Comes with a clean and intuitive settings dashboard

- Allows you to build up a business logic and see what the end product will look like

Laravel Spark is a paid package priced at $99 per site or $299 for unlimited sites. Learn more on the official Spark site.

Laravel Nova

Laravel Nova is a Laravel administration panel with a great UI and UX that speeds up development.

The main features:

- Provides a full CRUD interface for your Eloquent models

- Is easy to add to your Laravel application, whether new or existing

- Configures with simple PHP code

- Easily displays custom metrics for your app (with query helpers included)

- Integrates with Laravel authorization policies (even for relationships, lenses, fields and tools)

- Comes with Nova CLI to take full control over new field types implementation and design

- Provides queued actions

- Enables you to add lenses to control the Eloquent query

- Provides CLI generators to scaffold your own custom tools

- Can be integrated with Laravel Scout to get lightning-fast search results

- Includes built-in filters for soft deleted resources

- Supports the latest versions of Google Chrome, Apple Safari and Microsoft Edge

The beautifully designed Nova panel is priced from $99 to $199 per project. Check all the information and documentation here.

Laravel Dusk

If you want to test your application and see how it works from the user’s point of view, try Laravel Dusk. This tool provides automated browser testing with a developer-friendly API. Laravel Dusk uses ChromeDriver by default.

The main features:

- Does not require you to install the JDK or Selenium (but you are free to use any Selenium driver if you wish)

- Is a powerful tool for web applications that use JavaScript

- Eases the process of testing various clickable elements of your app

- Saves screenshots and browser console output for failed tests, so you can see what has gone wrong

Laravel Dusk is free. Check it out on the official website.

Laravel Socialite

Laravel Socialite is a package that provides a fluent interface for authentication with OAuth providers, such as Facebook, Twitter, Google, LinkedIn, GitHub and many others.

The main features:

- Is easy to use

- Contains almost all the social authentication code you may need

- Has great community support with a lot of providers

Find all the necessary documentation for implementing Laravel Socialite here.

Laravel Echo

Laravel Echo is a JavaScript library. It allows you to subscribe to channels and listen for broadcast events over WebSockets.

The main features:

- Lets you add real-time updates to your app

- Provides all types of channels (public, private and presence)

- Lets you broadcast P2P events with the whisper method

- Works with popular solutions out of the box, such as the Pusher service or the Socket.IO library

Laravel Echo can be installed for free. Check all the documentation here.

Laravel Medialibrary

The Laravel Medialibrary package associates all sorts of files with Eloquent models. This package makes working with media objects a breeze.

The main features:

- Allows you to take any media file directly from the web via URL

- Lets you use a custom directory structure

- Lets you define file conversions: different image sizes, adjustments, effects, etc.

- Allows for automatic image optimization

- Lets you create several media collections for one Eloquent model

Laravel Medialibrary offers plenty of possibilities. Take a look at the documentation.

Laravel Mix

Laravel Mix (previously called Laravel Elixir) is a tool that gives you an almost completely managed front-end build process. It provides a clean and flexible API for defining basic Webpack build steps for your Laravel application.

The main features:

- Provides a broad API that covers almost all your needs

- Works as a wrapper around Webpack and lets you extend it

- Eliminates all the difficulties associated with setting up and running Webpack

- Works with modern JavaScript tools and frameworks: Vue.js, React, Preact, TypeScript, Babel, CoffeeScript

- Transpiles and bundles Less, Sass and Stylus into CSS files

- Supports BrowserSync, Hot Reloading, Assets versioning, Source Mapping out of the box

Laravel Mix can be installed for free. Check all the documentation on the official website.

Laravel Cashier

Laravel Cashier is a package that makes subscription billing easier than ever before. While I personally find the Stripe PHP library a perfect tool in itself, using it directly is more complex. Cashier lets me avoid the potential difficulties and eases subscription management.

The main features:

- Eases the use of the Stripe subscription billing service

- Has a simple, small, easy-to-understand codebase

- Gives the Stripe PHP library a clear, comprehensible and intuitive interface

- Handles coupons, subscription trials, one-off charges, generating invoice PDFs and much more

- Complies with the European SCA regulations

The package is free. Set Laravel Cashier up with the help of the documentation here.

Laravel Envoy

Laravel Envoy is a useful task runner with a clean and minimal syntax.

The main features:

- Simplifies the deployment process

- Uses familiar Blade style syntax

- Can be used outside Laravel (and even PHP)

- Uses very simple configuration

- Has a stories feature that groups a set of tasks under a single, convenient name, so you can combine small, focused tasks into larger ones. Every story can be run like a regular task

- Allows you to run tasks on multiple servers

- Has an option of parallel execution

- Supports sending notifications to Slack and Discord (you will get notifications after each task execution)

Laravel Envoy is a free tool that can be installed by following the documentation here.
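The stories idea can be pictured with a small conceptual model. The sketch below is plain Python, not Envoy's Blade-style syntax, and every name in it (`task`, `story`, `run`, and the `git`, `composer`, and `deploy` labels) is hypothetical. It only illustrates how a story is a named, ordered group of tasks that runs like a single task.

```python
from typing import Callable, Dict, List

# Registries for the conceptual model: task name -> function, story name -> task names
tasks: Dict[str, Callable[[], str]] = {}
stories: Dict[str, List[str]] = {}


def task(name):
    """Decorator that registers a small, focused task under a name."""
    def register(fn):
        tasks[name] = fn
        return fn
    return register


def story(name, task_names):
    """Group existing tasks under one convenient name, preserving their order."""
    stories[name] = list(task_names)


def run(name):
    """Run a task or a story by name and return the outputs in execution order."""
    if name in stories:
        return [tasks[task_name]() for task_name in stories[name]]
    return [tasks[name]()]


@task("git")
def pull_code():
    return "pulled latest code"


@task("composer")
def install_dependencies():
    return "installed dependencies"


# A story is invoked exactly like a single task
story("deploy", ["git", "composer"])
```

Calling `run("deploy")` executes both tasks in order, while `run("git")` still runs the single task on its own.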

Laravel Horizon

Laravel Horizon is a queue manager that allows you to completely control your Redis queues.

The main features:

- Lets you monitor queues with a clean web UI

- Gives a detailed and comprehensible interface for reviewing and retrying failed jobs

- Allows you to monitor relevant runtime metrics (jobs throughput, retries and failure) in real time

- Outputs the recent retries for the job directly on the failed job detail page

- Stores all your worker configuration in a single, simple configuration file (thus, all the configuration stays in source control)

- Makes it simple for your team to collaborate

- Enables you to use auto-balancing for your queue worker processes

- Has useful notifications

- Lets you tag jobs (and automatically assigns tags to most jobs)

Laravel Horizon is free and can be set up with the help of the official documentation.

BotMan Studio

BotMan Studio is a bundle built on top of the Laravel framework for a better chatbot development experience.

The main features:

- Provides a web driver implementation, so you can develop your chatbot locally and interact with it via a Vue.js chat widget

- Is suitable for various platforms (Slack, Telegram, Amazon Alexa, Cisco Spark, Facebook Messenger, Hangouts Chat, HipChat, etc.)

- Its logic can be used to write your own chatbot specifically for your app

- Provides test tools for your chatbot

- Supports a middleware system, NLP, and retrieving and storing user information

BotMan Studio can be installed by following the documentation here.

Laravel Tenancy

Laravel Tenancy is a tool for developing multi-tenant Laravel platforms.

The main features:

- Provides a drop-in solution for Laravel without sacrificing flexibility

- Lets you scaffold a multi-tenant SaaS platform irrespective of the project complexity

- Provides a clear separation of assets and databases

- Comes with close, optional integration into the web server

- Is a perfect fit for marketing companies that prefer to re-use functionality across different projects

- Lets you add configs, code, routes and more for a specific tenant

- Provides integration tutorials for popular solutions such as Laravel Permissions and Laravel Medialibrary

Laravel Tenancy is a free package for any kind of project. Find all the necessary documentation here.

Lumen

If you don’t need the whole Laravel framework, Lumen is a perfect option. It is a micro-framework that minimizes the bootstrapping process.

The main features:

- Is all about fast performance

- Works perfectly if you need to support both web and mobile apps

- Is useful for micro-services and APIs

- Lets you work with the Eloquent ORM, queues and other Laravel components without using the full framework

- Eases routing, caching and other common tasks

Lumen is open source, with all the documentation stored here.

Laravel Telescope

Laravel Telescope is a polished Laravel debugging assistant. Well, imagine the best debugger you have ever used became a standalone UI with supertools. Then you get Laravel Telescope.

The main features:

- Eases the process of development

- Provides a powerful interface to monitor and debug numerous aspects of your app

- Expands the horizons of the development process by providing direct access to a wide range of information

- Cuts down on bugs and gives you ideas on how to improve your application

- Gives a sense of the requests coming into your application, with a clear view of exceptions, database queries, mail, log entries, cache operations, notifications and much more

- Collects information on how long commands and queries take to execute

It is totally free. You can find installation instructions for Laravel Telescope on the official website.

Laravel WebSockets Package

WebSockets for Laravel is something every developer has been thirsting for. This powerful package makes implementing a WebSockets server in PHP a breeze.

The main features:

- Completely handles the WebSockets server side

- Replaces Pusher and Laravel Echo Server

- Is Ratchet-based, but doesn’t require you to set up Ratchet yourself

- Ships with a real-time debug dashboard

- Provides a real-time chart for you to inspect the key WebSockets metrics (peak connections, the number of messages sent and API messages received)

- Can be used in multi-tenant applications

- Comes with the Pusher message protocol (all the packages you already have that support Pusher will work with Laravel WebSockets too)

- Is totally compatible with Laravel Echo

- Preserves all the main Pusher features (private and presence channels, Pusher HTTP API)

Check Laravel WebSockets documentation here.

Helpful Services in Laravel

Laravel Forge

Laravel Forge makes provisioning and deploying web applications as easy as pie. It takes over most of the administrative work.

The main features:

- Provides easy server management with a simple and clear UI

- Works with DigitalOcean, Linode, AWS and Vultr out of the box

- Lets you set up a custom VPS

- Provides preconfigured, up-to-date software for all your purposes (Ubuntu, PHP, Nginx, MySQL etc.)

- Lets you forget about the pain of deploying and hosting and concentrate on developing

- Takes the trouble out of creating and provisioning a new server

- Allows you to restart each service, or the whole server, directly from the UI

- Easily sets up the necessary SSH keys for server access

- Installs SSL certificates within seconds

- Supports Let’s Encrypt (free SSL certificates) out of the box

- Gives an instant Nginx configuration for domains and subdomains

- Provides easy private networking settings for horizontal scaling

- Lets you build and configure servers and share them with the team

- Lets you attach Git repositories to each site for provisioning

- Supports GitHub, Bitbucket, GitLab and custom repositories

- Provides automatic deployments when a Git branch is updated

- Provides simple Bash deployment scripts that can be triggered via a “Deployment Trigger URL”

- Configures scheduled tasks, firewall rules and queue workers

- Can be used with any PHP framework

- Provides automatic setup and settings for Blackfire and Papertrail

Laravel Forge is a paid service. Its price depends on the chosen plan and ranges from $12 to $39 per month. Every plan has a 5-day free trial. Learn more about Laravel Forge on the official site.

Laravel Vapor

What about a serverless deployment platform that does your job for you? I’ll take two, please. I am talking about the Laravel Vapor service, which I personally find impressive.

The main features:

- Is an autoscaling platform powered by AWS Lambda

- Comes with auto-scaling databases, cache clusters and queue workers

- Lets you manage Laravel infrastructure with ease

- Lets you upload files directly to S3 with Vapor’s built-in JavaScript utilities

- Provides zero-downtime deployments and rollbacks

- Is CI friendly

- Provides environment variables, DNS and database management (including point-in-time restores and scaling)

- Allows custom application domains

- Lets you create secrets, which are like environment variables but encrypted at rest, versioned and without the 4 KB limit

- Automatically uploads assets to the CloudFront CDN during deployment

- Allows certificate management and renewal

- Provides unique vanity URLs for each environment, ensuring prompt inspection

- Supplies key metrics (app, database and cache)

- Provides database and cache tunnels for easy local inspection

- Comes with a pretty CLI tool

Laravel Vapor has a fixed price for unlimited projects and deployments. There are $39 monthly and $399 yearly plans (exclusive of AWS cloud costs). Learn more on the official Laravel Vapor webpage.

Chipper CI

If you are looking for a Laravel continuous integration tool, Chipper CI is something you definitely need.

The main features:

- Runs PHPUnit and Laravel Dusk seamlessly and with zero configuration

- Provides really fast and stable Laravel-oriented CI

- Uses intelligent dependency caching, allowing fast build speeds

- Integrates easily with Laravel Forge, Envoyer and Vapor deployments

What can I say, hats off to David Hemphill and Chris Fidao, who developed this perfect tool to ease every Laravel developer’s life. Chipper CI is a paid service. It comes with a 14-day free trial and a $39 monthly plan covering unlimited projects and team members and 1 concurrent build. Go to the official Chipper CI website to learn more.

Flare

Flare is the error tracker the Laravel community was longing for.

The main features:

- Offers immediate solutions and related documentation for common problems

- Provides a clear and focused interface for solving common issues

- Collects local and production errors

- Lets the Ignition error page automatically fix things for you with just a click

- Allows you to collaborate by sharing exceptions to fix errors efficiently

- Reduces the time you spend fixing bugs

- Provides exception tracking and notifications

Flare has a 7-day free trial, 3 monthly plans priced from $29 to $279, and 3 yearly plans priced from $319 to $3069. Look through the documentation and details here.

Laravel Shift

What if I told you that you could upgrade your Laravel versions automatically? Well, not you personally: it can all be done by Laravel Shift.

The main features:

- Upgrades Laravel versions automatically and instantly

- Provides the fastest way to upgrade any Laravel version

- Saves your time and nerves

- Works perfectly with Bitbucket, GitLab and GitHub projects

- Does not keep a copy of your code

The Laravel Shift service offers various plans to cover all your needs, with prices ranging from $7 to $59 per month. You can find all the details on the official website.

Laravel Envoyer

Laravel Envoyer is a zero-downtime deployer for your PHP and Laravel applications.

The main features:

- Keeps a fully functional app available to the end user while deployments are in progress

- Supports unlimited and customizable deployments to multiple servers along with the app health monitoring

- Provides a clear and clean UI for deployment configuration

- Integrates with GitLab, GitHub, Bitbucket and Slack

- Provides GitLab self-hosted integration

- Monitors cron jobs

- Provides seamless deployment rollbacks

- Allows for unlimited team members and deployments

Laravel Envoyer costs $10 to $50 per month, depending on the number of your projects. The service offers a 5-day trial. For more detailed information, please visit the official website.

Laravel Ecosystem is all the rage

If you tell me these tools didn’t impress you, well, what possibly could? Do not forget that this is just a list of things I personally like about the Laravel environment. There are more tools you can try.

I see that the framework is gaining more and more popularity each year. The Laravel community is growing with an irresistible force. That means that there will be even more tools and packages launched in 2019 and the following years. Using Laravel tools certainly speeds up development and improves the quality of your future projects.

Laravel makes development efficient. This framework will certainly help you realize your wildest ambitions. If you are already using Laravel, try some of the tools I mentioned above. If not, you can switch from your current framework at any time. The Laravel ecosystem is easy to enter and, believe me, you won’t want to go back.

via Laravel News Links


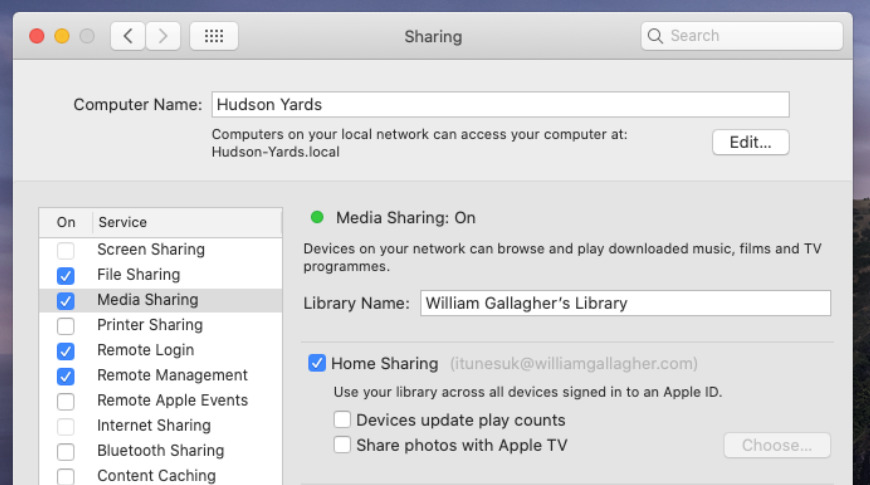

How to turn on Home Sharing in the Music and TV apps in macOS Catalina

The iTunes feature that let you stream your personal media to other devices like an Apple TV is still in macOS Catalina, but the procedure is a bit different. Here’s what you need to know to set it up, turn it on, and why it’s worth doing.

via AppleInsider


Comic for October 06, 2019

Transcript

Man: I hear what you’re saying, and I disagree.

Dilbert: Because…?

Man: Because what?

Dilbert: Do you have any reasons for your disagreement?

Man: No, I’m a lifestyle disagreer. I disagree with everyone all the time. The reasons are irrelevant.

Dilbert: You sound smart.

Man: No. I’m not smart.

Dilbert: And you’re attractive too.

Man: No. I’m ugl…okay, I see what you’re doing.

Star Trek: Picard (Trailer 2)

CBS All Access drops another look at the upcoming Jean-Luc Picard series, which kicks off with the best Starfleet Captain ever coming out of retirement to come to the aid of a mysterious young woman. And the Captain now has two Number Ones: his dog, and William Riker. Premieres 1/23/20.

It’s just one big ST:NG reunion in latest trailer for Star Trek: Picard

Jean-Luc Picard (Patrick Stewart) comes out of retirement in Star Trek: Picard, coming soon to CBS All Access.

Star Trek fans have been eagerly awaiting the debut of the new series, Star Trek: Picard, slated to debut in January 2020. It was first announced at the Las Vegas Star Trek Convention in August 2018, and a nice long trailer just debuted at New York Comic-Con. It’s practically a Star Trek: The Next Generation reunion, featuring plenty of familiar faces alongside the new cast.

Rumors began swirling about a Picard-centric spin-off series shortly after Star Trek: Discovery showrunner Alex Kurtzman signed a five-year development deal with CBS to further expand the franchise for its streaming service. One potential snag was whether Patrick Stewart, who created the character, would be willing to reprise his role. Kurtzman envisioned a more contemplative tone, describing the series as “a more psychological show, a character study about this man in his emeritus years.” The concept was sufficiently unique that Stewart signed on.

“I truly felt my time with Star Trek had run its course,” Stewart said at the 2018 convention. But he professed himself “humbled” by the stories from fans about how much the character of Picard and ST:NG had brought comfort to them during difficult times in their lives. “I feel I’m ready to return to him for the same reason—to research and experience what comforting and reforming light he might shine on these often very dark times.” There are ten episodes in the first season, with plans for two additional seasons if the series succeeds.

- Who is that Federation officer in the vineyard?

- Data (Brent Spiner) appears in Picard’s dreams

- Just a retired captain and his trusty Number One.

- A mysterious young woman needs Picard’s help

- Federation headquarters looks much the same.

- “This isn’t your house anymore.”

- Picard finds a pilot in Christobal (Santiago Cabrera)

- Christobal greets Dr. Agnes Jurati (Alison Pill)

- Raffi (Michelle Hurd) is a former intelligence officer.

- Elnor (Evan Evagora) is skilled at hand-to-hand combat.

- Picard’s still got a few fencing moves.

- Seven of Nine (Jeri Ryan) is back with guns blazing

- It’s Riker! (Jonathan Frakes)

- Is that Deanna Troi (Marina Sirtis)?

YouTube/CBS All Access

The first trailer debuted at San Diego Comic Con in July. Picard has retired to the family vineyard, filled with regret because Data sacrificed his life to save him in Star Trek: Nemesis. (The series is set about 20 years after those events.) His bucolic existence is interrupted by the arrival of a mysterious woman named Dahj (Isa Briones), who pleads, “Everything inside of me says that I’m safe with you.” It’s unclear who she is, but she has shapeshifting powers and is quite the athlete. Clearly Picard has his suspicions, telling an admiral at what appears to be a Starfleet outpost, “If she is who I think she is, she is in serious danger.”

We caught glimpses of Seven of Nine (Jeri Ryan) and Data (Brent Spiner). And the SDCC unveiling included confirmation that at least two other regulars from ST:NG would at least make an appearance on Picard: Hugh (Jonathan Del Arco) and William Riker (Jonathan Frakes). We do indeed catch sight of Riker in this latest trailer, along with Deanna Troi (Marina Sirtis). The new cast members include Christobal (Santiago Cabrera), a skilled thief who becomes the pilot of Picard’s ship; Raffi (Michelle Hurd), a former intelligence officer and recovering addict; Dr. Agnes Jurati (Alison Pill); and two Romulan refugees, Elnor (Evan Evagora) and Narek (Harry Treadaway).

The new trailer opens with a dream sequence, as Picard encounters Data in the vineyard working on a strangely haunting painting of a woman. He offers to let Picard complete the painting but Picard responds he doesn’t know how. “That is not true, sir,” Dream Data replies, handing him the paintbrush. As images of past tragedy flash by, Picard wakes up next to his trusty dog, Number One. “I came here to find safety,” he says in a voiceover. “But one is never safe from the past.”

When the mysterious Dahj shows up, he travels to Federation headquarters to convince them to help her. But he’s been gone a long time, and his entreaties are rebuffed. Never one to admit defeat, Picard amasses his own crew, both old and new faces, for a top-secret unauthorized rescue mission. “The past is written,” he intones. “But we are left to write the future.”

Star Trek: Picard premieres January 23, 2020, on CBS All Access. Feel free to toast the occasion with a nice glass of Star Trek Wine.

Listing image by YouTube/CBS All Access

via Ars Technica

It’s just one big ST:NG reunion in latest trailer for Star Trek: Picard

7 Free Bootable Antivirus Disks to Clean Malware From Your PC

Your antivirus or antimalware suite keeps your system clean. At least, it does most of the time. Security programs are better than ever, but some malware still squeezes through the gaps. There is also the other common issue: the human touch. Where there is a human, there is a chance for malware to slip through.

When that happens, you can reach for a bootable antivirus disk. A bootable antivirus disk is a malware removal environment that works like a Linux Live CD or USB. Here are seven free bootable antivirus disks you should check out.

1. Kaspersky Rescue Disk

The Kaspersky Rescue Disk is one of the best bootable antivirus disks, allowing you to scan an infected machine with ease. The Kaspersky Rescue Disk scanner has a reasonable range of antivirus scanning options, including individual folder scanning, startup objects, system drive, and fileless objects. You can also scan your system boot sectors, which is a nice option to find stubbornly hidden malware.

The Kaspersky Rescue Disk also comes with a smattering of additional tools, such as Firefox and a file manager.

Another handy Kaspersky Rescue Disk feature is the choice of a graphical or text-based interface. For most users, the GUI is the best option, but the text-only mode is available for low-power machines.

Kaspersky consistently achieves high scores in antivirus testing, and the Kaspersky Rescue Disk brings that to you in a bootable format. Kaspersky also features in our list of the best antivirus suites for Windows 10.

Download: Kaspersky Rescue Disk (Free)

2. Bitdefender Rescue CD

Bitdefender Rescue CD is of the same ilk as Kaspersky’s offering. It is a large download, has a simple-to-use UI, and comes with several antivirus scan options to help you figure out what’s wrong with your system.

The scanning interface is easy to navigate, allowing you to exclude certain file types, scan archive files (such as a .ZIP or .7z), scan files below a particular size, or just drag and drop individual files into the scanner. For the most part, however, the standard scan is fine as you want to make sure your system is free of malware.

Also included in the Bitdefender Rescue CD are Firefox, a file browser, a disk recovery tool, and a few other utilities.

The major downside to Bitdefender Rescue CD is that it is no longer updated; therefore, the virus signatures are outdated. Still, it is a decent rescue disk.

Download: Bitdefender Rescue CD (Free)

3. Avira Rescue System

Avira Rescue System is an Ubuntu-based bootable antivirus rescue disk. It is one of the best bootable antivirus environments for beginners, as it includes a handy guide to walk you through scanning your drives. Moreover, Avira offers very little in the way of scan customization. Again, this might suit a beginner, as there is less chance of turning part of a scan off and missing a malicious file.

The Avira Rescue System environment is easy to navigate, with a streamlined interface of labeled boxes. Like the other options, the Avira Rescue System includes a web browser and a disk partitioning tool amongst others.

As Avira is an Ubuntu-based rescue disk, it also works well with Linux-based systems.

Download: Avira Rescue System (Free)

4. Trend Micro Rescue Disk

From three of the largest bootable antivirus rescue disks to the smallest. The Trend Micro Rescue Disk may well be the smallest bootable antivirus disk on this list, but it packs a handy punch that will help you regain control of your system.

Given its size (around 70MB at the time of writing), you can forgive the Trend Micro Rescue Disk for having no graphical user interface. Instead, you use the rescue disk exclusively through plain text menus. It sounds a little daunting. But in practice, the text menus are simple to navigate, and you will find your way through.

Download: Trend Micro Rescue Disk for Windows (Free)

5. Dr.Web Live Disk

Despite the slightly suspect name (Dr.Web sounds like a phony internet firm, to me at least), Dr.Web Live Disk offers a wide range of antivirus scanning options in its bootable antivirus environment. In comparison with the alternatives, Dr.Web’s range of scanning options is extensive.

For instance, you can configure the types and sizes of files for inclusion and exclusion. You can set individual actions for specific types of malware, such as a bootkit, dialer, adware, and so on. You can also limit the amount of time the virus scan spends on individual files, handy if you have multiple large media file types.

Dr.Web Live Disk has another string in its bow, too: you can use it to scan Linux systems.

Download: Dr.Web Live Disk (Free)

6. AVG Rescue

AVG is one of the most recognizable names in security. The AVG Rescue disk has a very basic, text-only interface, but it does allow for customizable scanning.

For instance, you can mount a specific Windows volume and scan only that, or scan specific files within that volume. Alternatively, you can scan Windows Startup objects or even just the Windows Registry to seek out specific malware types. (Should you clean the Windows Registry, anyway?) In addition, AVG Rescue comes with a few diagnostic and analytical tools to help you fix drive-specific issues, rather than just straight-up malware.

The very basic text interface won’t be everyone’s cup of tea. Having to navigate back and forth through the interface using the arrow keys is infuriating at times. But, given the range of options available and the accuracy of the AVG Rescue disk scanner, you might overlook the arrow keys.

Download: AVG Rescue (Free)

7. ESET SysRescue Live

The final bootable antivirus disk for your consideration is ESET SysRescue Live, an advanced antivirus rescue disk with lots of features.

ESET SysRescue Live comes with extensive antivirus scan control. You can scan archives, email folders, symbolic links, boot sectors, and more, or use the ESET scan profiles. The ESET SysRescue Live disk also packs in a disk analysis tool to check for defects and other failures, plus a memory test tool to check your system RAM for errors.

The rescue environment also comes with the Chromium browser, partition manager GParted, TeamViewer for remote system access, and a smattering of other handy utilities.

Download: ESET SysRescue Live (Free)

What Is the Best Free Bootable Antivirus Disk?

For me, the best free bootable antivirus disk is a toss-up between Kaspersky Rescue Disk and Dr.Web Live Disk.

The Kaspersky Rescue Disk is cumbersome but effective, updating regularly and backed by Kaspersky’s excellence in the field of security. But it doesn’t have the same extensive functionality as the Dr.Web Live Disk. While the latter cannot truly compete with Kaspersky on scanning terms alone, the scanning options available in the Dr.Web Live Disk make it a handy tool.

Regarding the other tools, all are strong and will clean your system. Depending on the severity of the malware, you could download two bootable antivirus disks and run them one after the other to make sure nothing slips through the net.

For more malware removal advice, check out our malware removal guide.

Image Credit: Asiorek/Depositphotos

via MakeUseOf.com

7 Free Bootable Antivirus Disks to Clean Malware From Your PC

Now UI Dashboard Preset for Laravel

The Laravel Frontend Presets GitHub organization has a frontend preset for Now UI, a Bootstrap 4 admin template. Speed up development with this admin dashboard for Laravel 5.5.

After installing Now UI with Composer, you can install it in your project with the following preset command:

php artisan preset nowui

The Now UI preset includes stubs for a profile page, a generic page, and a user controller to manage users.

You can learn more about this package, get full installation instructions, and view the source code on GitHub at laravel-frontend-presets/now-ui-dashboard.
